Apache Kafka seems to be the standard solution in today’s architectures, but we should ask whether it is the right choice for our needs.

We’re in a new age of Event-Driven Architecture (EDA), and this is not the first time we’ve lived through one. Before microservices and cloud, EDA was the norm in enterprise integration. Protocols and standards like JMS or AMQP were used in broker-based products like TIBCO EMS, ActiveMQ, or IBM WebSphere MQ, so this approach is nothing new.

With the rise of microservices architectures and the API-led approach, it seemed that we had forgotten the importance of messaging systems, and we had to go through the same challenges we saw in the past before arriving at a new messaging solution. So we’re coming back to EDA and the pub-sub mechanism to help us decouple consumers and producers, moving from orchestration to choreography. All these concepts fit better in today’s world, with more and more independent components that need cooperation and integration.

During this effort, we started to look at new technologies to help us implement that again. With the new reality, though, we left behind heavy protocols and standards like JMS and started to think about other options. And we need to admit that there seems to be a new king in this area, a component that shows up in today’s architectures almost no matter what: Apache Kafka.

And don’t get me wrong. Apache Kafka is fantastic: a proven, production-ready, performant solution with impressive replay capabilities and a powerful API to ease integration. But Apache Kafka has some challenges in this cloud-native world, because it doesn’t play so well with some of its rules.

If you have used Apache Kafka for some time, you are aware of its particular challenges. Its architecture comes from its LinkedIn days in 2011, when Kubernetes, Docker, and container technologies in general were not yet a thing, and that makes running Apache Kafka (a purely stateful service) in containers quite complicated. Helm charts and operators ease the journey, but it still doesn’t feel like the pieces integrate well in that environment. Another issue is geo-replication: even with components like MirrorMaker, it is not widely used, does not work smoothly, and does not feel integrated.

Other technologies are trying to provide a solution for those capabilities. One of them is another Apache Foundation project, donated by Yahoo!, named Apache Pulsar.

Don’t get me wrong; this is not about finding a new truth, that single messaging solution that is perfect for today’s architectures: it doesn’t exist. In today’s world, with so many different requirements and variables for the different kinds of applications, one size fits all is no longer true. So you should stop thinking about which messaging solution is the best one, and think more about which one serves your architecture best and fulfills both technical and business requirements.

We have covered different options for general communication: several specific solutions for synchronous communication (service mesh technologies and protocols like REST, GraphQL, or gRPC) and different ones for asynchronous communication. We need to go deeper into asynchronous communication to find what works best for you. But first, let’s talk a little more about Apache Pulsar.

Apache Pulsar

Apache Pulsar, as mentioned above, was developed internally by Yahoo! and donated to the Apache Foundation. As stated on its official website, there are several key points to mention as we start exploring this option:

- Pulsar Functions: Easily deploy lightweight compute logic using developer-friendly APIs without needing to run your own stream processing engine

- Proven in production: Apache Pulsar has run in production at Yahoo scale for over three years, with millions of messages per second across millions of topics

- Horizontally scalable: Seamlessly expand capacity to hundreds of nodes

- Low latency with durability: Designed for low publish latency (< 5ms) at scale with strong durability guarantees

- Geo-replication: Designed for configurable replication between data centers across multiple geographic regions

- Multi-tenancy: Built from the ground up as a multi-tenant system. Supports Isolation, Authentication, Authorization, and Quotas

- Persistent storage: Persistent message storage based on Apache BookKeeper. Provides IO-level isolation between write and read operations

- Client libraries: Flexible messaging models with high-level APIs for Java, C++, Python and Go

- Operability: REST Admin API for provisioning, administration, tools, and monitoring. Deploy on bare metal or Kubernetes.

So, as we can see, Apache Pulsar’s design addresses some of the main weaknesses of Apache Kafka, such as geo-replication and a cloud-native approach.

Apache Pulsar supports the pub/sub pattern, but it also provides capabilities that let it act as a traditional queue messaging system, such as its exclusive subscription mode, where only one of the subscribers will receive the message. It also provides interesting concepts and features found in other messaging systems:

- Dead Letter Topics: For messages that the consumer was not able to process.

- Persistent and Non-Persistent Topics: To decide whether your messages are persisted while in transit.

- Namespaces: To group your topics logically, so that an application’s topics can live in their own namespace, as we do in Kubernetes, for example, and some applications can be isolated from others.

- Failover: Similar to exclusive, but when the attached consumer fails, another one takes over and processes the messages.

- Shared: A round-robin approach similar to a traditional queue messaging system: all the subscribers are attached to the topic, but only one of them receives each message, so the load is distributed across all of them.

- Multi-topic subscriptions: To subscribe to several topics using a regexp, similar to the powerful and popular subject-based approach of TIBCO Rendezvous in the 90s.
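To make the difference between the exclusive, failover, and shared modes more concrete, here is a minimal in-memory sketch in Go. It is a toy model and not the Pulsar client API: the `Subscription` type, its methods, and the rule that the first attached consumer is the active one are all assumptions made for illustration, and the real broker’s selection logic is more involved.

```go
package main

import "fmt"

// Mode is a simplified stand-in for Pulsar's subscription types.
type Mode int

const (
	Exclusive Mode = iota // only one consumer may attach
	Failover              // one active consumer, the others are standbys
	Shared                // messages are spread round-robin over all consumers
)

// Subscription is a hypothetical, in-memory model of one subscription.
type Subscription struct {
	mode      Mode
	consumers []string
	next      int // round-robin position, used by Shared only
}

// Attach registers a consumer and rejects a second one in Exclusive mode.
func (s *Subscription) Attach(name string) error {
	if s.mode == Exclusive && len(s.consumers) > 0 {
		return fmt.Errorf("exclusive subscription already held by %q", s.consumers[0])
	}
	s.consumers = append(s.consumers, name)
	return nil
}

// Detach removes a consumer, for example after it has crashed.
func (s *Subscription) Detach(name string) {
	for i, c := range s.consumers {
		if c == name {
			s.consumers = append(s.consumers[:i], s.consumers[i+1:]...)
			return
		}
	}
}

// Dispatch returns the single consumer that receives the next message.
func (s *Subscription) Dispatch() (string, bool) {
	if len(s.consumers) == 0 {
		return "", false
	}
	if s.mode == Shared {
		c := s.consumers[s.next%len(s.consumers)]
		s.next++
		return c, true
	}
	// Exclusive and Failover: in this sketch the first attached consumer is active.
	return s.consumers[0], true
}

func main() {
	shared := &Subscription{mode: Shared}
	shared.Attach("a")
	shared.Attach("b")
	for i := 0; i < 4; i++ {
		c, _ := shared.Dispatch()
		fmt.Println("shared ->", c)
	}

	fo := &Subscription{mode: Failover}
	fo.Attach("primary")
	fo.Attach("standby")
	fo.Detach("primary") // simulate a failure of the active consumer
	c, _ := fo.Dispatch()
	fmt.Println("failover ->", c)

	ex := &Subscription{mode: Exclusive}
	ex.Attach("only")
	fmt.Println("exclusive second attach:", ex.Attach("intruder"))
}
```

In all three modes each message goes to exactly one consumer of the subscription. What changes is who is allowed to attach and how the receiver is chosen.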

But if you require features from Apache Kafka, you will still find similar concepts, such as partitioned topics, key-shared subscriptions, and so on. So you have everything at hand to choose the configuration that works best for your specific use cases, and you also have the option to mix and match.
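The idea behind partitioned topics and key-based routing can be sketched in a few lines of Go: hash the message key and take it modulo the number of partitions, so that messages with the same key always land in the same place and keep their relative order. The function name `partitionFor` is hypothetical, and FNV-1a is used only because it ships with Go’s standard library; it is an assumption of this sketch, not the hash function Pulsar itself uses.

```go
package main

import (
	"fmt"
	"hash/fnv"
)

// partitionFor maps a message key to one of n partitions (n must be > 0).
// FNV-1a is a stand-in chosen for this sketch, not Pulsar's own hash.
func partitionFor(key string, n int) int {
	h := fnv.New32a()
	h.Write([]byte(key))
	return int(h.Sum32() % uint32(n))
}

func main() {
	const partitions = 4
	// "order-1" appears twice and is routed to the same partition both times.
	for _, key := range []string{"order-1", "order-2", "order-1", "order-3"} {
		fmt.Printf("key=%s -> partition %d\n", key, partitionFor(key, partitions))
	}
}
```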

Apache Pulsar Architecture

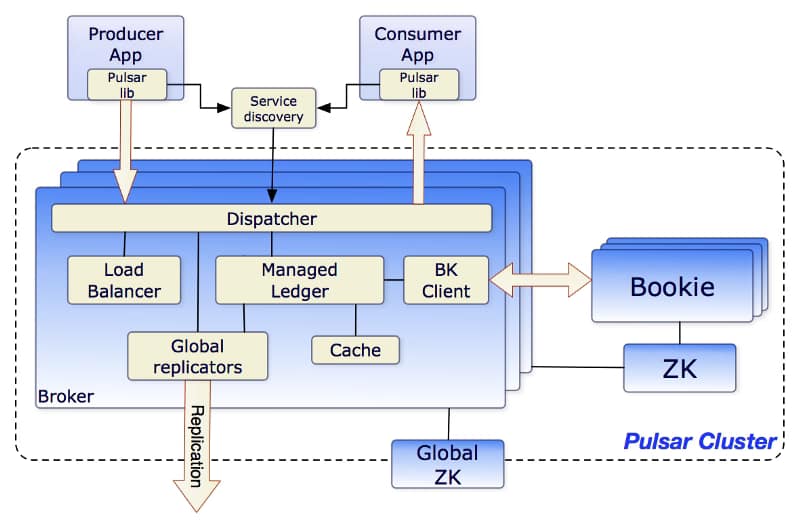

Apache Pulsar’s architecture is similar to that of other comparable messaging systems today. As the picture from the Apache Pulsar website shows, these are the main components of the architecture:

- Brokers: One or more brokers handle incoming messages from producers and dispatch messages to consumers.

- BookKeeper Cluster for persistent storage of messages.

- ZooKeeper Cluster for management purposes.

So you can see that this architecture is also quite similar to the Apache Kafka one, with the addition of a new concept: the BookKeeper Cluster.

Brokers in Apache Pulsar are stateless components that mainly run two pieces:

- HTTP Server that exposes a REST API for management and is used by consumers and producers for topic lookup.

- Dispatcher: An asynchronous TCP server that uses a custom binary protocol for all data transfers. Messages are usually dispatched out of a managed ledger cache for performance purposes. If the backlog grows too large for that cache, the broker starts reading entries from the BookKeeper cluster instead.
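The read path described in the last point can be pictured with a small Go sketch: recent entries are served from a bounded cache, and older ones come from durable storage. Everything here is hypothetical (the `broker` type, the map standing in for BookKeeper, the oldest-first eviction rule); it only illustrates the idea and does not reflect how the managed ledger is actually implemented.

```go
package main

import "fmt"

// broker is a toy model: a bounded cache of recent entries in front of a
// durable store. The store map is a stand-in for BookKeeper.
type broker struct {
	cache     map[int]string // recent entries, keyed by entry ID
	cacheSize int            // maximum number of cached entries
	oldest    int            // smallest entry ID that may still be cached
	store     map[int]string // every entry ever published
	nextID    int
}

func newBroker(cacheSize int) *broker {
	return &broker{cache: map[int]string{}, store: map[int]string{}, cacheSize: cacheSize}
}

// publish persists the entry, caches it, and evicts the oldest cached entry
// once the cache is over capacity.
func (b *broker) publish(payload string) int {
	id := b.nextID
	b.nextID++
	b.store[id] = payload
	b.cache[id] = payload
	if len(b.cache) > b.cacheSize {
		delete(b.cache, b.oldest)
		b.oldest++
	}
	return id
}

// read returns the payload and whether it came from the cache or from storage.
func (b *broker) read(id int) (payload, source string, ok bool) {
	if p, hit := b.cache[id]; hit {
		return p, "cache", true
	}
	if p, found := b.store[id]; found {
		return p, "storage", true
	}
	return "", "", false
}

func main() {
	b := newBroker(2)
	for _, p := range []string{"a", "b", "c"} {
		b.publish(p)
	}
	// A consumer that keeps up reads from the cache; one that lags behind
	// falls back to storage.
	for id := 0; id < 3; id++ {
		p, src, _ := b.read(id)
		fmt.Printf("entry %d = %q (from %s)\n", id, p, src)
	}
}
```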

To support the Global Replication (Geo-Replication), the Brokers manage replicators that tail the entries published in the local region and republish them to the remote regions.

Apache BookKeeper Cluster is used as persistent message storage. Apache BookKeeper is a distributed write-ahead log (WAL) system that manages when messages should be persisted. It also supports horizontal scaling based on the load and multi-log support. Not only are the messages persisted, but so are the cursors, which hold the consumer position for a specific topic (similar to the offset in Apache Kafka terminology).
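A cursor is easiest to understand as a position in an append-only log that is stored per subscription. The following Go sketch models that idea under simplifying assumptions: the `topicLog` type and its methods are hypothetical, every subscription starts at the earliest entry, and acknowledgements are strictly in order, which is a simplification of what a real cursor tracks.

```go
package main

import "fmt"

// topicLog is a toy append-only log with one cursor per subscription.
type topicLog struct {
	entries []string
	cursors map[string]int // subscription name -> index of the next unacknowledged entry
}

func newTopicLog() *topicLog {
	return &topicLog{cursors: map[string]int{}}
}

// publish appends a message to the end of the log.
func (t *topicLog) publish(msg string) {
	t.entries = append(t.entries, msg)
}

// next returns the entry at the subscription's cursor without moving it.
func (t *topicLog) next(sub string) (string, bool) {
	pos := t.cursors[sub]
	if pos >= len(t.entries) {
		return "", false
	}
	return t.entries[pos], true
}

// ack moves the subscription's cursor past the current entry.
func (t *topicLog) ack(sub string) {
	if t.cursors[sub] < len(t.entries) {
		t.cursors[sub]++
	}
}

// seek resets the cursor, which is what makes replay possible.
func (t *topicLog) seek(sub string, pos int) {
	if pos < 0 {
		pos = 0
	}
	if pos > len(t.entries) {
		pos = len(t.entries)
	}
	t.cursors[sub] = pos
}

func main() {
	t := newTopicLog()
	for _, m := range []string{"m0", "m1", "m2"} {
		t.publish(m)
	}

	m, _ := t.next("billing")
	fmt.Println("billing reads", m)
	t.ack("billing")
	m, _ = t.next("billing")
	fmt.Println("billing reads", m)

	// A second subscription has its own cursor and is not affected.
	m, _ = t.next("audit")
	fmt.Println("audit reads", m)

	// Rewinding the cursor replays messages that were already acknowledged.
	t.seek("billing", 0)
	m, _ = t.next("billing")
	fmt.Println("billing after replay reads", m)
}
```

Because the position lives with the subscription and not with the consumer process, a consumer that restarts can pick up where the subscription left off.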

Finally, the ZooKeeper Cluster plays the same role as in Apache Kafka: it is the metadata and configuration store for the whole system.

Hello World using Apache Pulsar

Let’s see how we can create a quick “Hello World” case using Apache Pulsar as the messaging layer, and let’s try to implement it in a cloud-native fashion. The idea is a single-node Apache Pulsar cluster in a Kubernetes installation, with a producer application built with Flogo technology and a consumer application written in Go, similar to what you can see in the diagram below:

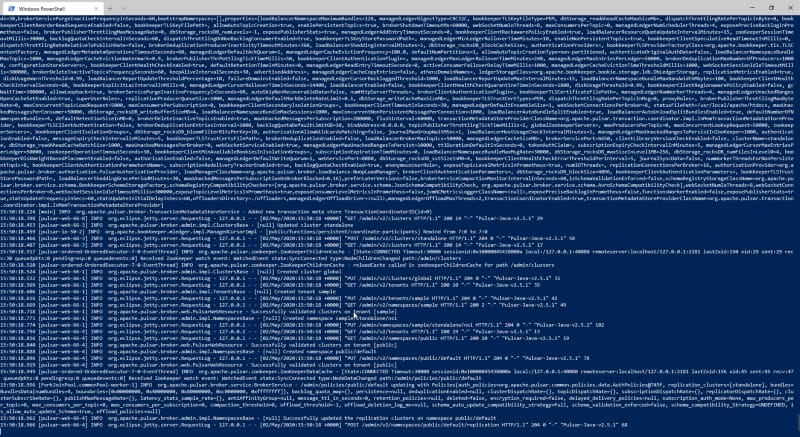

We’re going to try to keep it simple, so we will just use plain Docker this time. First of all, spin up the Apache Pulsar server with the following command:

docker run -it -p 6650:6650 -p 8080:8080 --mount source=pulsardata,target=/pulsar/data --mount source=pulsarconf,target=/pulsar/conf apachepulsar/pulsar:2.5.1 bin/pulsar standalone

And we will see an output similar to this one:

Now, we need to create simple applications, and for that, Flogo and Go will be used.

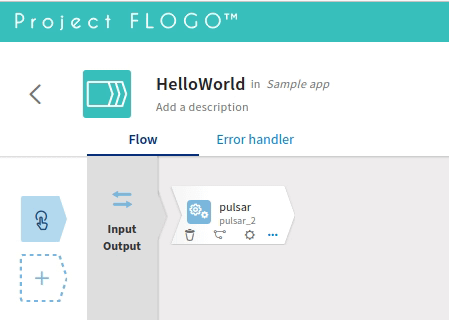

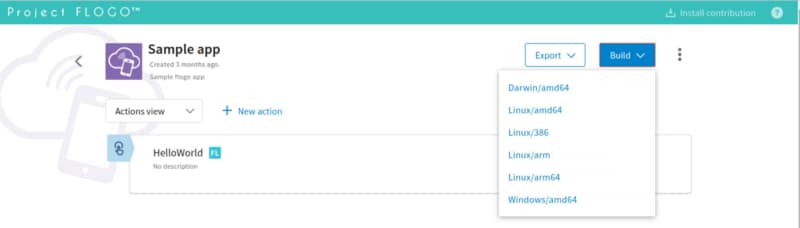

Let’s start with the producer. In this case, we will use the open-source version of Flogo to create a quick application.

First of all, we will just use the Web UI (dockerized) to do that. Run the command:

docker run -it -p 3303:3303 flogo/flogo-docker eula-accept

Next, we install a new contribution to enable the Pulsar publisher activity. To do that, we click on the “Install new contribution” button and provide the following URL:

flogo install github.com/mmussett/flogo-components/activity/pulsar

And now we will create a simple flow as you can see in the picture below:

We will now build the application using the menu, and that’s it!

To run it, just launch the application as you can see here:

./sample-app_linux_amd64

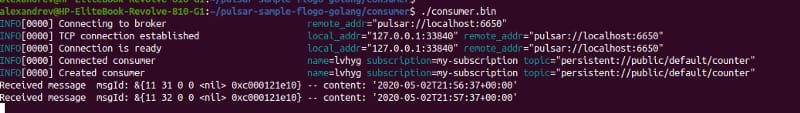

Now, we just need to create the Go consumer. To do that, we first need to install the Go package:

go get github.com/apache/pulsar-client-go/pulsar

And now we need to create the following code:

    package main

    import (
    	"fmt"
    	"log"

    	"github.com/apache/pulsar-client-go/pulsar"
    )

    func main() {
    	// Connect to the standalone broker started with Docker above.
    	client, err := pulsar.NewClient(pulsar.ClientOptions{URL: "pulsar://localhost:6650"})
    	if err != nil {
    		log.Fatal(err)
    	}
    	defer client.Close()

    	// Buffered channel on which the client delivers incoming messages.
    	channel := make(chan pulsar.ConsumerMessage, 100)

    	options := pulsar.ConsumerOptions{
    		Topic:            "counter",
    		SubscriptionName: "my-subscription",
    		Type:             pulsar.Shared,
    	}
    	options.MessageChannel = channel

    	consumer, err := client.Subscribe(options)
    	if err != nil {
    		log.Fatal(err)
    	}
    	defer consumer.Close()

    	// Receive messages from channel. The channel returns a struct which contains message and the consumer from where
    	// the message was received. It's not necessary here since we have 1 single consumer, but the channel could be
    	// shared across multiple consumers as well
    	for cm := range channel {
    		msg := cm.Message
    		fmt.Printf("Received message msgId: %v - content: '%s'\n",
    			msg.ID(), string(msg.Payload()))

    		// Acknowledge the message so it is not delivered again.
    		consumer.Ack(msg)
    	}
    }

After running both programs, you can see the following output. As you can see, we were able to connect both applications in an effortless flow.

This article is just a starting point, and we will continue talking about how to use Apache Pulsar in your architectures. If you want to take a look at the code we’ve used in this sample, you can find it here: