TIBCO BW ECS logging support is becoming a frequently requested feature, driven by the growing use of the Elastic Common Schema (ECS) in log aggregation solutions based on the Elastic Stack (previously known as the ELK stack).

We have already discussed at length the importance of log aggregation solutions and their benefits, especially in container architectures. So today we will focus on how to adapt our BW applications to support this new logging format.

The first thing we need to know is this: yes, it can be done, and it can be done regardless of your deployment model. The solution provided here works for on-premises installations as well as for container deployments using BWCE.

TIBCO BW Logging Background

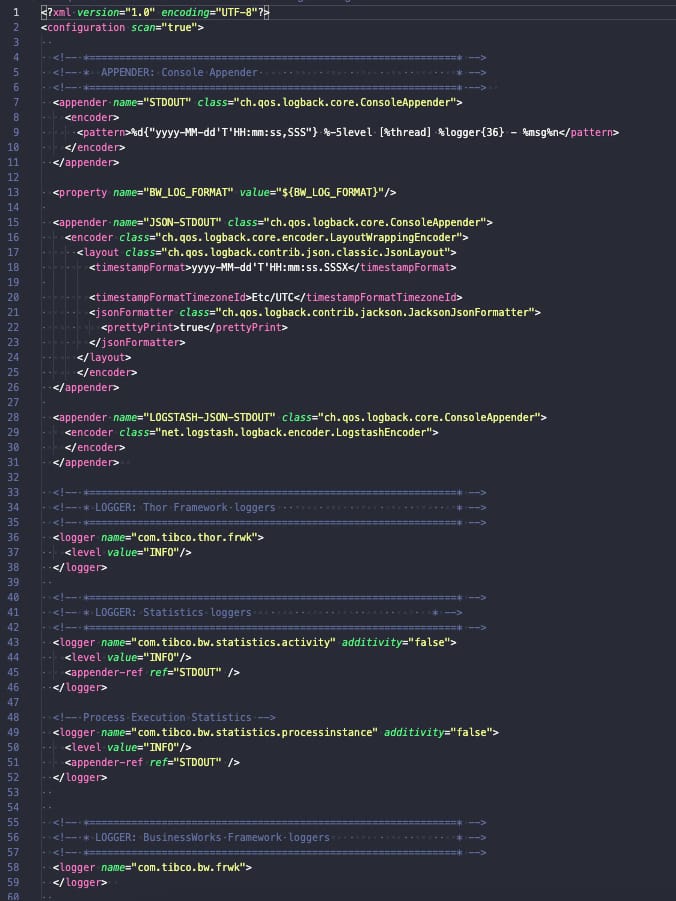

TIBCO BusinessWorks (container or not) relies on the Logback library for its logging capabilities. This library is configured through a file named logback.xml that holds whatever configuration you need, as the picture shows.

Logback is a well-known library for Java-based development, and its architecture is built on a core plus plug-ins that extend its capabilities. It is this plug-in approach that we are going to use to support ECS.

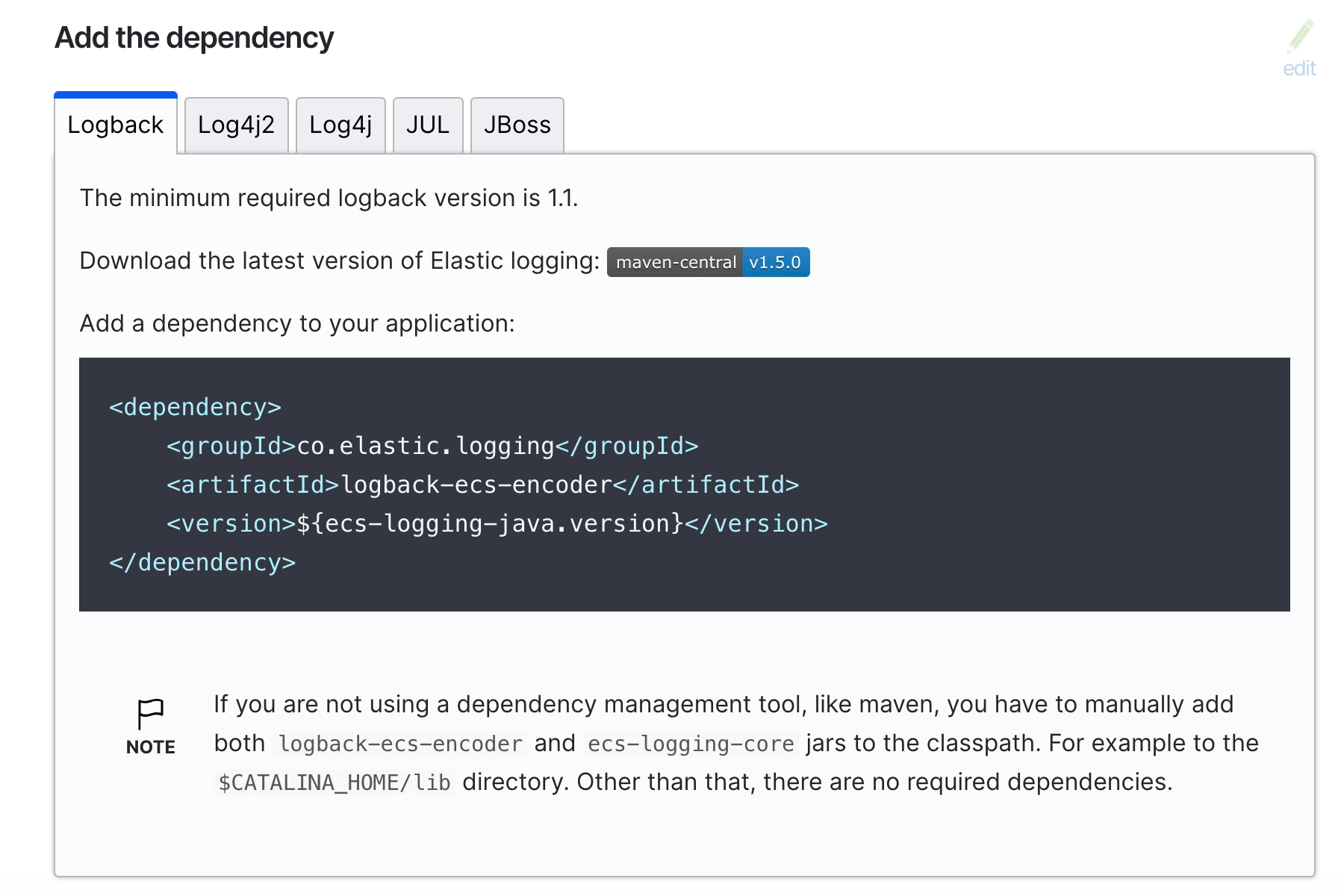

Even the official ECS documentation covers how to enable this logging format when using Logback, as you can see in the picture below and in the official documentation.

In our case, we don’t need to declare the dependency anywhere. We just need to download it, because we will include it in the existing OSGi bundles of the TIBCO BW installation. We need only the following two files:

- ecs-logging-core-1.5.0.jar

- logback-ecs-encoder-1.5.0.jar

At the time of writing, the current version of both is 1.5.0, but keep an eye on new releases to make sure you are using a recent version and avoid problems with support and vulnerabilities.
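As a rough illustration of fetching the two files, the sketch below builds their download URLs. It assumes that both artifacts are published on Maven Central under the `co.elastic.logging` group, which this article does not state, so verify the coordinates before relying on it. The function names are hypothetical.

```python
from typing import List
from urllib.request import urlretrieve

# Assumption: both artifacts live on Maven Central under this group id.
MAVEN_CENTRAL = "https://repo1.maven.org/maven2"
GROUP_ID = "co.elastic.logging"
ARTIFACTS = ("ecs-logging-core", "logback-ecs-encoder")


def jar_name(artifact: str, version: str) -> str:
    """Return the file name of an artifact, e.g. ecs-logging-core-1.5.0.jar."""
    return f"{artifact}-{version}.jar"


def jar_url(artifact: str, version: str) -> str:
    """Build the download URL using the standard Maven repository layout."""
    group_path = GROUP_ID.replace(".", "/")
    return f"{MAVEN_CENTRAL}/{group_path}/{artifact}/{version}/{jar_name(artifact, version)}"


def download_all(version: str, target_dir: str = ".") -> List[str]:
    """Download both JARs into target_dir and return the local paths."""
    paths = []
    for artifact in ARTIFACTS:
        destination = f"{target_dir}/{jar_name(artifact, version)}"
        urlretrieve(jar_url(artifact, version), destination)
        paths.append(destination)
    return paths
```

Calling `download_all("1.5.0")` would place both files in the current directory; passing the version explicitly makes it easy to move to a newer release later.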

Once we have these libraries, we need to add them to the BW system installation. The procedure differs depending on whether we are using a TIBCO on-premises installation or a TIBCO BWCE base image. To be honest, the changes we need to make are the same; only the process of applying them is different.

In the end, the task is simple: include these JAR files as part of the existing Logback OSGi bundle that TIBCO BW loads. Let’s see how to do that, starting with an on-premises installation. We will use TIBCO BWCE 2.8.2 as an example, but similar steps apply to other versions.

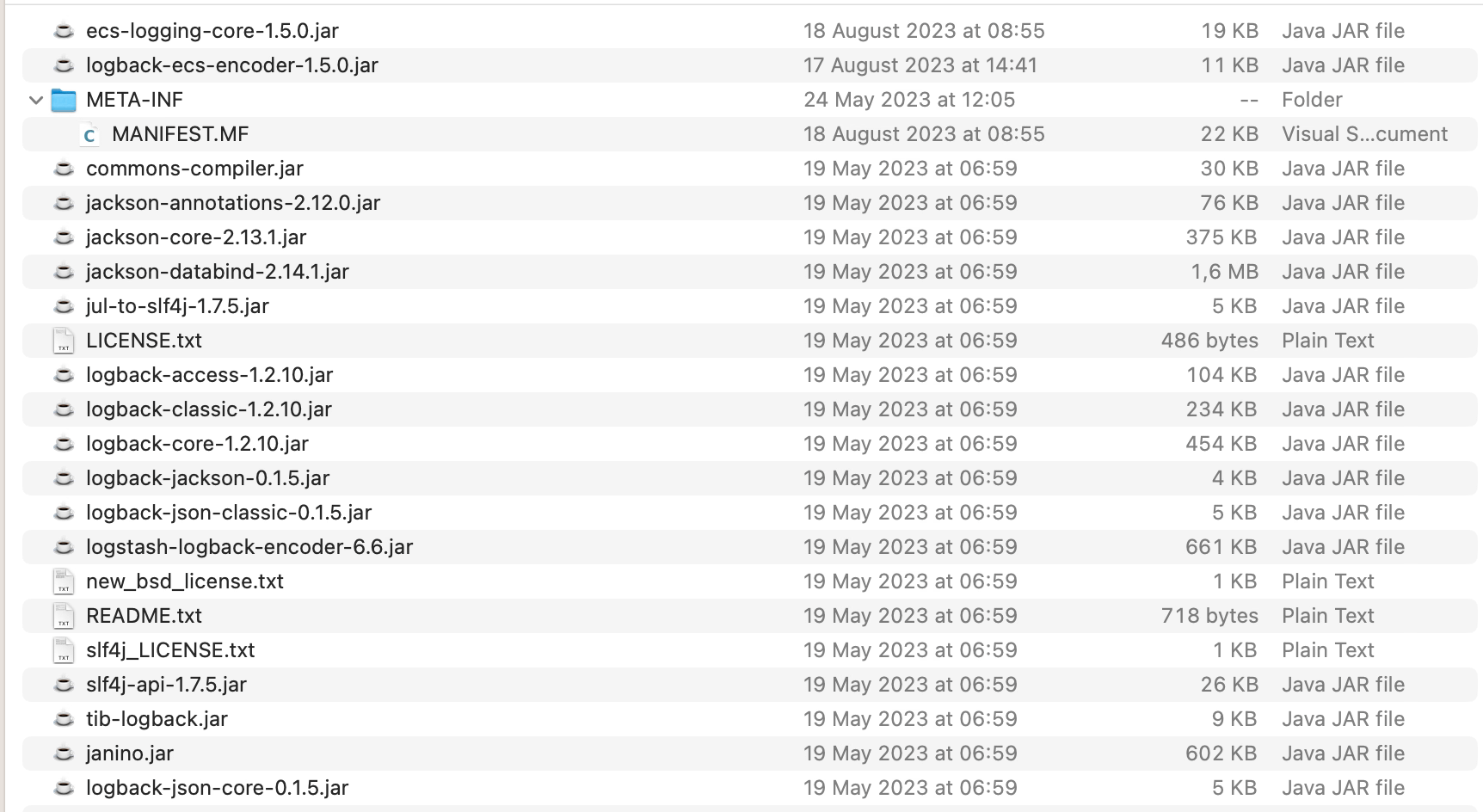

The on-premises installation is the easier case, simply because it requires fewer steps than modifying a TIBCO BWCE base image. In this case, we go to the following location: <TIBCO_HOME>/bwce/2.8/system/shared/com.tibco.tpcl.logback_1.2.1600.002/

- We will place the downloaded JARs in that folder.

- We will open the META-INF/MANIFEST.MF file and make the following modifications:

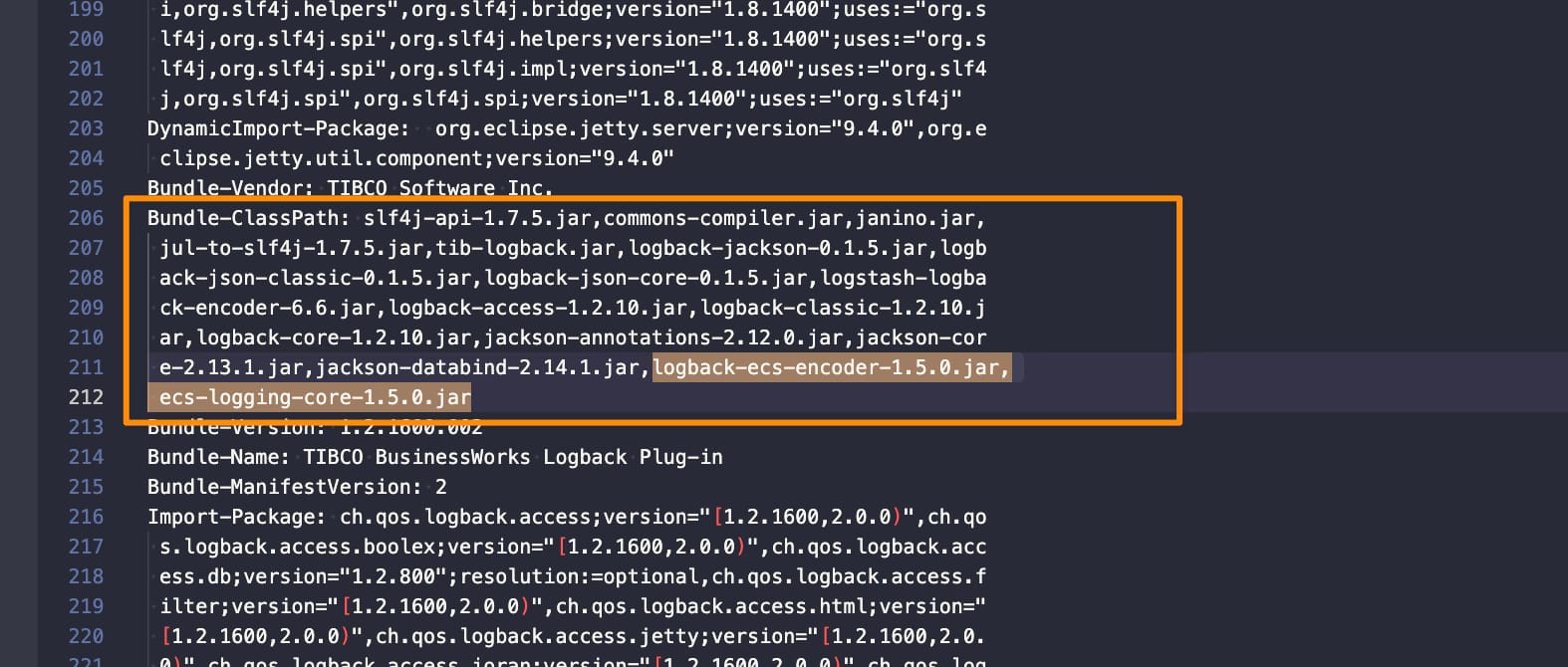

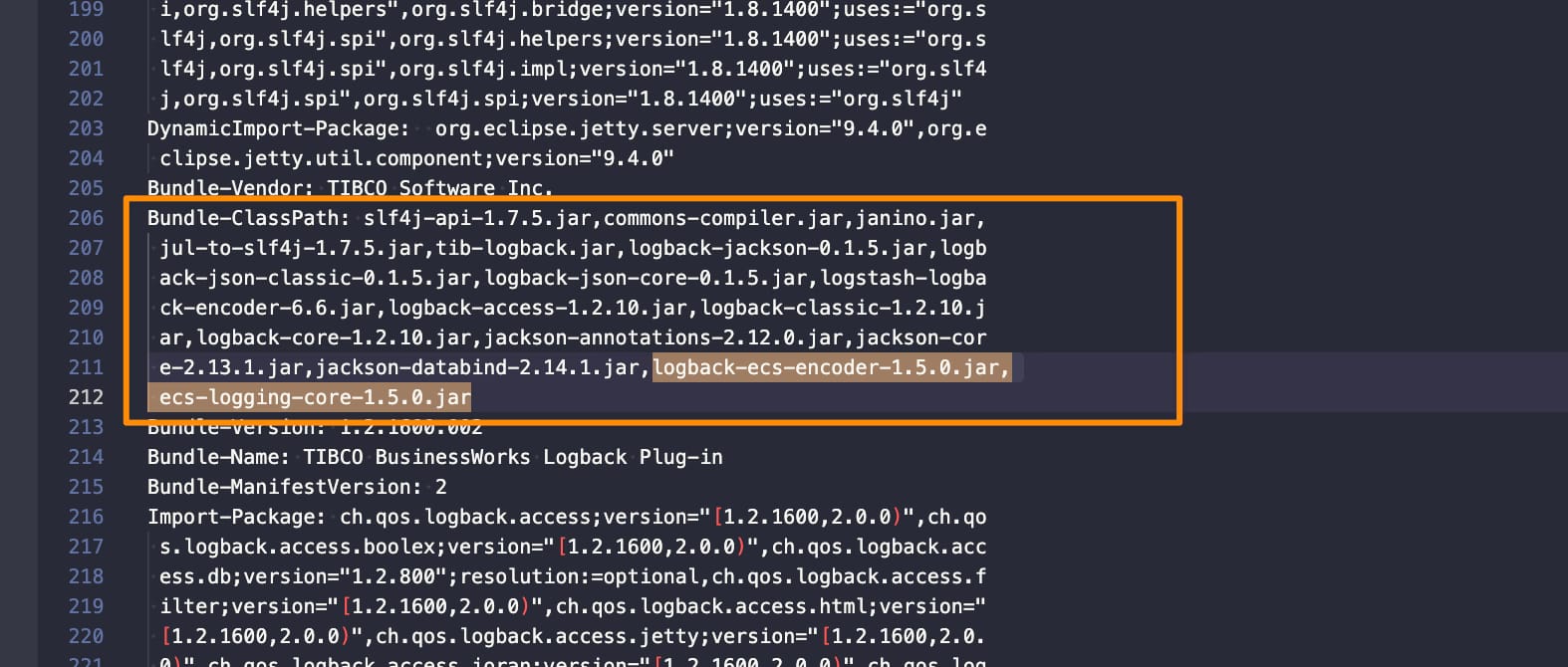

- Add those JARs to the Bundle-ClassPath section:

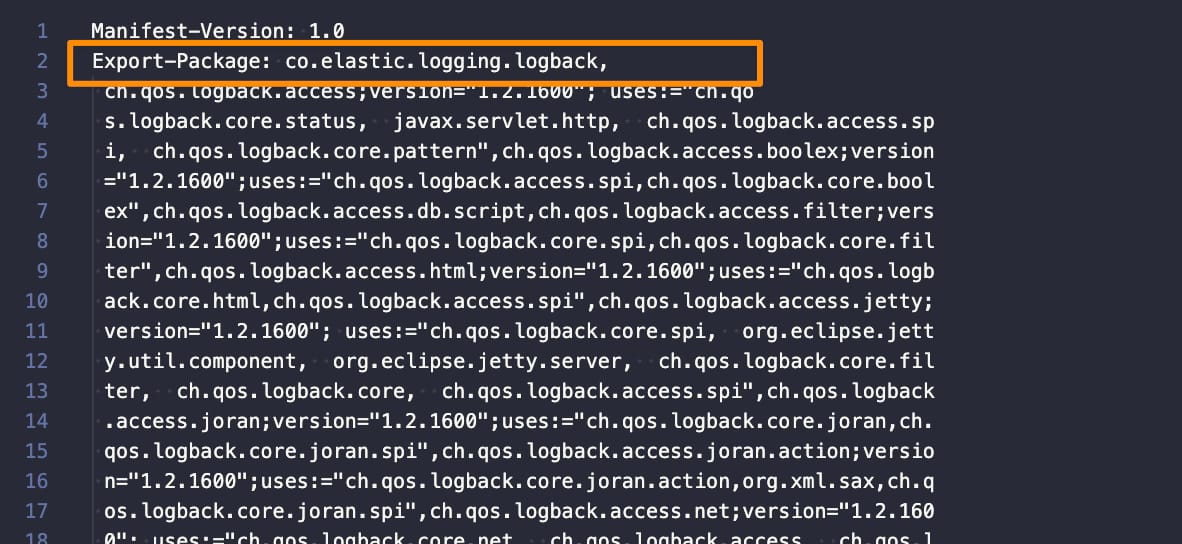

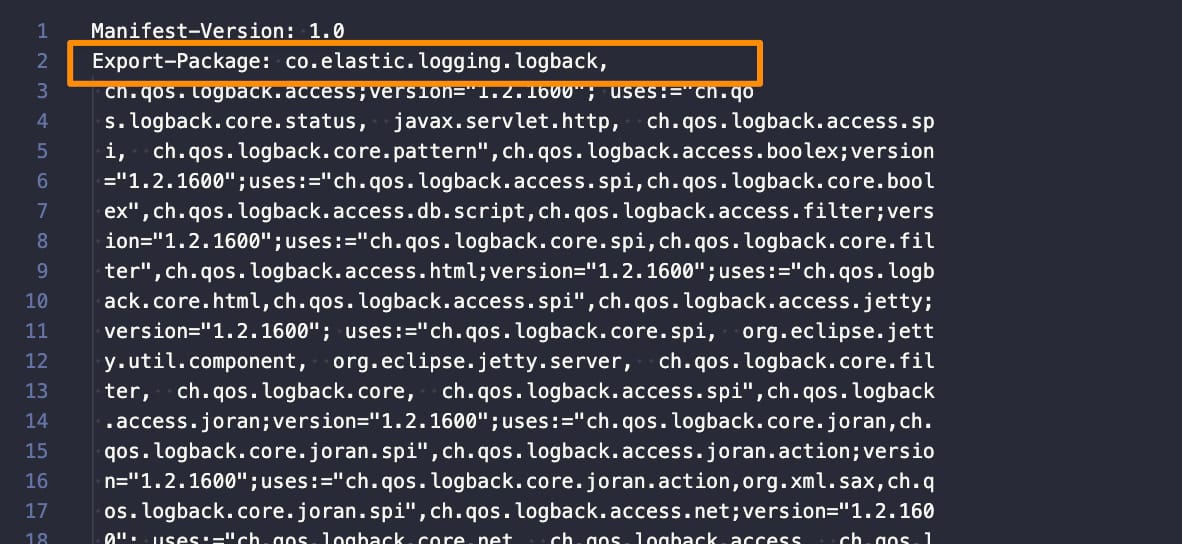

- Include the following package (co.elastic.logging.logback) as part of the exported packages by adding it to the Export-Package section.
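The manifest changes listed above can also be scripted. The following is a minimal sketch, not TIBCO tooling: it assumes the MANIFEST.MF contains only a main section (no per-entry sections), that its content is plain ASCII, and that appending the new values at the end of each header is acceptable. All function names are hypothetical.

```python
from typing import List, Tuple

ECS_JARS = ["ecs-logging-core-1.5.0.jar", "logback-ecs-encoder-1.5.0.jar"]
ECS_PACKAGE = "co.elastic.logging.logback"

Headers = List[Tuple[str, str]]


def parse_manifest(text: str) -> Headers:
    """Parse headers, joining continuation lines (those starting with a space).

    Assumption: the manifest has a single main section, so blank lines are skipped.
    """
    headers: Headers = []
    for line in text.splitlines():
        if not line:
            continue
        if line.startswith(" ") and headers:
            name, value = headers[-1]
            headers[-1] = (name, value + line[1:])
        else:
            name, _, value = line.partition(": ")
            headers.append((name, value))
    return headers


def add_values(headers: Headers, name: str, values: List[str], initial: str = "") -> None:
    """Append values to a comma-separated header, creating the header if needed."""
    for index, (key, current) in enumerate(headers):
        if key.lower() == name.lower():  # manifest header names are case-insensitive
            missing = [v for v in values if v not in current]
            parts = [current, *missing] if current else missing
            headers[index] = (key, ",".join(parts))
            return
    headers.append((name, ",".join(([initial] if initial else []) + values)))


def fold(line: str, width: int = 72) -> str:
    """Wrap a header at 72 characters using manifest continuation lines."""
    parts = [line[:width]]
    rest = line[width:]
    while rest:
        parts.append(" " + rest[: width - 1])
        rest = rest[width - 1:]
    return "\n".join(parts)


def patch_manifest(text: str) -> str:
    """Return the manifest text with the ECS JARs and package added."""
    headers = parse_manifest(text)
    # "." keeps the bundle root on the class path if the header did not exist yet.
    add_values(headers, "Bundle-ClassPath", ECS_JARS, initial=".")
    add_values(headers, "Export-Package", [ECS_PACKAGE])
    return "\n".join(fold(f"{key}: {value}") for key, value in headers) + "\n"
```

Running `patch_manifest` twice produces the same result, so the script can be re-applied safely. Keep a backup of the original MANIFEST.MF before overwriting it.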

Once this is done, our TIBCO BW installation supports the ECS format, and we just need to configure logback.xml to use it, following the official ECS documentation. We need to include the following encoder:

    <encoder class="co.elastic.logging.logback.EcsEncoder">
        <serviceName>my-application</serviceName>
        <serviceVersion>my-application-version</serviceVersion>
        <serviceEnvironment>my-application-environment</serviceEnvironment>
        <serviceNodeName>my-application-cluster-node</serviceNodeName>
    </encoder>

For example, if we modify the default logback.xml configuration file with this information, we will have something like this:

    <?xml version="1.0" encoding="UTF-8"?>
    <configuration scan="true">

        <!-- *=============================================================* -->
        <!-- * APPENDER: Console Appender * -->
        <!-- *=============================================================* -->
        <appender name="STDOUT" class="ch.qos.logback.core.ConsoleAppender">
            <encoder class="co.elastic.logging.logback.EcsEncoder">
                <serviceName>a</serviceName>
                <serviceVersion>b</serviceVersion>
                <serviceEnvironment>c</serviceEnvironment>
                <serviceNodeName>d</serviceNodeName>
            </encoder>
        </appender>

        <!-- *=============================================================* -->
        <!-- * LOGGER: Thor Framework loggers * -->
        <!-- *=============================================================* -->
        <logger name="com.tibco.thor.frwk">
            <level value="INFO"/>
        </logger>

        <!-- *=============================================================* -->
        <!-- * LOGGER: BusinessWorks Framework loggers * -->
        <!-- *=============================================================* -->
        <logger name="com.tibco.bw.frwk">
            <level value="WARN"/>
        </logger>
        <logger name="com.tibco.bw.frwk.engine">
            <level value="INFO"/>
        </logger>

        <!-- *=============================================================* -->
        <!-- * LOGGER: BusinessWorks Engine loggers * -->
        <!-- *=============================================================* -->
        <logger name="com.tibco.bw.core">
            <level value="WARN"/>
        </logger>
        <logger name="com.tibco.bx">
            <level value="ERROR"/>
        </logger>
        <logger name="com.tibco.pvm">
            <level value="ERROR"/>
        </logger>
        <logger name="configuration.management.logger">
            <level value="INFO"/>
        </logger>

        <!-- *=============================================================* -->
        <!-- * LOGGER: BusinessWorks Palette and Activity loggers * -->
        <!-- *=============================================================* -->
        <!-- Default Log activity logger -->
        <logger name="com.tibco.bw.palette.generalactivities.Log">
            <level value="DEBUG"/>
        </logger>
        <logger name="com.tibco.bw.palette">
            <level value="ERROR"/>
        </logger>

        <!-- *=============================================================* -->
        <!-- * LOGGER: BusinessWorks Binding loggers * -->
        <!-- *=============================================================* -->
        <!-- SOAP Binding logger -->
        <logger name="com.tibco.bw.binding.soap">
            <level value="ERROR"/>
        </logger>
        <!-- REST Binding logger -->
        <logger name="com.tibco.bw.binding.rest">
            <level value="ERROR"/>
        </logger>

        <!-- *=============================================================* -->
        <!-- * LOGGER: BusinessWorks Shared Resource loggers * -->
        <!-- *=============================================================* -->
        <logger name="com.tibco.bw.sharedresource">
            <level value="ERROR"/>
        </logger>

        <!-- *=============================================================* -->
        <!-- * LOGGER: BusinessWorks Schema Cache loggers * -->
        <!-- *=============================================================* -->
        <logger name="com.tibco.bw.cache.runtime.xsd">
            <level value="ERROR"/>
        </logger>
        <logger name="com.tibco.bw.cache.runtime.wsdl">
            <level value="ERROR"/>
        </logger>

        <!-- *=============================================================* -->
        <!-- * LOGGER: BusinessWorks Governance loggers * -->
        <!-- *=============================================================* -->
        <!-- Governance: Policy Director logger1 -->
        <logger name="com.tibco.governance">
            <level value="ERROR"/>
        </logger>
        <logger name="com.tibco.amx.governance">
            <level value="WARN"/>
        </logger>
        <!-- Governance: Policy Director logger2 -->
        <logger name="com.tibco.governance.pa.action.runtime.PolicyProperties">
            <level value="ERROR"/>
        </logger>
        <!-- Governance: SPM logger1 -->
        <logger name="com.tibco.governance.spm">
            <level value="ERROR"/>
        </logger>
        <!-- Governance: SPM logger2 -->
        <logger name="rta.client">
            <level value="ERROR"/>
        </logger>

        <!-- *=============================================================* -->
        <!-- * LOGGER: BusinessWorks Miscellaneous Loggers * -->
        <!-- *=============================================================* -->
        <logger name="com.tibco.bw.platformservices">
            <level value="INFO"/>
        </logger>
        <logger name="com.tibco.bw.core.runtime.statistics">
            <level value="ERROR"/>
        </logger>

        <!-- *=============================================================* -->
        <!-- * LOGGER: Other loggers * -->
        <!-- *=============================================================* -->
        <logger name="org.apache.axis2">
            <level value="ERROR"/>
        </logger>
        <logger name="org.eclipse">
            <level value="ERROR"/>
        </logger>
        <logger name="org.quartz">
            <level value="ERROR"/>
        </logger>
        <logger name="org.apache.commons.httpclient.util.IdleConnectionHandler">
            <level value="ERROR"/>
        </logger>

        <!-- *=============================================================* -->
        <!-- * LOGGER: User loggers. User's custom loggers should be * -->
        <!-- * configured in this section. * -->
        <!-- *=============================================================* -->

        <!-- *=============================================================* -->
        <!-- * ROOT * -->
        <!-- *=============================================================* -->
        <root level="ERROR">
            <appender-ref ref="STDOUT" />
        </root>

    </configuration>

You can also apply further custom configuration based on the options described on the ECS encoder configuration page.
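If you maintain several logback.xml files, the encoder swap can be automated. The sketch below is one possible approach using Python's standard `xml.etree.ElementTree`: it replaces the encoder of every appender with the ECS encoder. The function name is hypothetical, and the default parser drops XML comments, so the section banners of the original file would be lost in the output.

```python
import xml.etree.ElementTree as ET
from typing import Dict

ECS_ENCODER_CLASS = "co.elastic.logging.logback.EcsEncoder"


def use_ecs_encoder(logback_xml: str, service: Dict[str, str]) -> str:
    """Return the configuration with every appender using the ECS encoder.

    `service` maps encoder child tags (for example serviceName) to their values.
    """
    # Parsing bytes lets the parser honour the encoding in the XML declaration.
    root = ET.fromstring(logback_xml.encode("utf-8"))
    for appender in list(root.iter("appender")):
        # Drop any existing encoder (for example a pattern-based one).
        for old_encoder in appender.findall("encoder"):
            appender.remove(old_encoder)
        encoder = ET.SubElement(appender, "encoder", {"class": ECS_ENCODER_CLASS})
        for tag, text in service.items():
            ET.SubElement(encoder, tag).text = text
    return ET.tostring(root, encoding="unicode")
```

A call such as `use_ecs_encoder(text, {"serviceName": "a", "serviceVersion": "b"})` returns the modified XML as a string, which you can review before writing it back to disk.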

How to enable TIBCO BW ECS Logging Support?

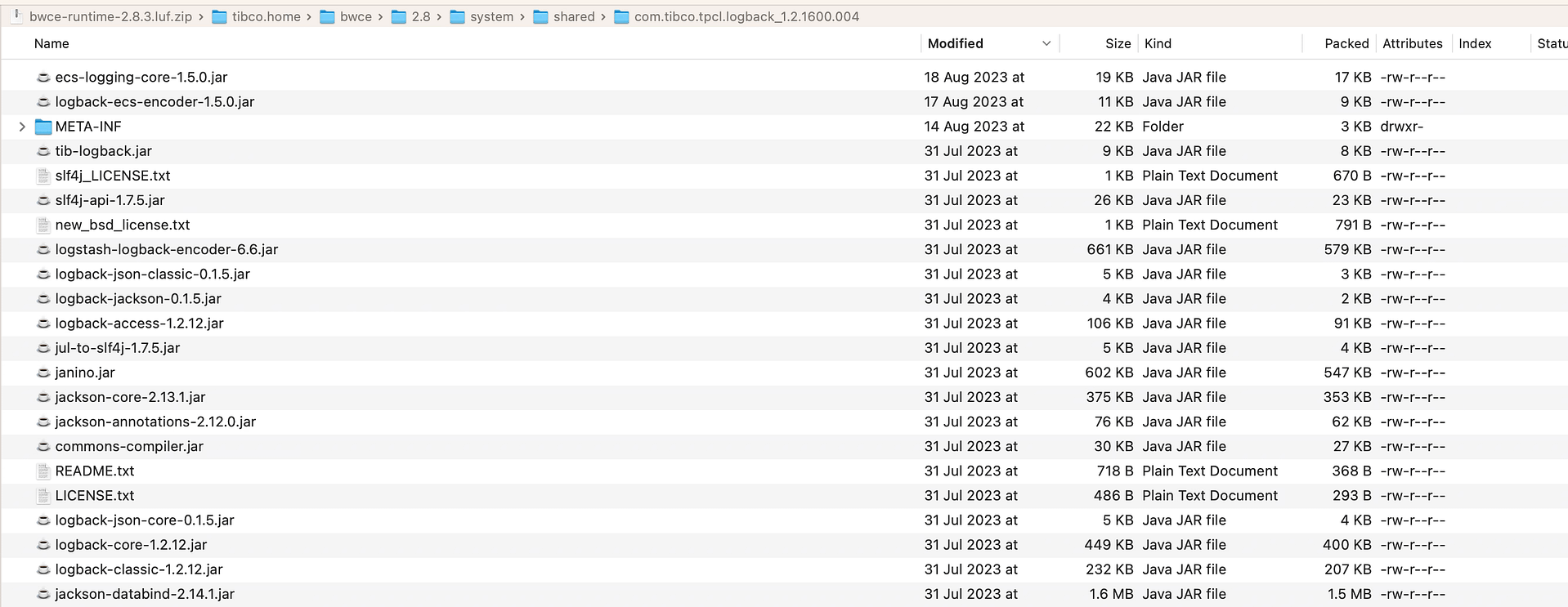

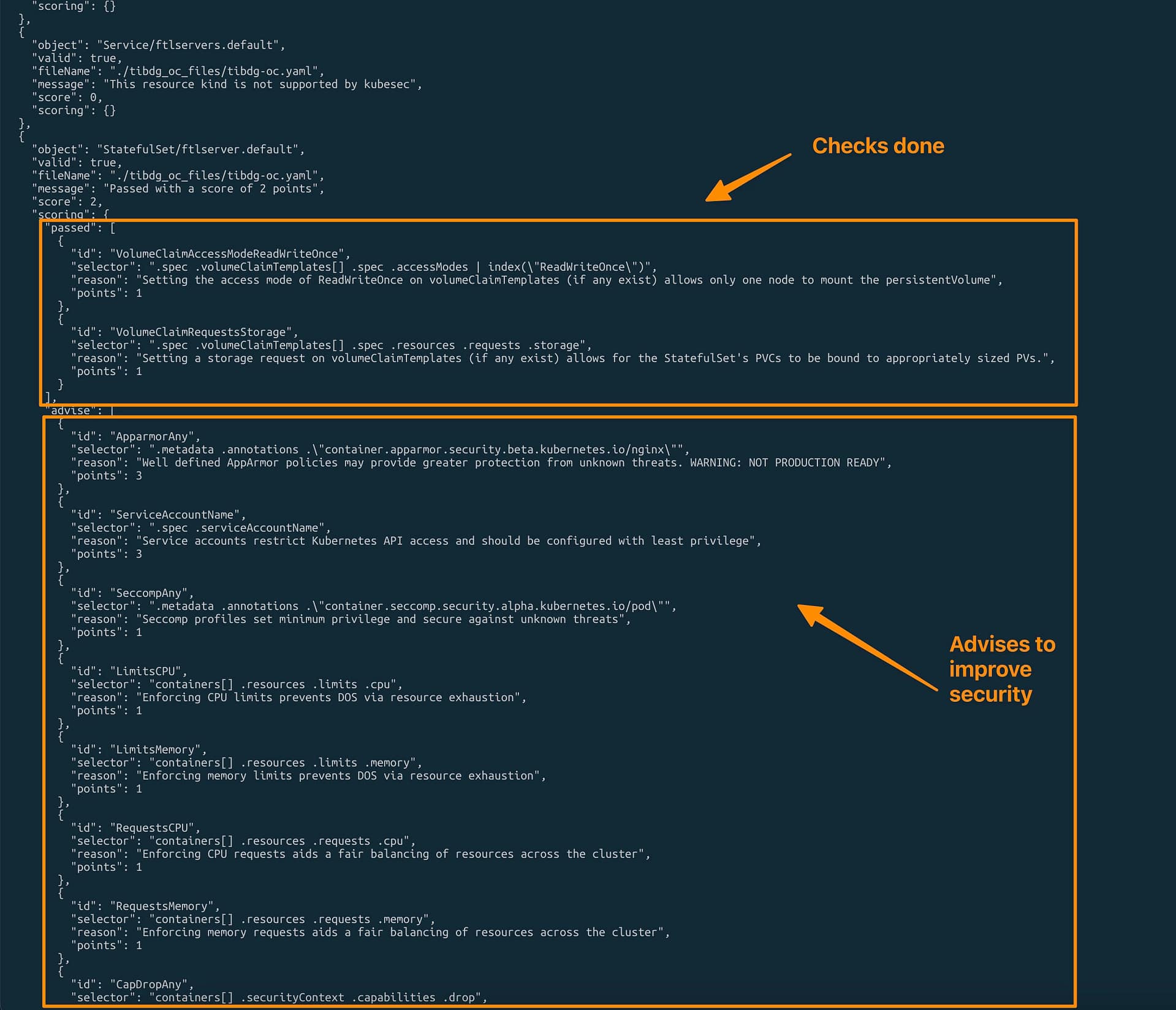

For BWCE, the steps are similar, but all the runtime components are packaged inside the base-runtime-version.zip that we download from the TIBCO eDelivery site, so we need a tool to open that ZIP and make the following modifications:

- We will place the downloaded JARs in the folder /tibco.home/bwce/2.8/system/shared/com.tibco.tpcl.logback_1.2.1600.004

- We will open the META-INF/MANIFEST.MF file and make the following modifications:

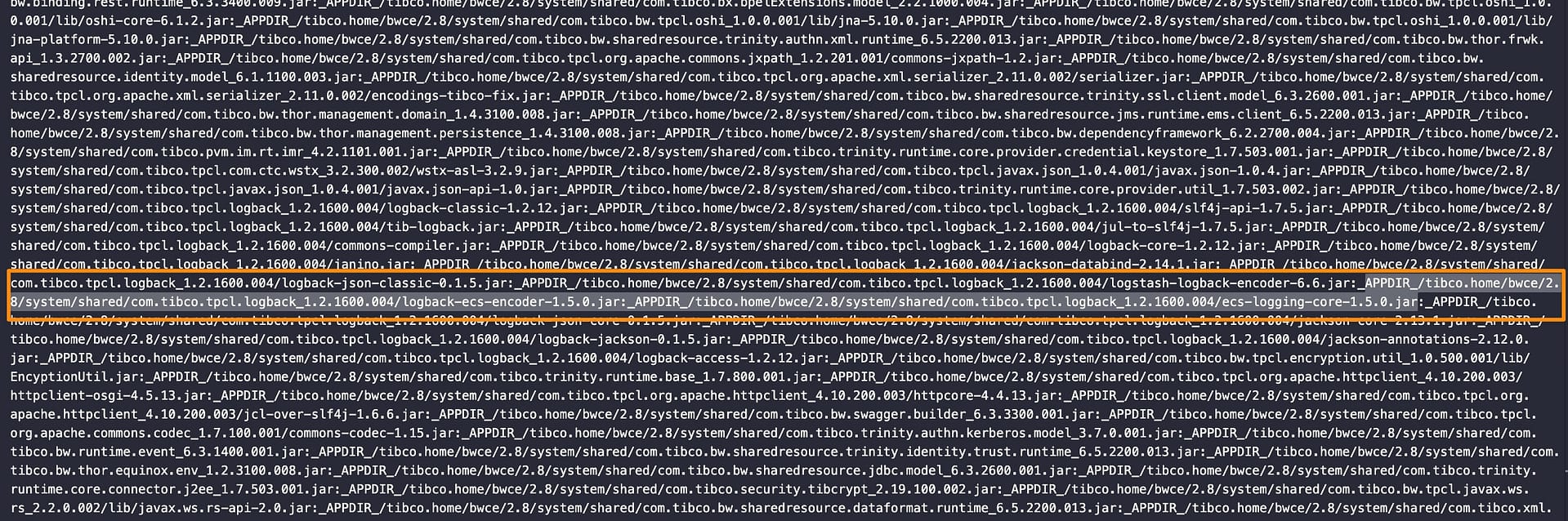

- Add those JARs to the Bundle-ClassPath section.

- Include the following package (co.elastic.logging.logback) as part of the exported packages by adding it to the Export-Package section.

- Additionally, we need to modify the bwappnode script in /tibco.home/bwce/2.8/bin so that the JAR files are also added to the classpath that the BWCE base image uses at runtime, which ensures they are loaded.
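Because a ZIP archive cannot be edited in place with Python's standard library, one way to script the steps above is to copy the archive entry by entry while swapping in the modified files. This is a minimal sketch under assumptions: the entry names inside the ZIP are taken to start with `tibco.home/` (without a leading slash), and the function name is hypothetical. It does not show how to edit bwappnode; it only writes back content you have already prepared.

```python
import zipfile
from typing import Dict

# Assumption: entry names inside the runtime ZIP use this prefix.
BUNDLE_DIR = "tibco.home/bwce/2.8/system/shared/com.tibco.tpcl.logback_1.2.1600.004/"


def rebuild_runtime_zip(src, dst, extra_files: Dict[str, bytes],
                        replacements: Dict[str, bytes]) -> None:
    """Copy src to dst, replacing some entries and adding new ones.

    src and dst may be file paths or binary file-like objects.
    """
    with zipfile.ZipFile(src) as zin, \
            zipfile.ZipFile(dst, "w", zipfile.ZIP_DEFLATED) as zout:
        for info in zin.infolist():
            original = zin.read(info.filename)
            # Reusing the ZipInfo keeps metadata such as file permissions.
            zout.writestr(info, replacements.get(info.filename, original))
        for name, data in extra_files.items():
            zout.writestr(name, data)
```

In practice `replacements` would hold the patched MANIFEST.MF and bwappnode contents, and `extra_files` the two JARs keyed by `BUNDLE_DIR` plus the file name. Writing to a new file rather than overwriting the download keeps the original archive available for comparison.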

Now we can build our BWCE base image as usual and modify the logback.xml as explained above. Here you can see the output of a sample application using this configuration:

{"@timestamp":"2023-08-28T12:49:08.524Z","log.level": "INFO","message":"TIBCO BusinessWorks version 2.8.2, build V17, 2023-05-19","ecs.version": "1.2.0","service.name":"a","service.version":"b","service.environment":"c","service.node.name":"d","event.dataset":"a","process.thread.name":"main","log.logger":"com.tibco.thor.frwk"}

<>@BWEclipseAppNode> {"@timestamp":"2023-08-28T12:49:25.435Z","log.level": "INFO","message":"Started by BusinessStudio.","ecs.version": "1.2.0","service.name":"a","service.version":"b","service.environment":"c","service.node.name":"d","event.dataset":"a","process.thread.name":"main","log.logger":"com.tibco.thor.frwk.Deployer"}

{"@timestamp":"2023-08-28T12:49:32.795Z","log.level": "INFO","message":"TIBCO-BW-FRWK-300002: BW Engine [Main] started successfully.","ecs.version": "1.2.0","service.name":"a","service.version":"b","service.environment":"c","service.node.name":"d","event.dataset":"a","process.thread.name":"main","log.logger":"com.tibco.bw.frwk.engine.BWEngine"}

{"@timestamp":"2023-08-28T12:49:34.338Z","log.level": "INFO","message":"TIBCO-THOR-FRWK-300001: Started OSGi Framework of AppNode [BWEclipseAppNode] in AppSpace [BWEclipseAppSpace] of Domain [BWEclipseDomain]","ecs.version": "1.2.0","service.name":"a","service.version":"b","service.environment":"c","service.node.name":"d","event.dataset":"a","process.thread.name":"Framework Event Dispatcher: Equinox Container: 1395256a-27a2-4e91-b774-310e85b0b87c","log.logger":"com.tibco.thor.frwk.Deployer"}

{"@timestamp":"2023-08-28T12:49:34.456Z","log.level": "INFO","message":"TIBCO-THOR-FRWK-300018: Deploying BW Application [t3:1.0].","ecs.version": "1.2.0","service.name":"a","service.version":"b","service.environment":"c","service.node.name":"d","event.dataset":"a","process.thread.name":"Framework Event Dispatcher: Equinox Container: 1395256a-27a2-4e91-b774-310e85b0b87c","log.logger":"com.tibco.thor.frwk.Application"}

{"@timestamp":"2023-08-28T12:49:34.524Z","log.level": "INFO","message":"TIBCO-THOR-FRWK-300021: All Application dependencies are resolved for Application [t3:1.0]","ecs.version": "1.2.0","service.name":"a","service.version":"b","service.environment":"c","service.node.name":"d","event.dataset":"a","process.thread.name":"Framework Event Dispatcher: Equinox Container: 1395256a-27a2-4e91-b774-310e85b0b87c","log.logger":"com.tibco.thor.frwk.Application"}

{"@timestamp":"2023-08-28T12:49:34.541Z","log.level": "INFO","message":"Started by BusinessStudio, ignoring .enabled settings.","ecs.version": "1.2.0","service.name":"a","service.version":"b","service.environment":"c","service.node.name":"d","event.dataset":"a","process.thread.name":"Framework Event Dispatcher: Equinox Container: 1395256a-27a2-4e91-b774-310e85b0b87c","log.logger":"com.tibco.thor.frwk.Application"}

{"@timestamp":"2023-08-28T12:49:35.842Z","log.level": "INFO","message":"TIBCO-THOR-FRWK-300006: Started BW Application [t3:1.0]","ecs.version": "1.2.0","service.name":"a","service.version":"b","service.environment":"c","service.node.name":"d","event.dataset":"a","process.thread.name":"EventAdminThread #1","log.logger":"com.tibco.thor.frwk.Application"}

{"@timestamp":"2023-08-28T12:49:35.954Z","log.level": "INFO","message":"aaaaaaa ","ecs.version": "1.2.0","service.name":"a","service.version":"b","service.environment":"c","service.node.name":"d","event.dataset":"a","process.thread.name":"bwEngThread:In-Memory Process Worker-1","log.logger":"com.tibco.bw.palette.generalactivities.Log.t3.module.Log"}

gosh: stopping shell
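To check that output like the above is well-formed, each line can be parsed as JSON. The sketch below is a hypothetical helper, not part of any Elastic or TIBCO tooling: it skips any console prefix before the first `{` (such as the shell prompt seen on one of the lines) and ignores lines that are not ECS events.

```python
import json
from typing import Optional


def parse_ecs_line(line: str) -> Optional[dict]:
    """Return the ECS event contained in a console line, or None if there is none."""
    start = line.find("{")
    if start == -1:
        return None
    try:
        event = json.loads(line[start:])
    except json.JSONDecodeError:
        return None
    # Treat only JSON objects carrying a timestamp as ECS events.
    if isinstance(event, dict) and "@timestamp" in event:
        return event
    return None
```

Filtering a captured log with this function makes it easy to assert, for example, that every event carries the expected `service.name` before shipping the logs to the Elastic Stack.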