Kubernetes remains one of the most widely used platforms for deploying, managing, and scaling containerized applications, and its feature set keeps growing. One of the more useful additions is the Ephemeral Container. In this blog post, we will look at what Ephemeral Containers are, explore their primary use cases, and learn how to use them, following the official Kubernetes documentation.

What Are Ephemeral Containers?

Ephemeral Containers, introduced as an alpha feature in Kubernetes 1.16 and stable since Kubernetes 1.25, offer a powerful toolset for debugging, diagnosing, and troubleshooting issues within your Kubernetes pods without requiring you to alter your pod’s original configuration. Unlike regular containers, which are part of the pod’s definition from the start, ephemeral containers are added dynamically to a running pod, providing you with a temporary environment to execute diagnostic tasks. They come with no resource guarantees and are never restarted automatically, so they are not suited to running application workloads.

The good thing about ephemeral containers is that they give you all the tools required to do the job (debugging, data recovery, or anything else that might be needed) without adding those tools to the base container images, which would increase their attack surface.
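One way to see that ephemeral containers are kept apart from a pod's regular containers is to look at where Kubernetes records them: the pod's `spec.ephemeralContainers` field. The sketch below lists the ephemeral containers attached to a pod. The function name `list_ephemeral_containers` and the `KUBECTL` override variable are assumptions made for this example and are not part of kubectl.

```shell
# List the names of the ephemeral containers attached to a pod.
# Arguments: <pod-name> [namespace] (namespace defaults to "default").
# Setting KUBECTL=echo prints the command instead of running it.
list_ephemeral_containers() {
  pod="$1"
  namespace="${2:-default}"
  "${KUBECTL:-kubectl}" get pod "$pod" -n "$namespace" \
    -o jsonpath='{.spec.ephemeralContainers[*].name}'
}
```

For example, `list_ephemeral_containers myapp my-namespace` would print the names of any ephemeral containers in the pod `myapp`, or nothing if none have been added.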

Main Use-Cases of Ephemeral Containers

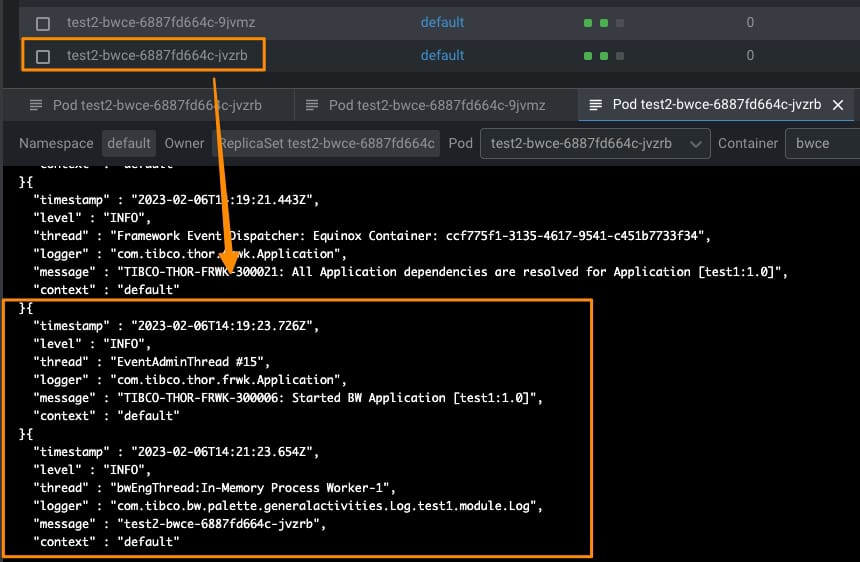

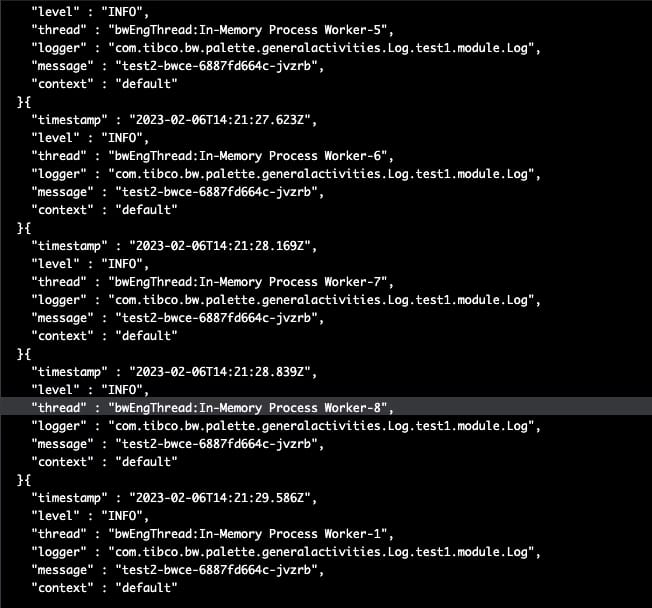

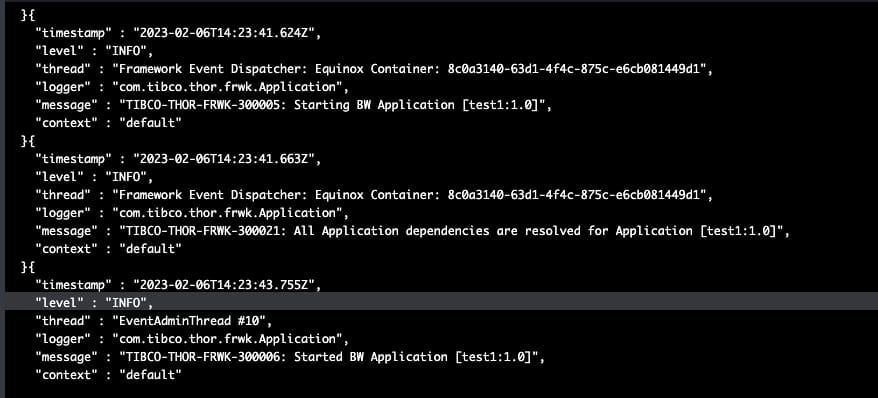

- Troubleshooting and Debugging: Ephemeral Containers shine brightest when troubleshooting and debugging. They allow you to inject a new container into a problematic pod to gather logs, examine files, run commands, or even install diagnostic tools on the fly. This is particularly valuable when encountering issues that are difficult to reproduce or diagnose in a static environment.

- Log Collection and Analysis: When a pod encounters issues, inspecting its logs is often essential. Ephemeral Containers make this process seamless by enabling you to spin up a temporary container with log analysis tools, giving you instant access to log files and aiding in identifying the root cause of problems.

- Data Recovery and Repair: Ephemeral Containers can also be used for data recovery and repair scenarios. Imagine a situation where a database pod faces corruption. With an ephemeral container, you can mount the pod’s storage volume, perform data recovery operations, and potentially repair the data without compromising the running pod.

- Resource Monitoring and Analysis: Performance bottlenecks or resource constraints can sometimes affect a pod’s functionality. Ephemeral Containers allow you to analyze resource utilization, run diagnostics, and profile the pod’s environment, helping you optimize its performance.
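As an illustration of the troubleshooting use case above, the following sketch attaches an interactive ephemeral container that shares the process namespace of one specific container in the pod, using the `--target` flag of kubectl debug. The function name, the default `busybox:1.28` image, and the `KUBECTL` override are assumptions made for this example. Note that `--target` only works if the container runtime supports it.

```shell
# Attach an interactive ephemeral container to a running pod and share
# the process namespace of one of its containers (via --target).
# Arguments: <pod-name> <target-container> [debug-image]
# Setting KUBECTL=echo prints the command instead of running it.
debug_container() {
  pod="$1"
  target="$2"
  image="${3:-busybox:1.28}"
  "${KUBECTL:-kubectl}" debug -it "$pod" --image="$image" --target="$target"
}
```

Calling `debug_container myapp app` should open a shell in the debug container, from which tools such as `ps` can see the processes of the `app` container.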

Implementing Ephemeral Containers

Thanks to Kubernetes' user-friendly approach, implementing ephemeral containers is straightforward. Kubernetes provides the kubectl debug command, which streamlines the process of attaching ephemeral containers to pods. This command lets you specify the pod and namespace and choose the debugging container image to be injected.

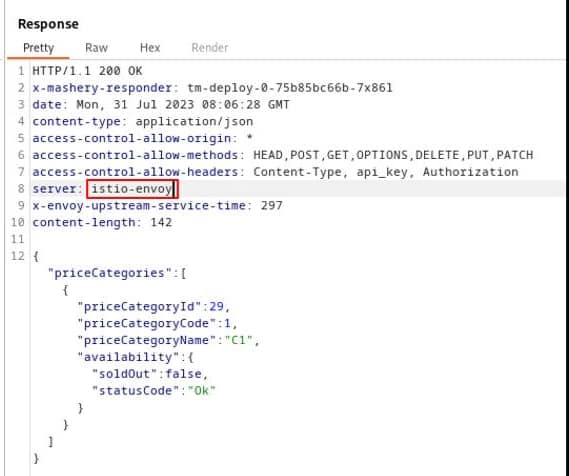

kubectl debug <pod-name> -n <namespace> --image=<debug-container-image>
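When no name is given, kubectl debug generates one for the ephemeral container. A possible refinement, sketched below, is to name it explicitly with `--container` so that you can reattach to it later with kubectl attach. The function names, the container name `debugger`, and the `KUBECTL` override are assumptions made for this example; reattaching only works while the debug container is still running.

```shell
# Start an interactive ephemeral container with a fixed, known name.
# Arguments: <pod-name> <namespace> <debug-image>
# Setting KUBECTL=echo prints the command instead of running it.
start_debugger() {
  pod="$1"
  namespace="$2"
  image="$3"
  "${KUBECTL:-kubectl}" debug "$pod" -n "$namespace" \
    --image="$image" --container=debugger -it
}

# Reattach to the ephemeral container started by start_debugger.
# Arguments: <pod-name> <namespace>
reattach_debugger() {
  pod="$1"
  namespace="$2"
  "${KUBECTL:-kubectl}" attach "$pod" -n "$namespace" -c debugger -it
}
```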

You can go even further: instead of adding the ephemeral container to the running pod, you can debug a copy of the pod, as you can see in the following command:

kubectl debug myapp -it --image=ubuntu --share-processes --copy-to=myapp-debug

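Finally, once you are done, you can remove the debug copy of the pod using a kubectl delete command, and that’s it. Keep in mind that an ephemeral container added directly to a running pod cannot be removed afterwards; it stays in the pod’s spec until the pod itself is deleted.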
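The copy-based workflow and its cleanup can be combined into one step. This is a minimal sketch, assuming the copy is named `<pod-name>-debug` as in the command above and that both pods live in the current namespace; the function name and the `KUBECTL` override are hypothetical.

```shell
# Debug a copy of a pod, then delete the copy when the session ends.
# Arguments: <pod-name> [debug-image] (image defaults to "ubuntu").
# Setting KUBECTL=echo prints the commands instead of running them.
debug_copy_and_cleanup() {
  pod="$1"
  image="${2:-ubuntu}"
  copy="${pod}-debug"
  # Create the copy with an extra debug container and open a session in it.
  "${KUBECTL:-kubectl}" debug "$pod" -it --image="$image" \
    --share-processes --copy-to="$copy"
  # Remove the copy once the interactive session has finished.
  "${KUBECTL:-kubectl}" delete pod "$copy"
}
```

Because only the copy is deleted, the original pod keeps running untouched throughout.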

It’s important to note that all these actions require direct access to the cluster. They also temporarily create a mismatch with your “infrastructure-as-code” deployment, because we are manipulating the runtime state directly. Hence, this approach is more challenging to apply if you follow GitOps practices or use tools such as Rancher Fleet or Argo CD.

Conclusion

Ephemeral Containers, a stable feature since the Kubernetes 1.25 release, offer impressive capabilities for debugging and diagnosing issues within your Kubernetes pods. By allowing you to inject temporary containers into running pods dynamically, they empower you to troubleshoot problems, collect logs, recover data, and optimize performance without disrupting your application’s core functionality. As Kubernetes continues to evolve, the addition of features like Ephemeral Containers demonstrates its commitment to providing developers with tools to simplify the management and maintenance of containerized applications. So, the next time you encounter a stubborn issue within your Kubernetes environment, remember that Ephemeral Containers might be the debugging superhero you need!

For more detailed information and usage examples, check out the Kubernetes official documentation on Ephemeral Containers. Happy debugging!
