Improve the performance and productivity of your DevSecOps pipeline using containers.

CICD Docker is the approach many companies use to bring containers into the build and pre-deployment phases as well, so that part of the CICD pipeline itself runs in containers. Let’s see why.

DevSecOps is the new normal for deployments at scale in large enterprises that need to keep up with the pace of digital business. These processes are coordinated by a CICD orchestration tool that acts as the brain of the pipeline. Common tools for this job are Jenkins, Bamboo, Azure DevOps, GitLab CI/CD, and GitHub Actions.

In the traditional approach, different worker servers handle the stages of the DevOps process (Code, Build, Test, Deploy), and each stage needs its own tools and utilities. For example, to get the code, we need git installed. To do the build, we can rely on Maven or Gradle, and to test and analyze the code, we can use tools such as SonarQube.

So, in the end, we need a whole set of tools for the pipeline to run successfully, and those tools have to be installed and managed. With the rise of cloud-native development and containers across the industry, this is also changing the way pipelines are built: containers become part of the stages themselves.

Most CI orchestrators let you define a container image to run any step of your DevSecOps process. Doing so is a great idea, because it brings several benefits you should be aware of.
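To make the idea concrete, here is a minimal Python sketch of what "a container image per step" amounts to under the hood: each stage names an image and a command, and the worker only needs to run `docker run`. The `Stage` class, the `docker_run_args` helper, the `/workspace` mount path, and the image tag are assumptions chosen for illustration, not something a particular orchestrator prescribes.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Stage:
    """One pipeline stage that runs entirely inside a container."""
    name: str
    image: str          # image that ships the tool (illustrative value)
    command: List[str]  # command executed inside the container


def docker_run_args(stage: Stage, workspace: str) -> List[str]:
    """Build the `docker run` argument list for a stage.

    The checked-out code is mounted at /workspace (a path chosen for this
    sketch) so the tool inside the image can read and write it.
    """
    return [
        "docker", "run", "--rm",
        "-v", f"{workspace}:/workspace",
        "-w", "/workspace",
        stage.image,
        *stage.command,
    ]


# Hypothetical pipeline: the image name and commands are examples only.
PIPELINE = [
    Stage("build", "maven:3.9-eclipse-temurin-17", ["mvn", "-B", "package"]),
    Stage("test", "maven:3.9-eclipse-temurin-17", ["mvn", "-B", "verify"]),
]

if __name__ == "__main__":
    for stage in PIPELINE:
        print(stage.name, "->", " ".join(docker_run_args(stage, "/tmp/checkout")))
```

Each stage could point to a completely different image. The worker itself never needs Maven, Gradle, or any other build tool installed.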

1.- Much more scalable solution

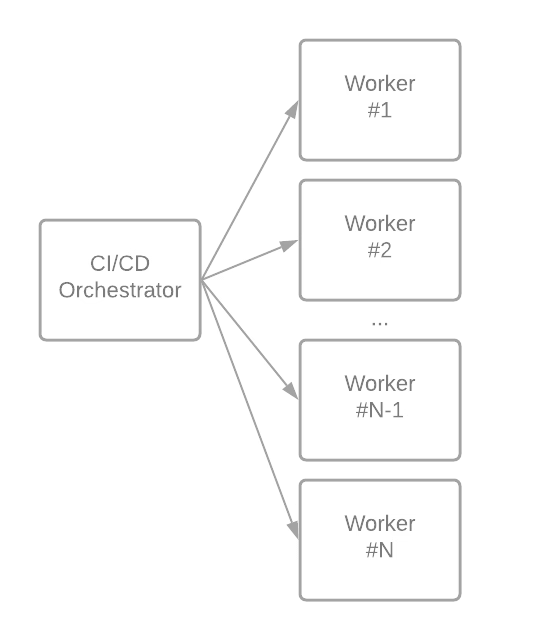

One of the problems with using a single orchestrator as the central element in your company is that it has to serve many different technologies: open source or proprietary, code-based or visual development, and so on. That means you need to manage many tools and install their software on the workers.

Usually, you dedicate specific workers to building specific kinds of artifacts, as in the image shown below:

That is great because it segments the build process and does not require every tool to be installed on every machine, which matters when some of those tools are incompatible with each other.

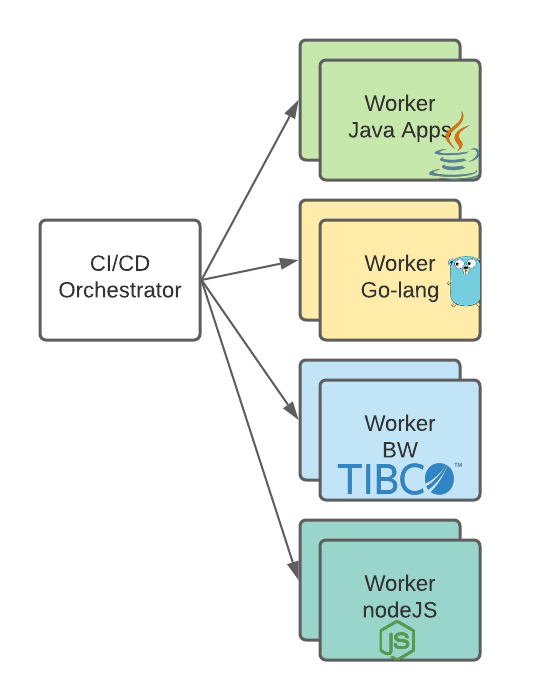

But what happens if we need to deploy many applications of one of the types shown in the picture above, such as TIBCO BusinessWorks applications? You will be limited by the number of workers that have the software installed to build and deploy them.

With a container-based approach, every worker is available, because no build software has to be installed on it beyond a container runtime. The worker just pulls the Docker image, and that is it. You are limited only by the infrastructure you use, and if you run the build process on a cloud platform that can add capacity on demand, that limitation largely disappears. Your time to market and your deployment pace both improve.
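The capacity difference can be sketched with a few lines of Python. The worker inventory below is hypothetical, and the sketch assumes every worker has a container runtime and can pull the required image.

```python
from typing import Dict, List, Set

# Hypothetical inventory: worker name -> build tools installed on it.
WORKERS: Dict[str, Set[str]] = {
    "worker-1": {"maven"},
    "worker-2": {"maven", "gradle"},
    "worker-3": {"businessworks"},  # the only worker with this tool installed
    "worker-4": {"nodejs"},
}


def eligible_traditional(tool: str, workers: Dict[str, Set[str]]) -> List[str]:
    """Workers that can take a job when the tool must be preinstalled."""
    return sorted(name for name, tools in workers.items() if tool in tools)


def eligible_containerized(workers: Dict[str, Set[str]]) -> List[str]:
    """Workers that can take a job when the tool ships inside the image.

    Assumes each worker has a container runtime and registry access.
    """
    return sorted(workers)


if __name__ == "__main__":
    print("traditional:", eligible_traditional("businessworks", WORKERS))
    print("containerized:", eligible_containerized(WORKERS))
```

In the traditional model, a burst of BusinessWorks builds queues up behind a single worker. In the containerized model, the same burst can be spread over all four.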

2.- Easy to maintain and extend

Workers no longer need to be installed and managed by hand: they are spun up when you need them and deleted when you do not, and all you have to do is create a container image that does the job. As a result, the time and effort your teams spend maintaining and extending the solution drops considerably.

You also remove the separate upgrade process for the components involved in each step, because they follow the usual container image lifecycle: upgrading a tool means switching to a new image version.

3.- Avoid Orchestrator lock-in

Because the containers do most of the job, moving from one DevOps solution to another takes little work. That gives us the freedom to decide at any moment whether the solution we are using is the best one for our use case and context, or whether we should move to a better-suited one, without having to justify a big investment to make the switch.

You get the control back, and you can even adopt a multi-orchestrator approach if needed: use the best solution for each use case and get the benefits of each at the same time, without having to fight against any of them.
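As a rough illustration of why the move is cheap, the Python sketch below keeps one orchestrator-neutral step definition (image plus script lines) and renders it into the job structure of two orchestrators. The helper names and the example step are hypothetical. The output is only a fragment: a complete GitHub Actions workflow, for instance, also needs an `on:` trigger and usually a checkout step.

```python
from typing import Any, Dict, List


def to_gitlab_job(name: str, image: str, script: List[str]) -> Dict[str, Any]:
    """Render a step as a GitLab CI/CD job (`image` and `script` keys)."""
    return {name: {"image": image, "script": list(script)}}


def to_github_job(name: str, image: str, script: List[str]) -> Dict[str, Any]:
    """Render a step as a GitHub Actions job that runs in a container."""
    return {
        "jobs": {
            name: {
                "runs-on": "ubuntu-latest",
                "container": {"image": image},
                "steps": [{"run": line} for line in script],
            }
        }
    }


# Hypothetical step: the same image and commands feed both renderers.
STEP = ("build", "maven:3.9-eclipse-temurin-17", ["mvn -B package"])

if __name__ == "__main__":
    print(to_gitlab_job(*STEP))
    print(to_github_job(*STEP))
```

The part that carries the real work (the image and the commands run inside it) is identical in both outputs. Only the thin wrapper around it changes per orchestrator.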

Summary

All the benefits we know from cloud-native development and containers apply not only to application development but also to the other processes in our organization, and your DevSecOps pipeline is one of them. Start that journey today to get those advantages in your build process, and do not wait until it is too late. Enjoy your day. Enjoy your life.

📚 Want to dive deeper into Kubernetes? This article is part of our comprehensive Kubernetes Architecture Patterns guide, where you’ll find all fundamental and advanced concepts explained step by step.