KEDA provides a rich environment for scaling your applications beyond the traditional HPA approach based on CPU and memory.

Autoscaling is one of the great advantages of cloud-native environments and helps us make optimal use of our resources. Kubernetes provides several options for it, one of which is the Horizontal Pod Autoscaler (HPA).

HPA is the mechanism Kubernetes uses to detect whether a workload needs more or fewer pods, and it is based on metrics such as CPU or memory usage.

https://kubernetes.io/docs/tasks/run-application/horizontal-pod-autoscale/
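To make the HPA behaviour concrete, the Kubernetes documentation describes the replica calculation as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). Below is a minimal shell sketch of that formula, assuming integer metric values and leaving out the tolerance band and the min/max replica bounds that the real controller also applies. The function name `desired_replicas` is made up for illustration.

```shell
#!/usr/bin/env bash
# Sketch of the HPA replica formula from the Kubernetes documentation:
#   desired = ceil(current_replicas * current_metric / target_metric)
# desired_replicas is a hypothetical helper; the real controller also applies
# a tolerance band and min/max replica limits, which are omitted here.
desired_replicas() {
  local current_replicas=$1 current_metric=$2 target_metric=$3
  # Integer ceiling division: (a + b - 1) / b
  echo $(( (current_replicas * current_metric + target_metric - 1) / target_metric ))
}

# Two replicas averaging 150 against a target of 100 -> prints 3
desired_replicas 2 150 100
```

With a CPU target of 50% and two pods averaging 75%, the same arithmetic also yields three replicas.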

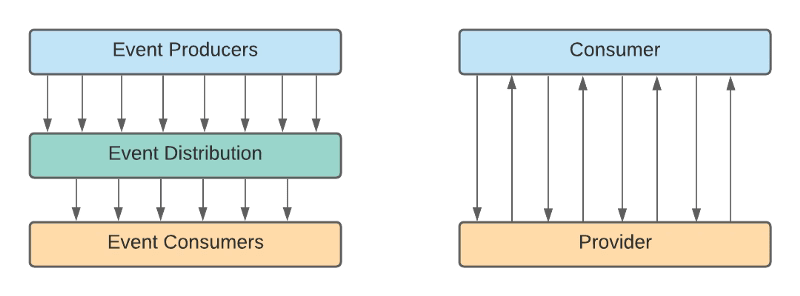

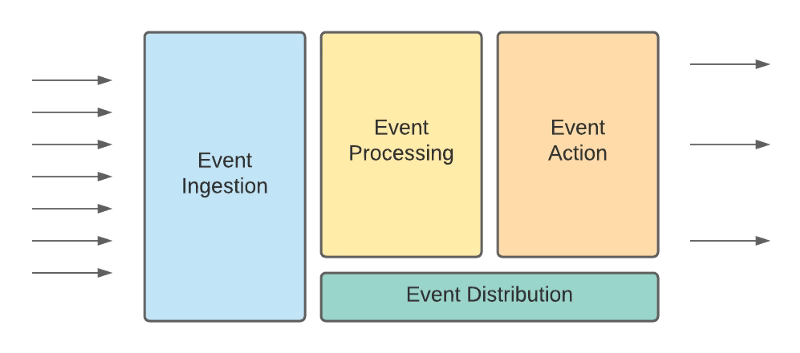

Sometimes those metrics are not enough to decide whether the number of available replicas is sufficient. Other metrics, such as the number of requests or the number of pending events, can provide a better perspective.

Kubernetes Event-Driven Autoscaling (KEDA)

This is where KEDA comes in. KEDA stands for Kubernetes Event-Driven Autoscaling and provides a more flexible approach to scaling our pods inside a Kubernetes cluster.

It is based on scalers, each of which connects to a different source to measure the number of requests or events we receive. Sources include messaging systems such as Apache Kafka, AWS Kinesis, and Azure Event Hubs, as well as other systems such as InfluxDB or Prometheus.

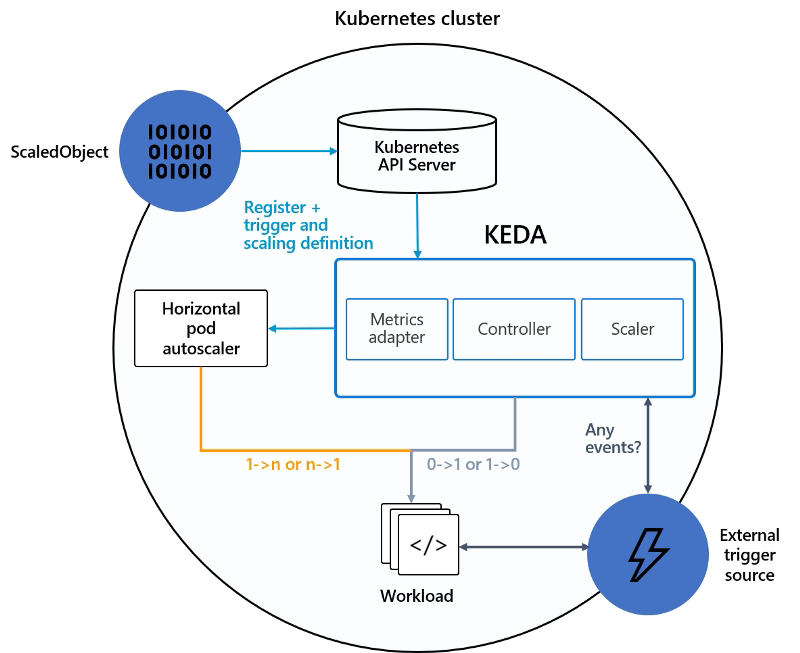

KEDA works as follows:

We define a ScaledObject that links our external event source (e.g., Apache Kafka or Prometheus) to the Kubernetes Deployment we would like to scale, and we register it in the Kubernetes cluster.

KEDA monitors the external source and, based on the metrics gathered, communicates with the Horizontal Pod Autoscaler to scale the workload as defined.

Testing the Approach with a Use-Case

Now that we know how it works, we will run some tests to see it live and show how quickly we can scale one of our applications using this technology. The first thing we need to do is define our scenario.

In our case, the scenario is a simple cloud-native application developed with Flogo that exposes a REST service.

The first step is to deploy KEDA in our Kubernetes cluster, and there are several options to do that: Helm charts, Operator Hub, or YAML files. In this case, we are going to use the Helm charts approach.

We run the following commands to add the Helm repository, update the available charts, and then deploy KEDA as part of our cluster configuration:

helm repo add kedacore https://kedacore.github.io/charts

helm repo update

helm install keda kedacore/keda

After running these commands, KEDA is deployed in our Kubernetes cluster, and running kubectl get all shows a situation similar to this one:

pod/keda-operator-66db4bc7bb-nttpz 2/2 Running 1 10m

pod/keda-operator-metrics-apiserver-5945c57f94-dhxth 2/2 Running 1 10m

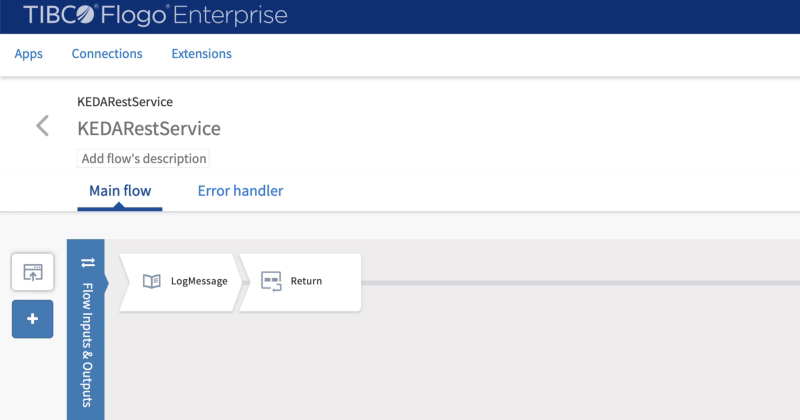

Now, we are going to deploy our application. As mentioned, we will use our Flogo application, and the flow is as simple as this:

- The application exposes a REST service using /hello as the resource.

- Each received request is printed to standard output, and a message is returned to the requester.

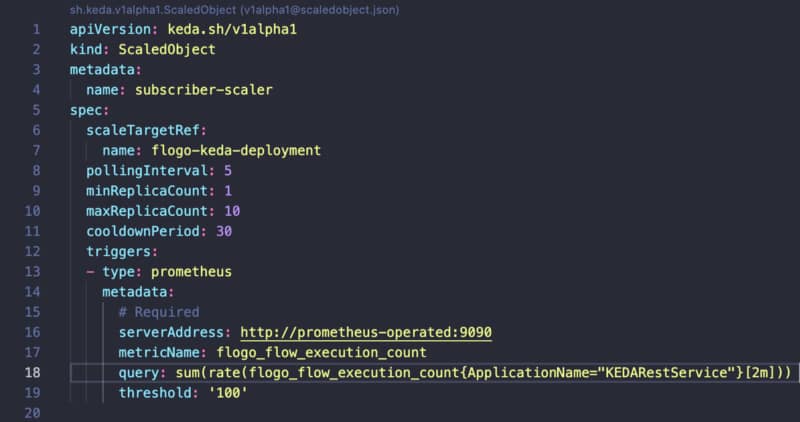

Once we have our application deployed on our Kubernetes cluster, we need to create a ScaledObject that is responsible for managing the scalability of that component.

We use Prometheus as a trigger, so we need to configure where our Prometheus server is hosted and which query we want to run to manage the scalability of our component.

In our sample, we use flogo_flow_execution_count, the metric that counts the requests received by this component. When its rate exceeds 100, a new replica is launched.
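As a rough sketch, a ScaledObject for this scenario could look like the manifest below, written to a file from the shell. The Deployment name (`flogo-app`), the Prometheus address, the replica bounds, and the exact shape of the PromQL query are assumptions for illustration and are not taken from the original setup. Only the metric name and the threshold of 100 come from the text above. Some older KEDA releases may also expect a `metricName` entry in the trigger metadata.

```shell
#!/usr/bin/env bash
# Write a sketch of the ScaledObject manifest to scaledobject.yaml.
# Assumed placeholders: flogo-app, the Prometheus URL, and the replica bounds.
cat > scaledobject.yaml <<'EOF'
apiVersion: keda.sh/v1alpha1
kind: ScaledObject
metadata:
  name: flogo-app-scaledobject
spec:
  scaleTargetRef:
    name: flogo-app            # assumed name of the Deployment to scale
  minReplicaCount: 1           # assumed lower bound
  maxReplicaCount: 5           # assumed upper bound
  triggers:
    - type: prometheus
      metadata:
        # assumed in-cluster address of the Prometheus server
        serverAddress: http://prometheus-server.default.svc.cluster.local
        # per-second request rate over the last minute (assumed query shape)
        query: sum(rate(flogo_flow_execution_count[1m]))
        threshold: "100"
EOF
echo "wrote scaledobject.yaml"
```

Once the placeholders match your cluster, the manifest can be applied with `kubectl apply -f scaledobject.yaml`, and `kubectl get hpa` should then list the HPA that KEDA manages for it.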

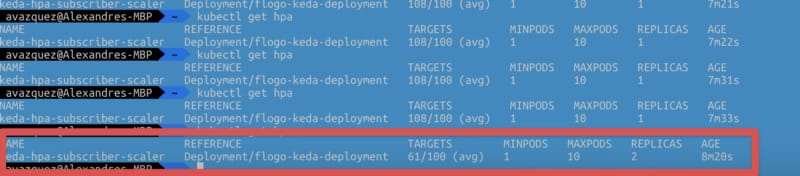

After hitting the service with a load test, we can see that as soon as the service reaches the threshold, a new replica is launched to start handling requests, as expected.
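A load test of this kind can be approximated with a plain shell loop around curl. The sketch below is an assumption about how such a test might look, not the tool used for this post. `send_requests` is a hypothetical helper, and the URL must point at wherever the /hello resource is reachable from your machine.

```shell
#!/usr/bin/env bash
# send_requests URL COUNT: issue COUNT sequential GET requests and print the
# HTTP status code of each one. Hypothetical helper for illustration only.
send_requests() {
  local url=$1 count=$2 i=0
  while [ "$i" -lt "$count" ]; do
    # -s: silent, -o /dev/null: discard the body, -w: print only the status code
    curl -s -o /dev/null -w '%{http_code}\n' "$url"
    i=$((i + 1))
  done
}
```

For example, `send_requests http://localhost:8080/hello 1000` sends a thousand requests (the host and port are placeholders). Sequential requests may not be enough to cross the threshold, so several loops can be started in parallel with `&`, while `kubectl get pods -w` in another terminal shows replicas appearing.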

All of the code and resources are hosted in a GitHub repository.

Summary

This post has shown that we have many options for scaling our workloads. We can use standard metrics like CPU and memory, but if we need to go beyond that, we can use different external sources of information to trigger autoscaling.
