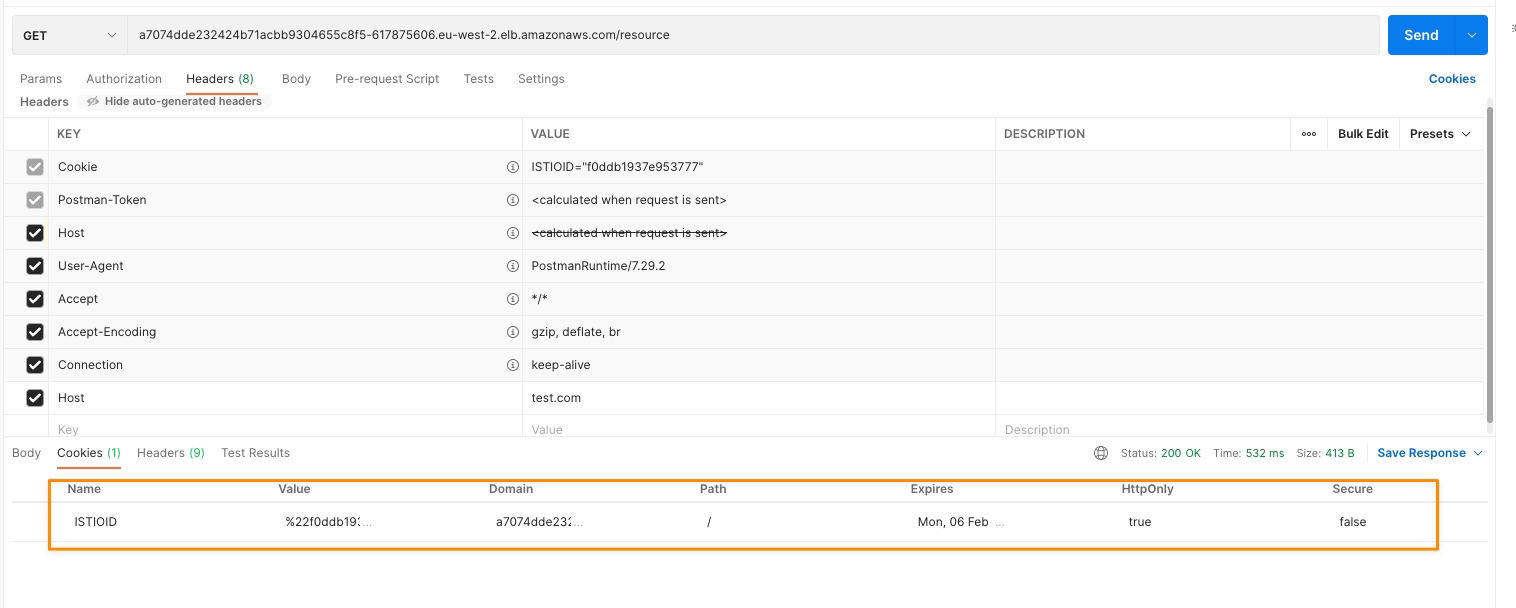

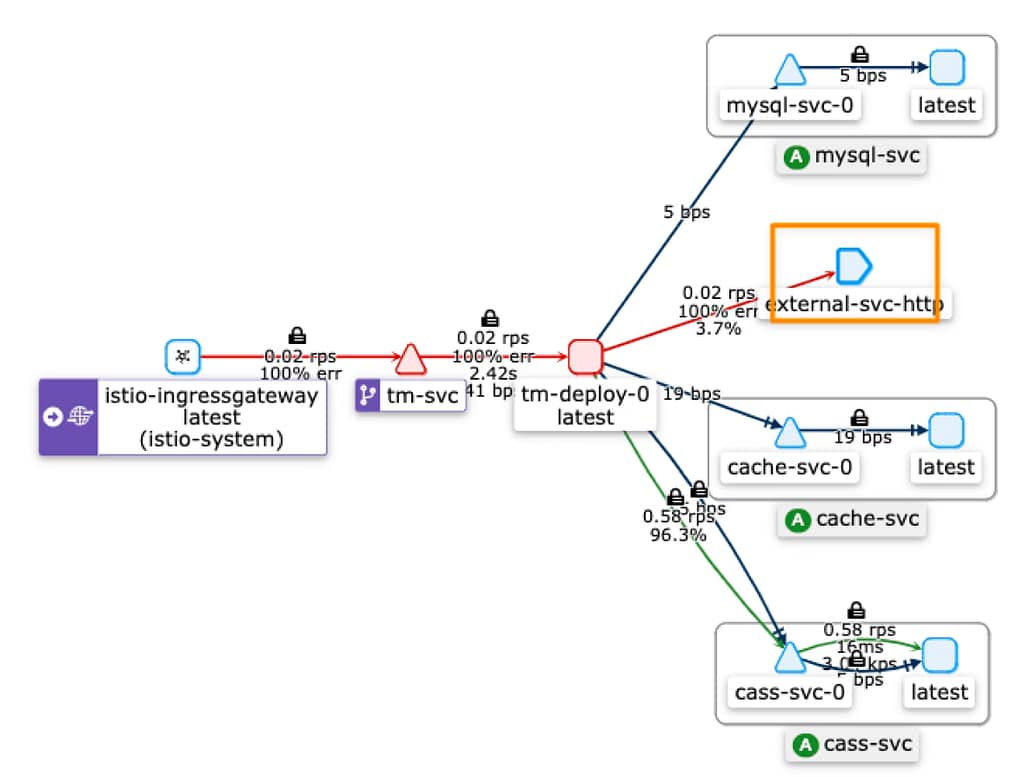

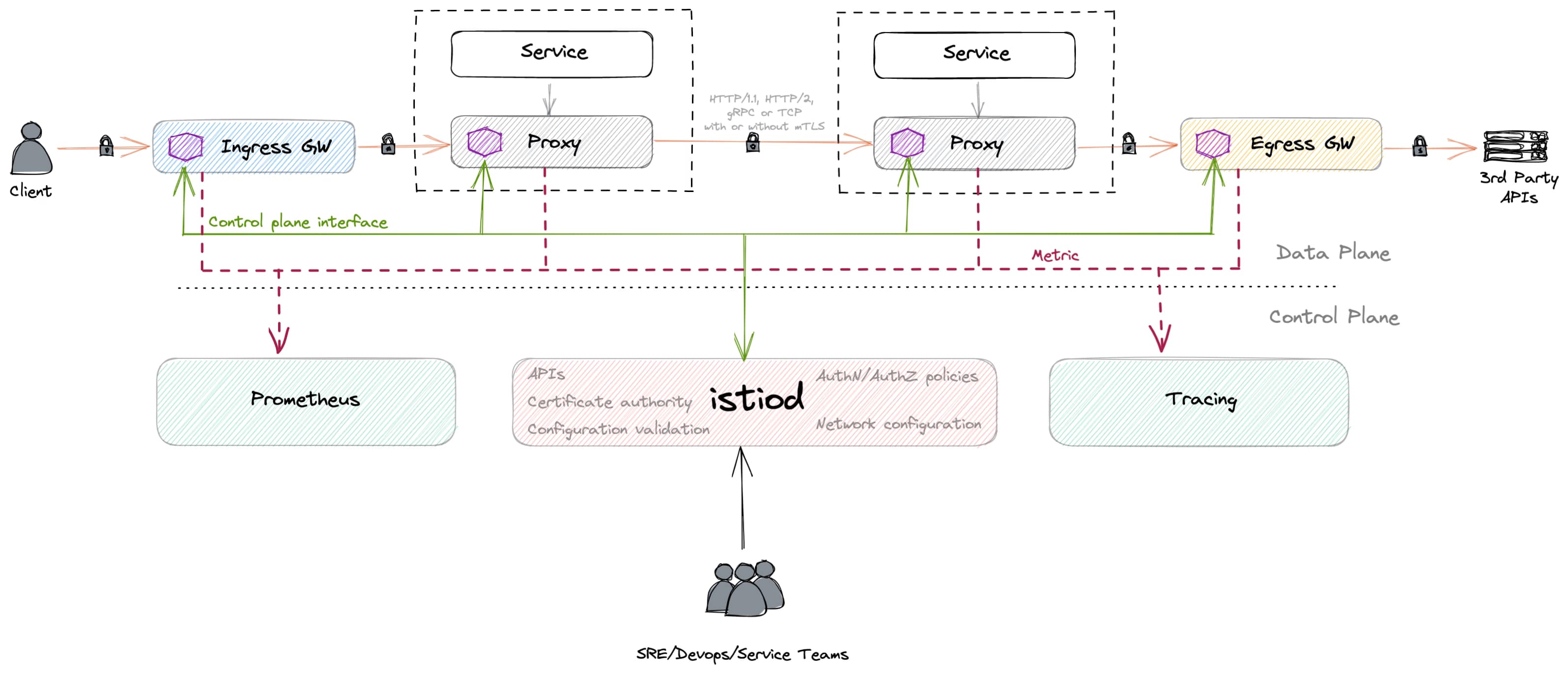

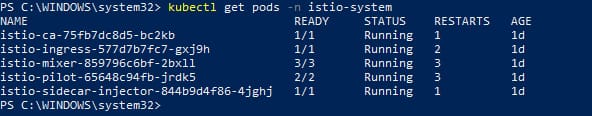

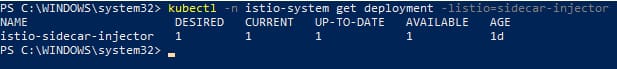

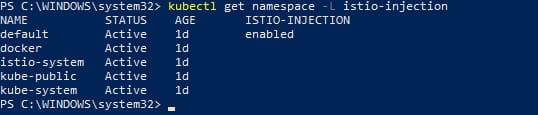

Istio has become an essential tool for managing HTTP traffic within Kubernetes clusters, offering advanced features such as canary deployments, mTLS, and end-to-end visibility. However, some tasks, like exposing a TCP port through the Istio IngressGateway, can be challenging if you've never done them before. This article walks through exposing TCP ports with the Istio IngressGateway, with practical examples and use cases.

Understanding the Context

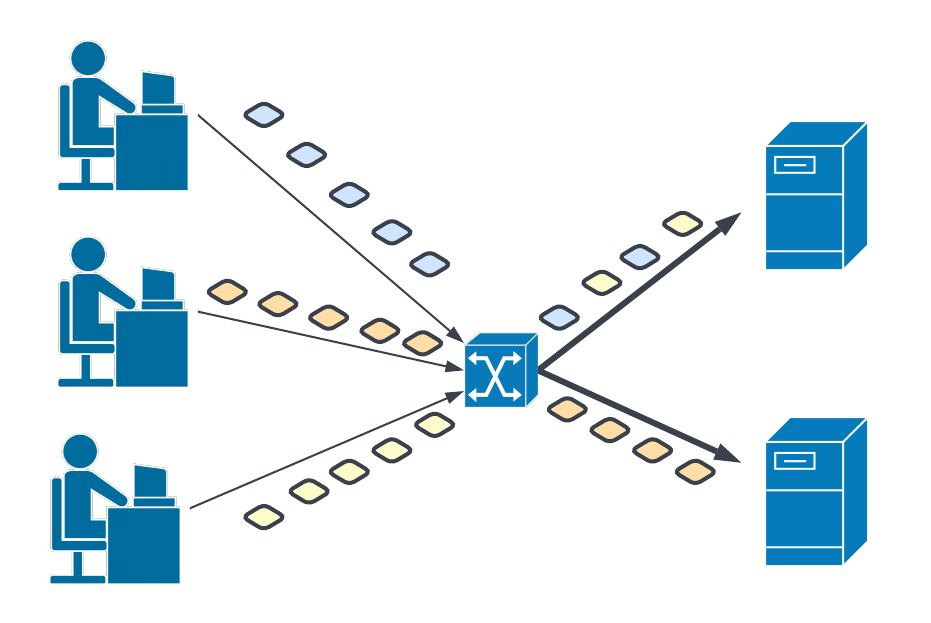

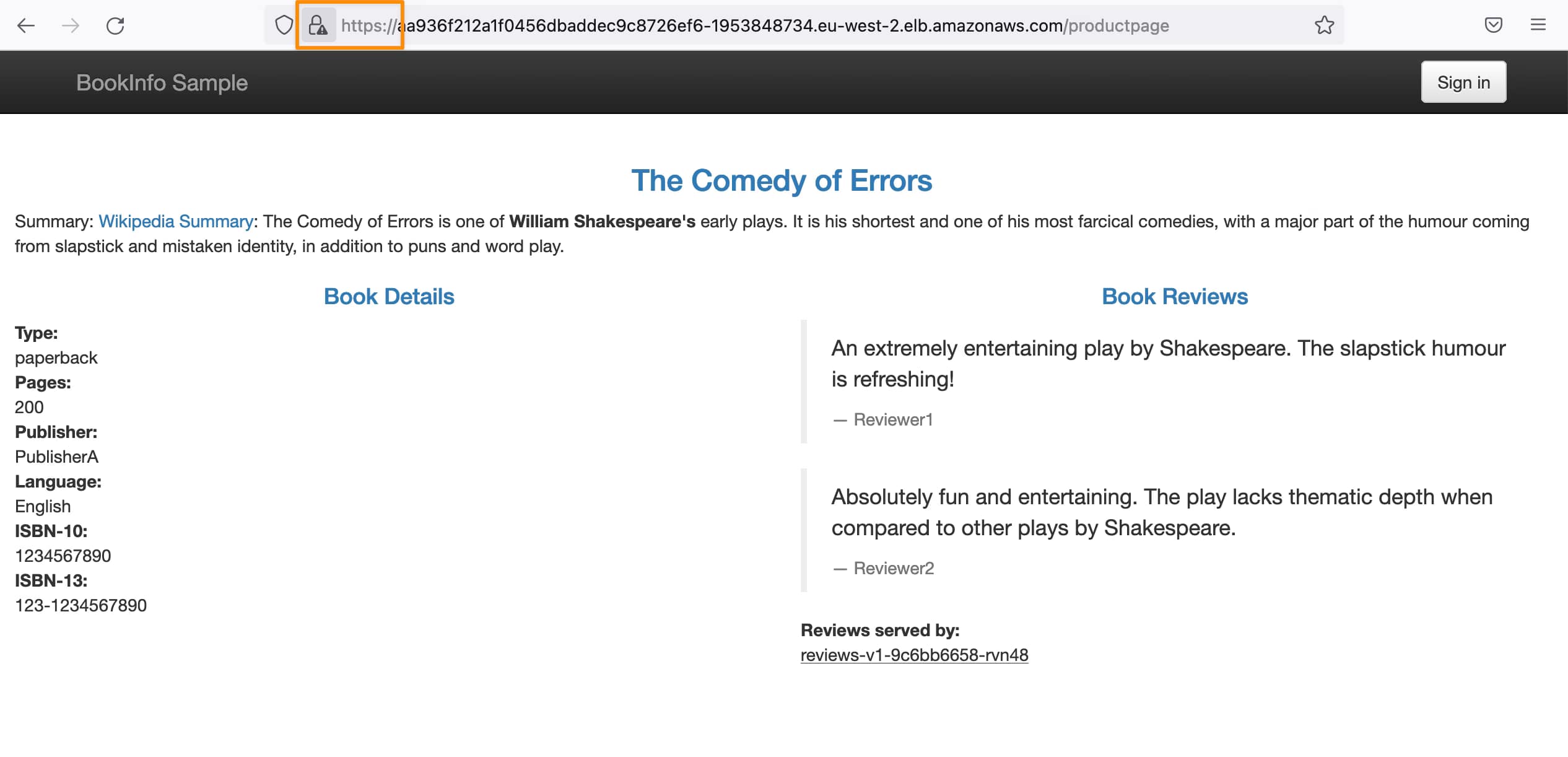

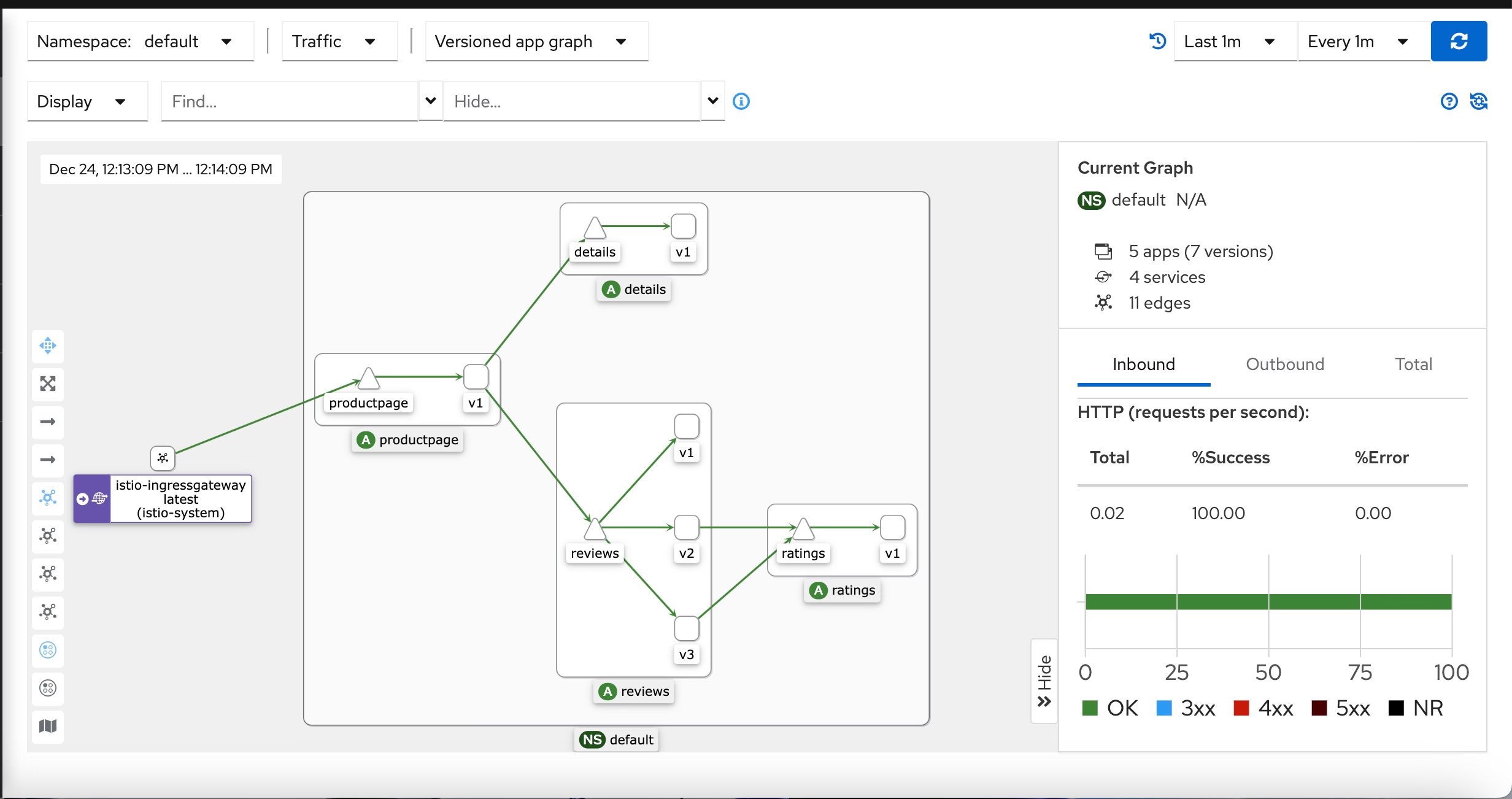

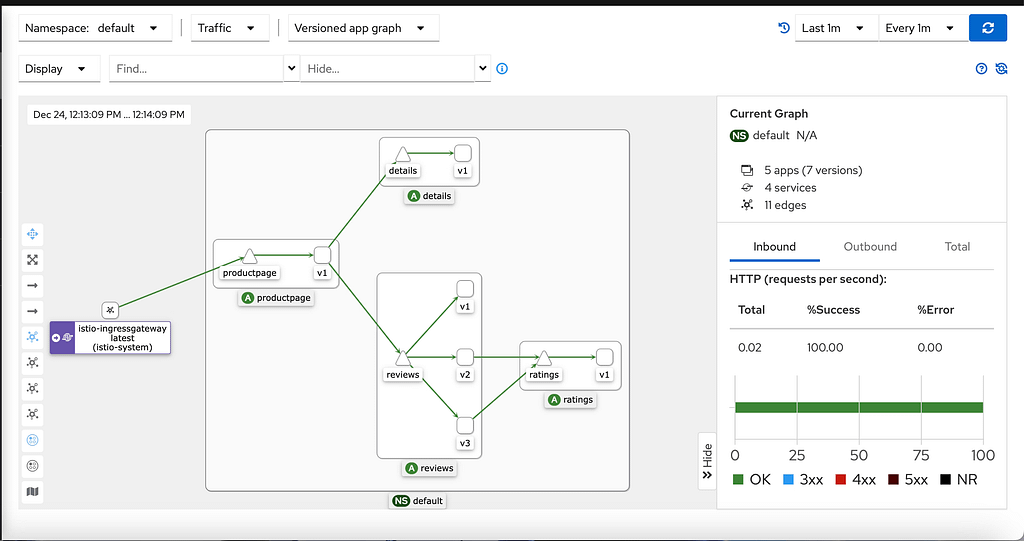

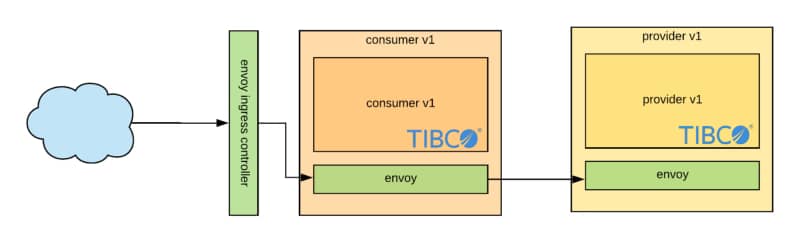

Istio is often used to manage HTTP traffic in Kubernetes, providing powerful capabilities such as traffic management, security, and observability. The Istio IngressGateway serves as the entry point for external traffic into the Kubernetes cluster, typically handling HTTP and HTTPS traffic. However, Istio also supports TCP traffic, which is necessary for use cases like exposing databases or other non-HTTP services running in the cluster to external consumers.

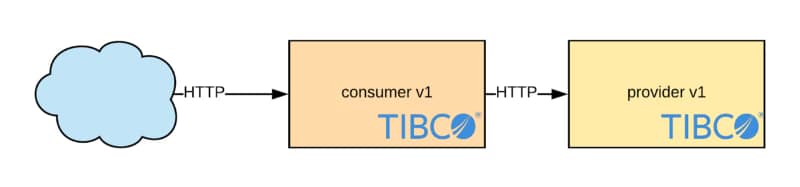

Exposing a TCP port through Istio involves three pieces: opening the port on the IngressGateway Service, declaring a TCP listener in a Gateway resource, and routing the traffic to the backend Service with a VirtualService. This setup is particularly useful when you need to make services like TIBCO EMS or databases running in Kubernetes reachable by other internal or external applications.

Steps to Expose a TCP Port with Istio IngressGateway

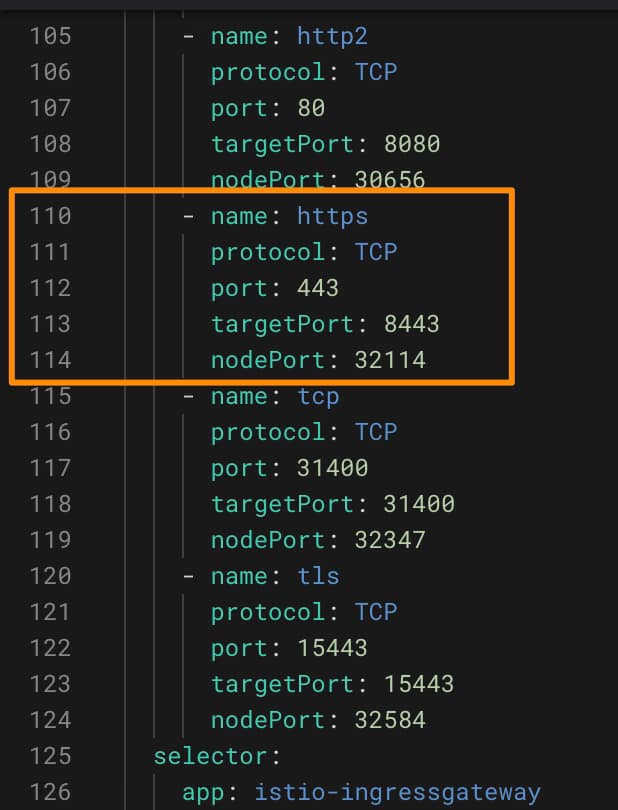

1.- Modify the Istio IngressGateway Service:

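Before configuring the Gateway, make sure the Istio IngressGateway Service listens on the new TCP port. A Gateway resource only configures the Envoy proxy inside the gateway pods; traffic can reach that listener only if the Kubernetes Service in front of those pods also exposes the port. If the Service is of type LoadBalancer, adding the port makes the cloud load balancer expose it too; if it is of type NodePort, Kubernetes allocates a node port, and any external load balancer or firewall in front of the nodes must be configured to forward traffic to it.

The excerpt below shows only the ports section. Add the new entry to the existing Service and keep the entries already present (for example by exporting it with `kubectl -n istio-system get service istio-ingressgateway -o yaml > istio-ingressgateway-service.yaml` and editing that file) rather than replacing the Service with this fragment.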

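```yaml
# Excerpt of the existing istio-ingressgateway Service: only the ports are shown.
# Keep every entry your installation already defines and append the tcp one.
apiVersion: v1
kind: Service
metadata:
  name: istio-ingressgateway
  namespace: istio-system
spec:
  ports:
  - name: http2
    port: 80
    targetPort: 8080   # recent Istio releases run the gateway on 8080/8443
  - name: https
    port: 443
    targetPort: 8443
  - name: tcp          # new entry for TCP traffic
    port: 31400
    targetPort: 31400
    protocol: TCP
```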
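Editing the live Service works, but the change may be lost when Istio is reinstalled or upgraded. If Istio was installed with istioctl, one option is to declare the port in the IstioOperator configuration instead. The sketch below assumes the default gateway name `istio-ingressgateway` and should be merged into the IstioOperator you originally installed with. Setting `k8s.service.ports` replaces the default port list rather than extending it, so the existing ports are repeated here.

```yaml
# Sketch only: merge into the IstioOperator used for your original install.
apiVersion: install.istio.io/v1alpha1
kind: IstioOperator
spec:
  components:
    ingressGateways:
    - name: istio-ingressgateway
      enabled: true
      k8s:
        service:
          ports:
          # This list replaces the defaults, so the standard ports are kept.
          - name: status-port
            port: 15021
            targetPort: 15021
          - name: http2
            port: 80
            targetPort: 8080
          - name: https
            port: 443
            targetPort: 8443
          # The additional TCP port.
          - name: tcp
            port: 31400
            targetPort: 31400
```

It could then be applied with `istioctl install -f` followed by the overlay file. Helm-based installations would set the equivalent service ports value of their gateway chart instead.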

2.- Update the Istio IngressGateway service to include the new port 31400 for TCP traffic.

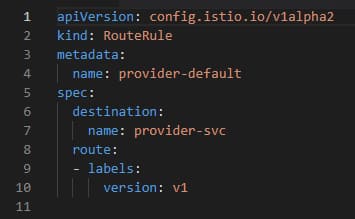

Configure the Istio IngressGateway: After modifying the service, configure the Istio IngressGateway to listen on the desired TCP port.

```yaml
apiVersion: networking.istio.io/v1beta1
kind: Gateway
metadata:
  name: tcp-ingress-gateway
  namespace: istio-system
spec:
  selector:
    istio: ingressgateway   # binds to the default ingress gateway pods
  servers:
  - port:
      number: 31400
      name: tcp
      protocol: TCP
    hosts:
    - "*"
```

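In this example, the IngressGateway is configured to listen on port 31400 for TCP traffic. The selector `istio: ingressgateway` attaches the Gateway to the default ingress gateway pods. Plain TCP carries no hostname to match on, so `"*"` is the usual value for `hosts`.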

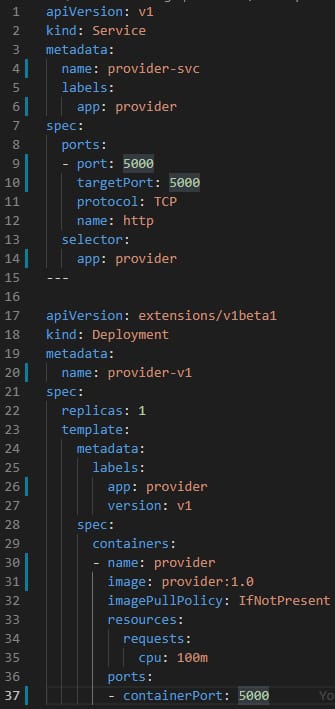

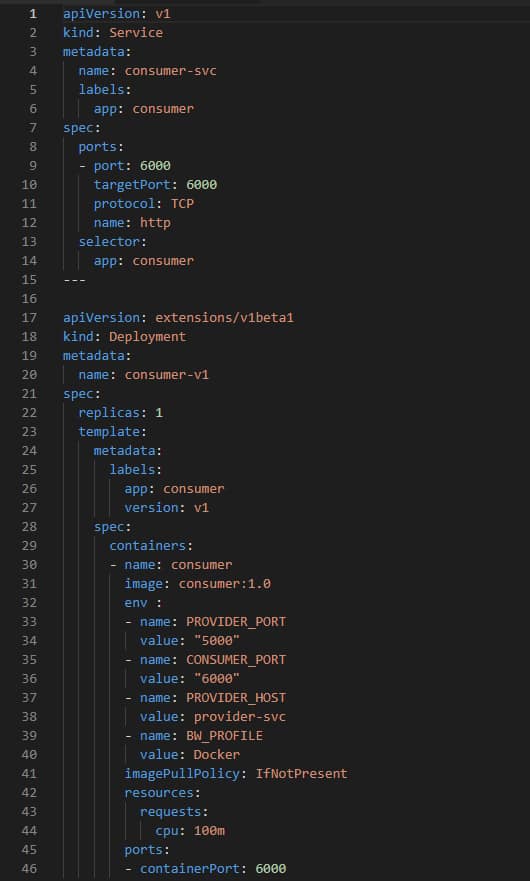

3.- Create a Service and VirtualService:

After configuring the gateway, you need to create a Service that represents the backend application and a VirtualService to route the TCP traffic.

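```yaml
apiVersion: v1
kind: Service
metadata:
  name: tcp-service
  namespace: default
spec:
  selector:
    app: tcp-app
  ports:
  - name: tcp          # the "tcp" name prefix tells Istio to treat this port as raw TCP
    port: 31400        # port the Service exposes inside the cluster
    targetPort: 8080   # port the backend container listens on
    protocol: TCP
```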

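The Service exposes port 31400 inside the cluster and forwards it to port 8080 on the pods labeled `app: tcp-app`. It is independent of the IngressGateway; the two ports only happen to share the same number.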
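The Service assumes that pods labeled `app: tcp-app` already exist. For completeness, a minimal Deployment that would satisfy it is sketched below. The image name is a hypothetical placeholder; any server that accepts TCP connections on port 8080 would do.

```yaml
# Hypothetical backend for tcp-service; replace the placeholder image.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: tcp-app
  namespace: default
spec:
  replicas: 1
  selector:
    matchLabels:
      app: tcp-app           # must match the Service selector
  template:
    metadata:
      labels:
        app: tcp-app
    spec:
      containers:
      - name: tcp-app
        image: registry.example.com/tcp-app:latest   # placeholder (assumption)
        ports:
        - containerPort: 8080   # matches the Service targetPort
          protocol: TCP
```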

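```yaml
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: tcp-virtual-service
  namespace: default
spec:
  hosts:
  - "*"
  gateways:
  - istio-system/tcp-ingress-gateway   # <namespace>/<name>: the Gateway lives in istio-system
  tcp:
  - match:
    - port: 31400          # port on the gateway where the traffic arrived
    route:
    - destination:
        host: tcp-service
        port:
          number: 31400    # the Service port, not the container targetPort
```

The VirtualService routes TCP traffic arriving on port 31400 of the gateway to tcp-service on its Service port 31400, which Kubernetes then forwards to port 8080 on the backend pods. Two details matter here: `destination.port` refers to the Service port, not the container's `targetPort`, and a Gateway in a different namespace must be referenced as `<namespace>/<name>`, otherwise Istio looks for it in the VirtualService's own namespace.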

4.- Apply the Configuration

Apply the above configurations using kubectl to create the necessary Kubernetes resources.

```shell
kubectl apply -f istio-ingressgateway-service.yaml
kubectl apply -f tcp-ingress-gateway.yaml
kubectl apply -f tcp-service.yaml
kubectl apply -f tcp-virtual-service.yaml
```

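After applying these configurations, the Istio IngressGateway exposes port 31400 to external traffic. Clients connect to the gateway's external address on that port; for a LoadBalancer Service, the address appears in the EXTERNAL-IP column of `kubectl -n istio-system get service istio-ingressgateway`.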

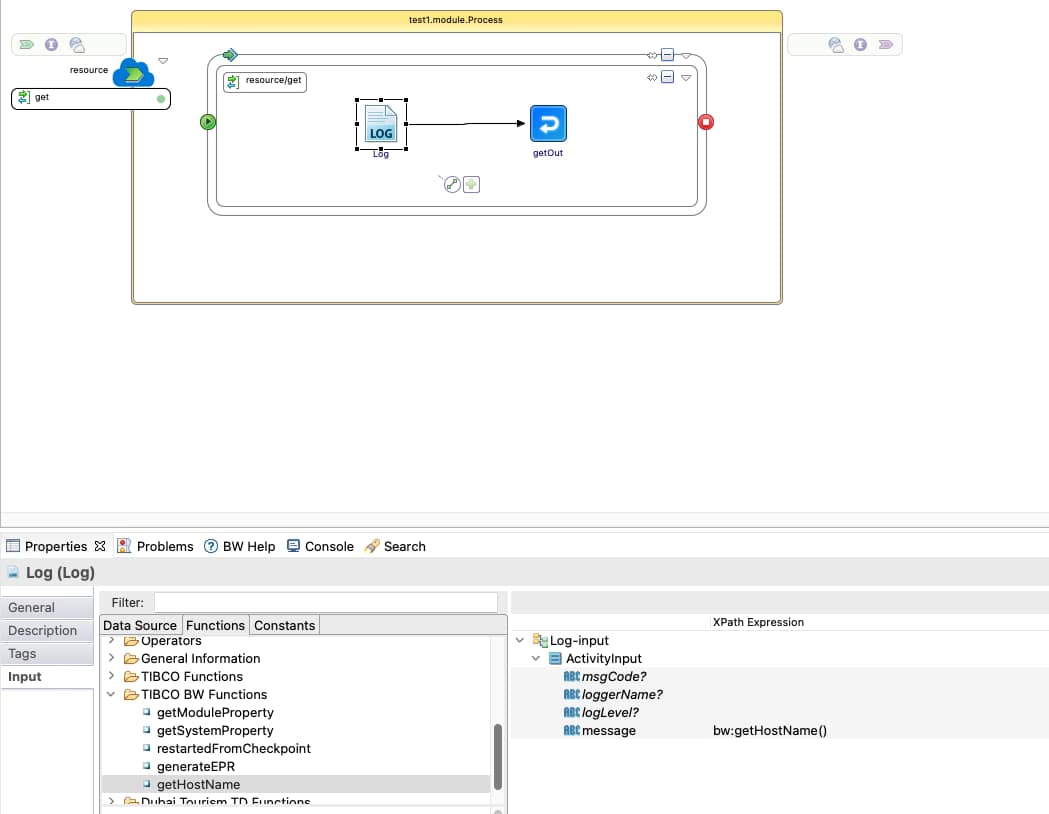

Practical Use Cases

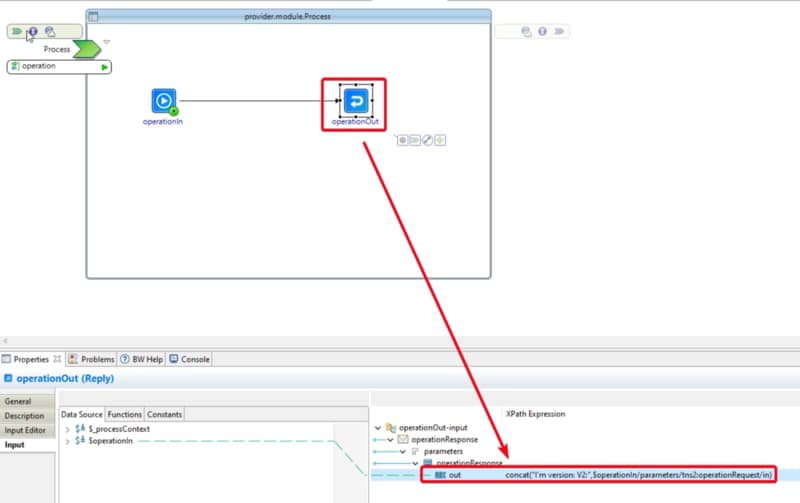

- Exposing TIBCO EMS Server: One common scenario is exposing a TIBCO EMS (Enterprise Message Service) server running within a Kubernetes cluster to other internal applications or external consumers. By configuring the Istio IngressGateway to handle TCP traffic, you can expose EMS's TCP port through a single managed entry point, allowing clients outside the Kubernetes environment to connect to it.

- Exposing Databases: Another use case is exposing a database running within Kubernetes to external services or different clusters. By exposing the database’s TCP port through the Istio IngressGateway, you enable other applications to interact with it, regardless of their location.

- Exposing a Custom TCP-Based Service: Suppose you have a custom application running within Kubernetes that communicates over TCP, such as a game server or a custom TCP-based API service. You can use the Istio IngressGateway to expose this service to external users, making it accessible from outside the cluster.
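As an illustration of the EMS case, the same pattern can be adapted to another port. The sketch below assumes a Service named `ems-server` in a namespace `messaging` (both hypothetical) listening on 7222, the port EMS commonly uses by default. A matching `7222` entry would also need to be added to the istio-ingressgateway Service, as in step 1.

```yaml
# Hypothetical: ems-server and the messaging namespace are placeholders.
apiVersion: networking.istio.io/v1beta1
kind: Gateway
metadata:
  name: ems-gateway
  namespace: istio-system
spec:
  selector:
    istio: ingressgateway
  servers:
  - port:
      number: 7222
      name: tcp-ems
      protocol: TCP
    hosts:
    - "*"
---
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: ems-virtual-service
  namespace: messaging
spec:
  hosts:
  - "*"
  gateways:
  - istio-system/ems-gateway   # Gateway lives in another namespace
  tcp:
  - match:
    - port: 7222               # port on the gateway
    route:
    - destination:
        host: ems-server       # resolved in the messaging namespace
        port:
          number: 7222         # Service port of ems-server (assumption)
```

Databases or custom TCP services follow the same shape: one Service port on the gateway, one Gateway server, and one `tcp` route per exposed port.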

Conclusion

Exposing TCP ports using the Istio IngressGateway can be a powerful technique for managing non-HTTP traffic in your Kubernetes cluster. With the steps outlined in this article, you can confidently expose services like TIBCO EMS, databases, or custom TCP-based applications to external consumers, enhancing the flexibility and connectivity of your applications.