Introduction

In the rapidly evolving software development and deployment landscape, containers have emerged as a revolutionary technology. Offering portability, efficiency, and scalability, they have become the go-to solution for packaging and delivering applications. However, these benefits come with specific security challenges that must be addressed to protect the integrity of your containerized applications, and container platforms provide several security features to help.

One such feature is ReadOnlyRootFilesystem, a setting that can significantly enhance the security posture of your containers.

A ReadOnlyRootFilesystem is precisely what it sounds like: a filesystem that can only be read from, not written to. In containerization, this means the container's root filesystem is mounted read-only, preventing any modifications to it at runtime. Volumes that are explicitly mounted into the container can still be writable.

Advantages of Using ReadOnlyRootFilesystem

- Reduced Attack Surface: One of the fundamental principles of cybersecurity is reducing the attack surface, that is, the potential points of entry for malicious actors. Enforcing a ReadOnlyRootFilesystem prevents an attacker from writing to the container's root filesystem. This simple yet effective measure significantly limits their ability to inject malicious code, tamper with critical files, or install malware.

- Immutable Infrastructure: Immutable infrastructure is a concept where components are never changed once deployed. This approach ensures consistency and repeatability, as any changes are made by deploying a new instance rather than modifying an existing one. By applying a ReadOnlyRootFilesystem, you’re essentially embracing the principles of immutable infrastructure within your containers, making them more resistant to unauthorized modifications.

- Malware Mitigation: Malware often relies on gaining write access to a system to carry out its malicious activities. By employing a ReadOnlyRootFilesystem, you erect a significant barrier against malware attempting to establish persistence or write additional payloads to disk. Even if an attacker manages to compromise a container, their ability to install malicious code on the root filesystem is severely restricted.

- Enhanced Forensics and Auditing: In the unfortunate event of a security breach, having a ReadOnlyRootFilesystem in place can assist in forensic analysis. Since the filesystem remains unaltered, investigators can more accurately trace the attack vector, determine the extent of the breach, and identify the vulnerable entry points.

Implementation Considerations

Implementing a ReadOnlyRootFilesystem in your containerized applications requires a deliberate approach:

- Image Design: Build your container images with the ReadOnlyRootFilesystem concept in mind. Make sure to separate read-only and writable areas of the filesystem. This might involve creating volumes for writable data or using environment variables to customize runtime behavior.

- Runtime Configuration: Containers often require write access for temporary files, logs, or other runtime necessities. Carefully design your application to use designated directories or volumes for these purposes while keeping the critical components read-only.

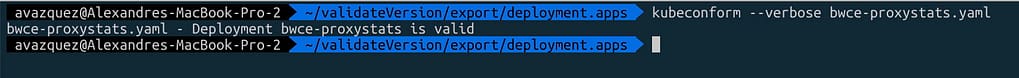

- Testing and Validation: Thoroughly test your containerized application with the ReadOnlyRootFilesystem configuration to ensure it functions as intended. Pay attention to any runtime errors, permission issues, or unexpected behavior that may arise.
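
The image-design and runtime-configuration points above can be sketched as a Pod manifest. This is a minimal illustration, not a definitive setup: the Pod name, container name, image, and the mount paths `/tmp` and `/var/log/app` are assumptions chosen for the example, and your application may need different writable locations.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: readonly-demo                  # hypothetical Pod name
spec:
  containers:
    - name: app                        # hypothetical container name
      image: example.com/my-app:1.0    # placeholder image, replace with your own
      securityContext:
        readOnlyRootFilesystem: true   # root filesystem is mounted read-only
      volumeMounts:
        - name: tmp
          mountPath: /tmp              # writable scratch space (assumed path)
        - name: app-logs
          mountPath: /var/log/app      # writable log directory (assumed path)
  volumes:
    - name: tmp
      emptyDir: {}                     # ephemeral volume, removed with the Pod
    - name: app-logs
      emptyDir: {}
```

Only the two mounted directories accept writes here; everything else in the container stays read-only, which keeps the writable surface small and explicit.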

How to Define a Pod with a ReadOnlyRootFilesystem

To run a Pod with a read-only root filesystem, set readOnlyRootFilesystem to true in the securityContext section of each container, as you can see in the sample below:

    apiVersion: v1
    kind: Pod
    metadata:
      name: <Pod name>
    spec:
      containers:
        - name: <container name>
          image: <image>
          securityContext:
            readOnlyRootFilesystem: true
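
For a quick way to see the setting in action, the sketch below fills in the placeholders with illustrative values. The Pod name, container name, and the choice of the `busybox` image are assumptions made for this example; the command tries to create a file on the root filesystem and prints a message if that write is rejected.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: readonly-check                 # hypothetical Pod name
spec:
  restartPolicy: Never                 # run the check once, do not restart
  containers:
    - name: probe                      # hypothetical container name
      image: busybox                   # assumed small image that ships a shell
      command:
        - sh
        - -c
        # Attempt a write to the root filesystem; report if it is refused.
        - "touch /probe || echo 'write to root filesystem was rejected'"
      securityContext:
        readOnlyRootFilesystem: true
```

With the setting enabled, the write is expected to fail and the message should show up in the container's logs. Switching the value to false should let the same command succeed without printing anything.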

Conclusion

As the adoption of containers continues to surge, so does the importance of robust security measures. Incorporating a ReadOnlyRootFilesystem into your container strategy is a proactive step towards safeguarding your applications and data. By reducing the attack surface, fortifying against malware, and enabling better forensics, you’re enhancing the overall security posture of your containerized environment.

As you embrace immutable infrastructure within your containers, you’ll be better prepared to face the ever-evolving landscape of cybersecurity threats. Remember, when it comes to container security, a ReadOnlyRootFilesystem can be the shield that protects your digital assets from potential harm.

📚 Want to dive deeper into Kubernetes? This article is part of our comprehensive Kubernetes Architecture Patterns guide, where you’ll find all fundamental and advanced concepts explained step by step.