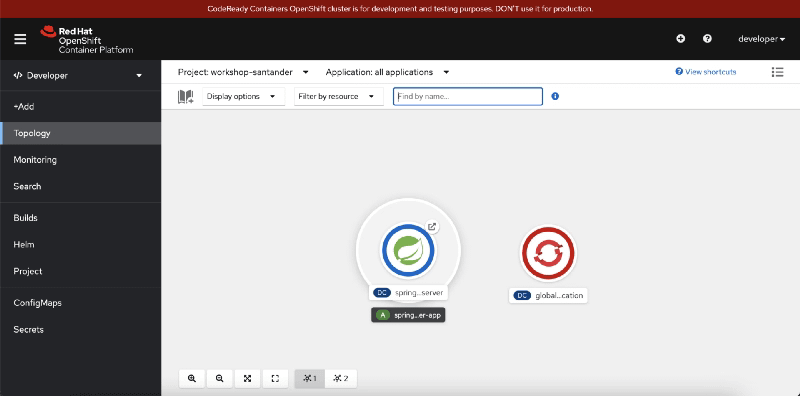

ConfigMaps are among the best-known and, at the same time, least-used objects in the Kubernetes ecosystem. They are one of the core objects that have been there from the beginning, even though we have tried many other ways to implement a configuration management solution (such as Consul, Spring Cloud Config, and others).

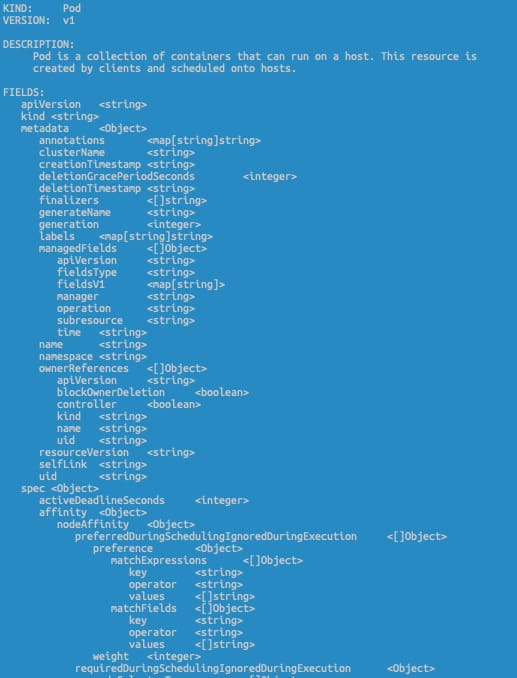

In the words of the official documentation:

A ConfigMap is an API object used to store non-confidential data in key-value pairs.

https://kubernetes.io/docs/concepts/configuration/configmap/

Its motivation was to provide a native configuration management solution for cloud-native deployments: a way to manage and deploy configuration separately from the code. I still remember the days of WAR files with the application.properties file packaged inside them.

A ConfigMap is a simple resource, as you can see in the snippet below:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: game-demo
data:
  player_initial_lives: "3"
  ui_properties_file_name: "user-interface.properties"
```

ConfigMaps are namespaced objects, and a Pod can only reference ConfigMaps in its own namespace. They are closely tied to Deployments and Pods, and they let you use namespaces as separate logical environments where you deploy the same application with environment-specific configuration. Each of those namespaces needs its own ConfigMap, even if all of them are based on the same resource YAML file.
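As a minimal sketch, assuming two hypothetical namespaces called `dev` and `prod`, the `game-demo` ConfigMap can exist once per namespace with environment-specific values. A Pod deployed in each namespace picks up the variant that lives next to it:

```yaml
# Hypothetical "dev" variant of the game-demo ConfigMap
apiVersion: v1
kind: ConfigMap
metadata:
  name: game-demo
  namespace: dev
data:
  player_initial_lives: "10"   # illustrative value: more lives for testing
  ui_properties_file_name: "user-interface.properties"
---
# Hypothetical "prod" variant: same name, different values
apiVersion: v1
kind: ConfigMap
metadata:
  name: game-demo
  namespace: prod
data:
  player_initial_lives: "3"
  ui_properties_file_name: "user-interface.properties"
```

As long as the application manifests only reference `game-demo` by name and do not hardcode a namespace, they can be applied unchanged to either environment.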

From a technical perspective, the content of a ConfigMap is stored in the etcd database, like any other Kubernetes object. Keep in mind that data in etcd is not encrypted at rest by default, so anyone with access to etcd can retrieve it. This is why ConfigMaps are meant for non-confidential data only.

Purposes of ConfigMaps

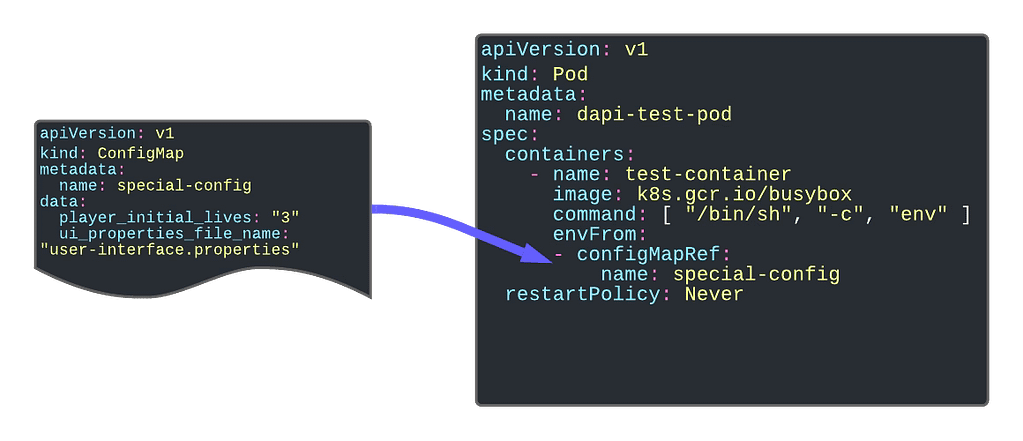

Configuration Parameters

The first and foremost purpose of a ConfigMap is to provide configuration parameters to your workload. It gives you an industrialized way to decouple environment-specific configuration from your application, instead of hardcoding environment variables in every manifest.

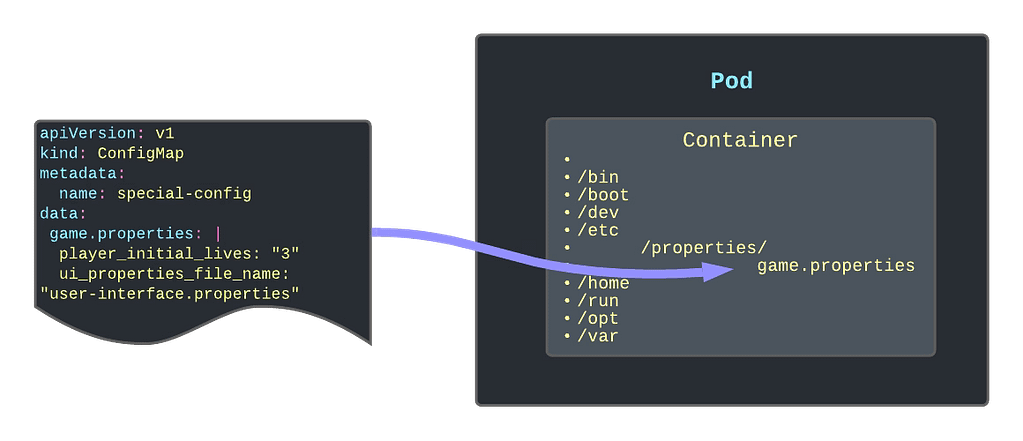

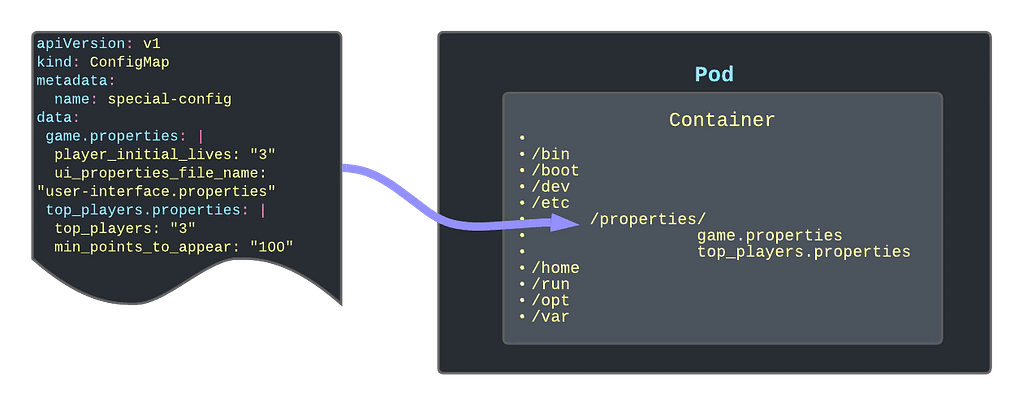
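To make this concrete, here is a sketch of a Pod consuming the `game-demo` ConfigMap from the earlier snippet as environment variables. The Pod name, container name, and image are hypothetical placeholders; `configMapKeyRef` and `envFrom` are the standard Pod spec fields for this:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: game-demo-pod              # hypothetical name
spec:
  containers:
    - name: game
      image: example.com/game:1.0  # hypothetical image
      env:
        # Expose a single key under a custom variable name
        - name: PLAYER_INITIAL_LIVES
          valueFrom:
            configMapKeyRef:
              name: game-demo
              key: player_initial_lives
      # Alternatively, import every key of the ConfigMap as a variable
      # whose name is the key itself (e.g. player_initial_lives)
      envFrom:
        - configMapRef:
            name: game-demo
```

Picking single keys keeps the container's environment explicit, while `envFrom` is handy when the application already expects the ConfigMap keys as variable names.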

Providing Environment Dependent Files

Another significant use is providing or replacing critical configuration files inside your containers. A typical example is supplying the logging configuration for an app that uses the Logback library: you need to provide a logback.xml file so that Logback knows how to apply your logging configuration.

Other examples are properties files that the application expects at a specific location, or even public-key certificates used to restrict SSL connections to safelisted servers only.
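A minimal sketch of the Logback case follows, assuming a hypothetical `app-logging` ConfigMap, a placeholder image, and an application that reads its configuration from `/app/config/logback.xml`. The ConfigMap key holds the whole file, and the Pod mounts just that key as a single file:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: app-logging                # hypothetical name
data:
  logback.xml: |
    <configuration>
      <appender name="STDOUT" class="ch.qos.logback.core.ConsoleAppender">
        <encoder>
          <pattern>%d{HH:mm:ss.SSS} %-5level %logger{36} - %msg%n</pattern>
        </encoder>
      </appender>
      <root level="INFO">
        <appender-ref ref="STDOUT" />
      </root>
    </configuration>
---
apiVersion: v1
kind: Pod
metadata:
  name: app
spec:
  containers:
    - name: app
      image: example.com/app:1.0                # hypothetical image
      volumeMounts:
        - name: logging-config
          mountPath: /app/config/logback.xml    # assumed path, replaces only this file
          subPath: logback.xml                  # key of the ConfigMap to mount
  volumes:
    - name: logging-config
      configMap:
        name: app-logging
```

Using `subPath` places a single file without hiding the rest of the target directory. The application still has to be pointed at that path, for example through Logback's `logback.configurationFile` system property.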

Read-Only Folders

Another option is to mount the ConfigMap as a read-only folder, providing an immutable way to attach information to the container. One use case is Grafana dashboards that you add to your Grafana pod (if you are not using the Grafana Operator).
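As a sketch of the folder approach, the ConfigMap below is mounted as a whole directory, so each key becomes a file inside it. The ConfigMap name, the placeholder dashboard JSON, and the mount path are assumptions; Grafana still needs its own dashboard provisioning configured to load files from that directory:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: grafana-dashboards         # hypothetical name
data:
  # Placeholder dashboard; a real one would be exported from Grafana
  cluster-overview.json: |
    { "title": "Cluster Overview", "panels": [] }
---
apiVersion: v1
kind: Pod
metadata:
  name: grafana
spec:
  containers:
    - name: grafana
      image: grafana/grafana
      volumeMounts:
        - name: dashboards
          mountPath: /var/lib/grafana/dashboards  # assumed path; one file per key
          readOnly: true
  volumes:
    - name: dashboards
      configMap:
        name: grafana-dashboards
```

Adding a dashboard then means adding a key to the ConfigMap rather than rebuilding the Grafana image.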

Different ways to create a ConfigMap

There are several ways to create a ConfigMap imperatively with kubectl, which simplifies its creation. Here are the ones I use the most:

Create a ConfigMap that holds key-value pairs for configuration purposes:

```shell
kubectl create configmap name --from-literal=key=value
```

Create a ConfigMap from a Java-like properties file (one key=value per line), where each line becomes a key-value pair in the ConfigMap:

```shell
kubectl create configmap name --from-env-file=filepath
```

Create a ConfigMap that includes a whole file as part of its content (by default, the file name becomes the key and the file content becomes the value):

```shell
kubectl create configmap name --from-file=filepath
```

ConfigMap Refresh Lifecycle

ConfigMaps are updated in the same way as any other Kubernetes object, and you can even use the same commands, such as kubectl apply, to do that. But be careful: updating the ConfigMap itself is one thing, and having the resources that use it pick up the change is another.

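When a ConfigMap is consumed as environment variables, its content is tied to the Pod's lifecycle: it is read when the container starts, and later changes are not reflected until the Pod is restarted or replaced. The same applies to files mounted with subPath. Files mounted through a regular ConfigMap volume are eventually refreshed by the kubelet, but many applications only read their configuration at startup anyway. So, in practice, to make sure the new ConfigMap data is used inside the Pod, you will usually need to restart it or bring up a new instance after you have modified the ConfigMap object itself.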
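One way to make that restart explicit, sketched here under the assumption that you version ConfigMap names yourself (the `-v2` suffix is a hypothetical convention), is to create a new immutable ConfigMap for each configuration change and reference it from the Deployment's Pod template. Because the Pod template changes, the Deployment rolls out new Pods that read the new data:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: game-demo-v2               # hypothetical versioned name
immutable: true                    # data cannot be changed after creation
data:
  player_initial_lives: "5"
  ui_properties_file_name: "user-interface.properties"
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: game                       # hypothetical name
spec:
  replicas: 2
  selector:
    matchLabels:
      app: game
  template:
    metadata:
      labels:
        app: game
    spec:
      containers:
        - name: game
          image: example.com/game:1.0   # hypothetical image
          envFrom:
            - configMapRef:
                name: game-demo-v2      # changing this name triggers a rollout
```

If you prefer to update a ConfigMap in place instead, `kubectl rollout restart deployment/game` replaces the Deployment's Pods so that they read the current data.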

📚 Want to dive deeper into Kubernetes? This article is part of our comprehensive Kubernetes Architecture Patterns guide, where you’ll find all fundamental and advanced concepts explained step by step.