When marketing steals a technical word, it leads to madness and a complete change of its meaning.

API is next on the list. The pattern is always the same: a technical term escapes the deeply technical forums and reaches a more “mainstream” level in the industry. As soon as that happens, it starts to lose its meaning and becomes a wildcard word that means very different things to different people. If you don’t believe me, walk through the following examples with me.

You can argue that terms need to evolve, and that the same word can come to mean different things as the industry evolves. That is true. For example, the term package once referred to a way of bundling software, usually as a TAR archive, so that it could be shared by mail or through an FTP server. It was redefined with the emergence of package managers in the 90’s, and again later with artifact management tools that handle dependencies, such as Maven and npm.

But I am not talking about those cases. I am talking about a term that gets used a lot because it sounds fancy and signals evolution or modernization, so people use it as much as possible, even to mean different things. One of these terms is API.

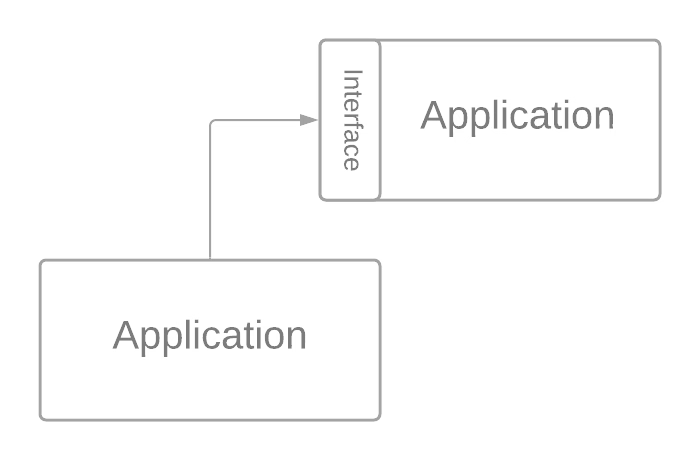

API stands for Application Programming Interface and, as its name states, it is an interface. From the early days of computing, the term has referred to the contract that defines how you interact with a specific program. Originally, it was used mainly for libraries, to define the contract they offered to other applications that needed their capabilities.
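To make the original sense concrete, here is a minimal sketch in Python. The names `Hasher`, `Sha256Hasher` and `fingerprint` are invented for illustration. The point is only that the API is the contract (`Hasher`), while the library code behind it is a separate thing.

```python
import hashlib
from typing import Protocol


class Hasher(Protocol):
    """The API: the contract that calling code depends on (hypothetical name)."""

    def digest(self, data: bytes) -> str:
        """Return a hexadecimal digest of the given bytes."""
        ...


class Sha256Hasher:
    """One possible implementation hidden behind the contract."""

    def digest(self, data: bytes) -> str:
        return hashlib.sha256(data).hexdigest()


def fingerprint(hasher: Hasher, text: str) -> str:
    """Application code: it only knows the interface, not the implementation."""
    return hasher.digest(text.encode("utf-8"))


if __name__ == "__main__":
    print(fingerprint(Sha256Hasher(), "hello"))
```

Swapping `Sha256Hasher` for any other class with the same `digest` method would not change `fingerprint` at all, which is what "interface" meant before the term grew.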

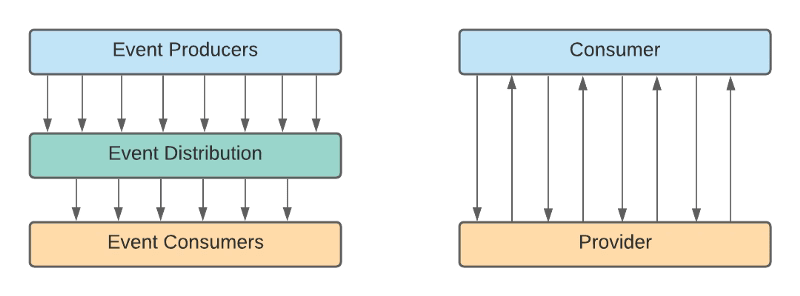

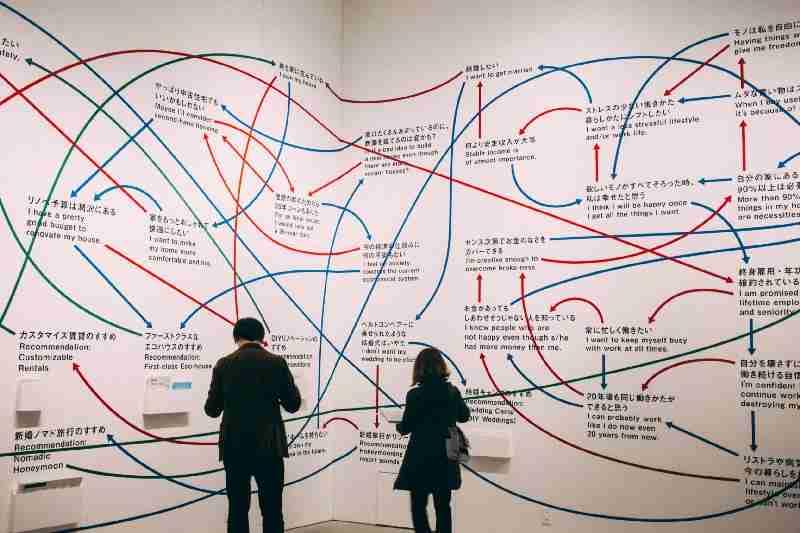

If we want to show this in graphical form, this is what the term API referred to:

With the emergence of REST services and mobile apps, the term API expanded beyond its original usage and became an everyday word, because all devs need some API to do their work: from common capabilities such as authentication to the specific capabilities a particular task requires.

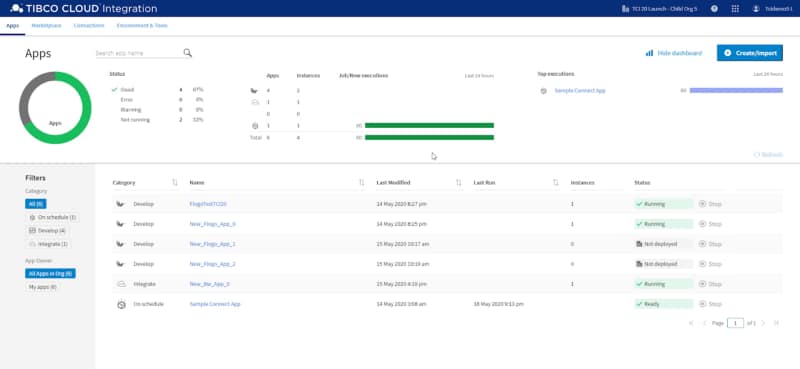

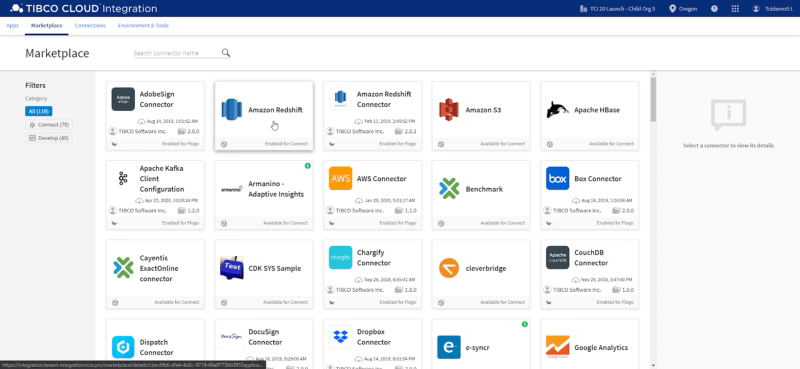

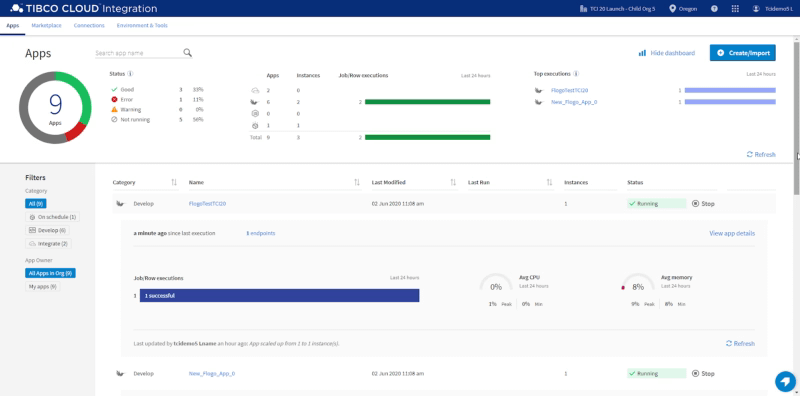

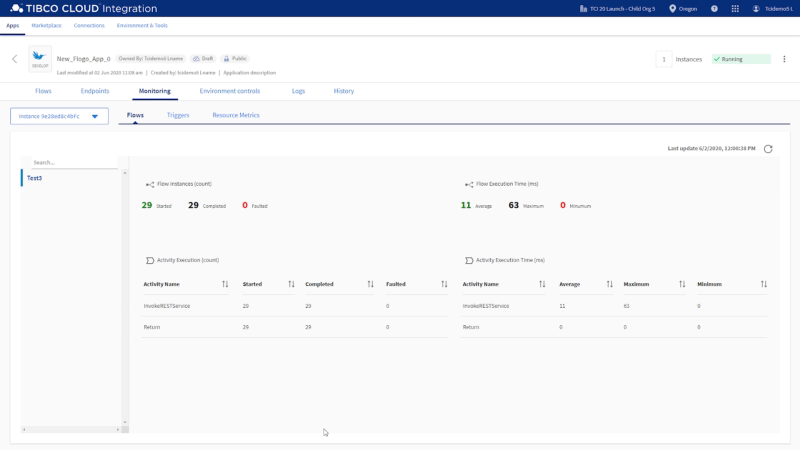

The explosion of services exposing their own APIs required a way to centrally manage the exposed interfaces, especially once we started to publish some of these capabilities to the outside world. We needed to secure them, identify who was using them and at what level, and give devs a way to find the documentation they needed to use the services. That is what drove the rise of API Management solutions.
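As a rough illustration of those concerns, the sketch below is a toy, in-memory stand-in for a management layer. `ApiGateway`, its quota model and its method names are assumptions made for this example and do not describe any real product. It only shows securing calls with a key, identifying the caller and tracking usage against a limit.

```python
from collections import Counter
from typing import Callable


class ApiGateway:
    """Hypothetical, in-memory stand-in for an API Management layer."""

    def __init__(self, quotas: dict[str, int]) -> None:
        # Maps each known API key to the number of calls it is allowed.
        self._quotas = quotas
        # Counts how many calls each key has made so far.
        self._usage = Counter()

    def call(self, api_key: str, backend: Callable[[], str]) -> str:
        """Forward a call to the backend service if the key is allowed."""
        if api_key not in self._quotas:
            raise PermissionError("unknown API key")
        if self._usage[api_key] >= self._quotas[api_key]:
            raise RuntimeError("quota exceeded")
        self._usage[api_key] += 1
        return backend()

    def usage(self, api_key: str) -> int:
        """Report how many calls a given consumer has made."""
        return self._usage[api_key]


if __name__ == "__main__":
    gateway = ApiGateway({"mobile-app": 2})
    print(gateway.call("mobile-app", lambda: "order list"))
    print(gateway.usage("mobile-app"))
```

Real solutions add much more, such as documentation portals, but the interface being managed and the service behind it remain two distinct things.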

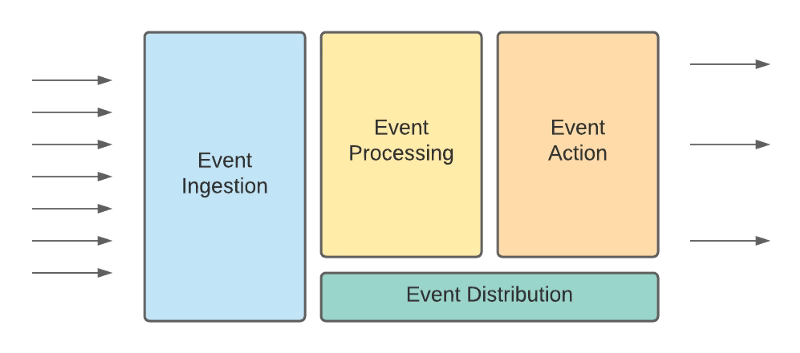

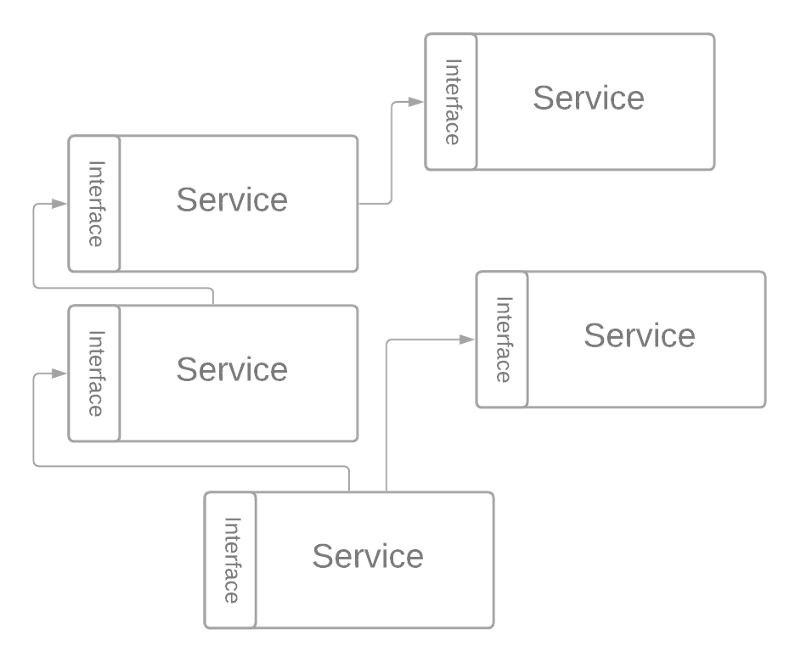

Then microservices came to revolutionize how applications are built. That means we now have more services, each of them providing its own API, to the point where we have pretty much one service per capability and, because of that, one API per capability, as you can see in the picture below:

The term API became so popular that some people started to use it to refer both to the interface and to the whole service implementing it, which has led, and is still leading, to a lot of confusion. Because of that, when we talk about API Development today, we can be talking about very different things:

- We can talk about the definition and model of the interface itself and its management.

- We can talk about a service implementation with an API exposed to be used and managed appropriately.

- We can even talk about a service that uses several APIs as part of its capability implementation.
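The three senses listed above can be told apart in a short sketch. Every name here (`WeatherApi`, `GeocodingApi`, `StaticWeatherService`, `StaticGeocodingService`, `TravelAdvisor`) is hypothetical and the data is made up, so treat it as an illustration of the distinction and nothing more.

```python
from typing import Protocol


# Sense 1: the API as the interface definition only.
class WeatherApi(Protocol):
    def temperature(self, city: str) -> float: ...


class GeocodingApi(Protocol):
    def locate(self, city: str) -> tuple[float, float]: ...


# Sense 2: a service implementation that exposes an API.
class StaticWeatherService:
    def __init__(self, readings: dict[str, float]) -> None:
        self._readings = readings

    def temperature(self, city: str) -> float:
        return self._readings[city]


class StaticGeocodingService:
    def __init__(self, coordinates: dict[str, tuple[float, float]]) -> None:
        self._coordinates = coordinates

    def locate(self, city: str) -> tuple[float, float]:
        return self._coordinates[city]


# Sense 3: a service that uses several APIs to implement its own capability.
class TravelAdvisor:
    def __init__(self, weather: WeatherApi, geocoding: GeocodingApi) -> None:
        self._weather = weather
        self._geocoding = geocoding

    def advise(self, city: str) -> str:
        latitude, longitude = self._geocoding.locate(city)
        degrees = self._weather.temperature(city)
        return f"{city} ({latitude}, {longitude}): {degrees} C"


if __name__ == "__main__":
    advisor = TravelAdvisor(
        StaticWeatherService({"Madrid": 31.0}),
        StaticGeocodingService({"Madrid": (40.4, -3.7)}),
    )
    print(advisor.advise("Madrid"))
```

Calling all three of these "the API" in the same meeting is exactly how the confusion starts. The first is a contract, the second is a service and the third is a consumer.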

The main problem with using the same term to refer to so many different things is that the word loses all its meaning, which complicates every conversation and leads to many problems we could avoid just by using the proper words and keeping the buzz and marketing a little bit out of technical conversations.