In today’s digital landscape, where data breaches and cyber threats are becoming increasingly sophisticated, ensuring the security of your servers is paramount. One of the critical security concerns that organizations must address is “Server Information Disclosure.” Server Information Disclosure occurs when sensitive information about a server’s configuration, technology stack, or internal structure is inadvertently exposed to unauthorized parties. Hackers can exploit this vulnerability to gain insights into potential weak points and launch targeted attacks. Such breaches can lead to data theft, service disruption, and reputation damage.

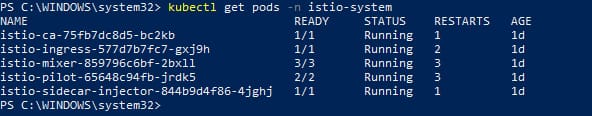

Information Disclosure and Istio Service Mesh

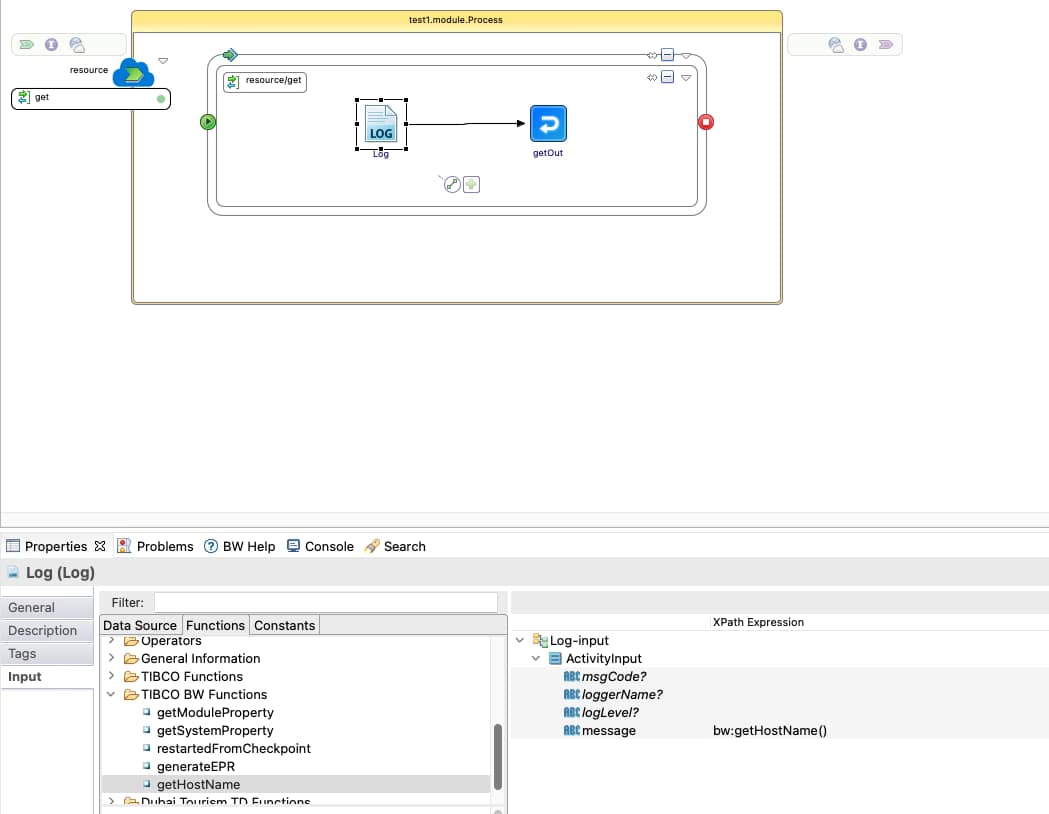

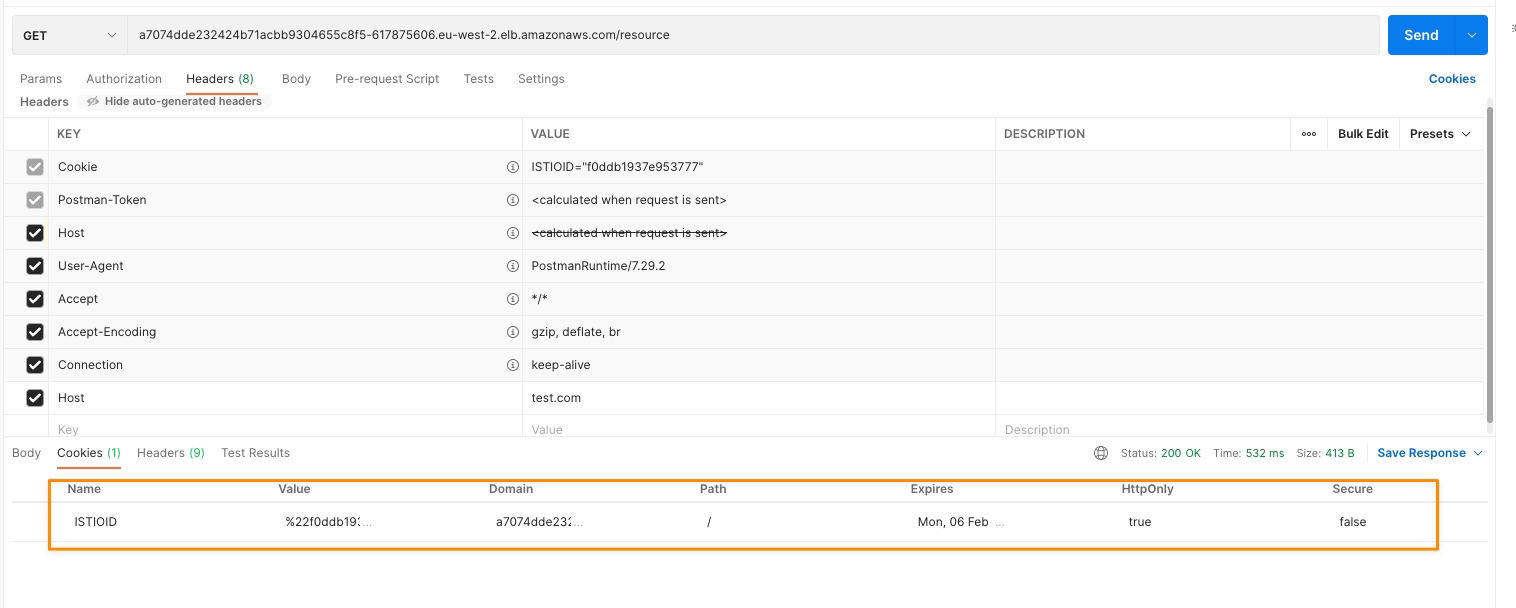

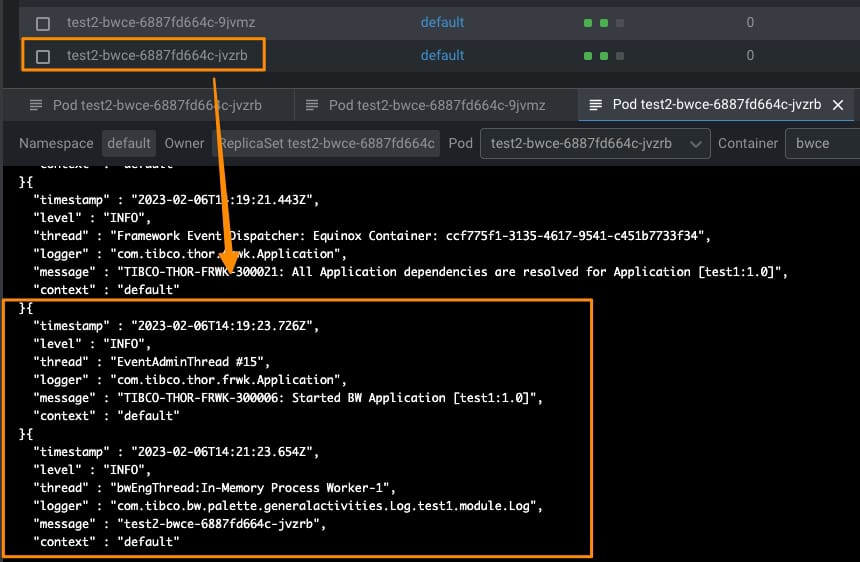

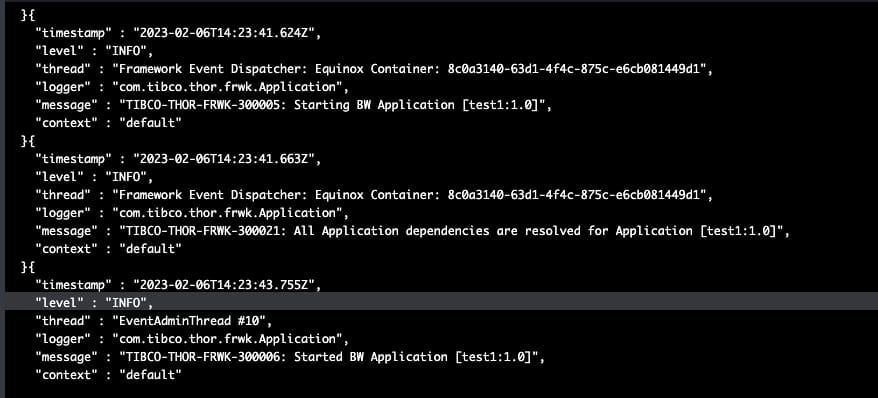

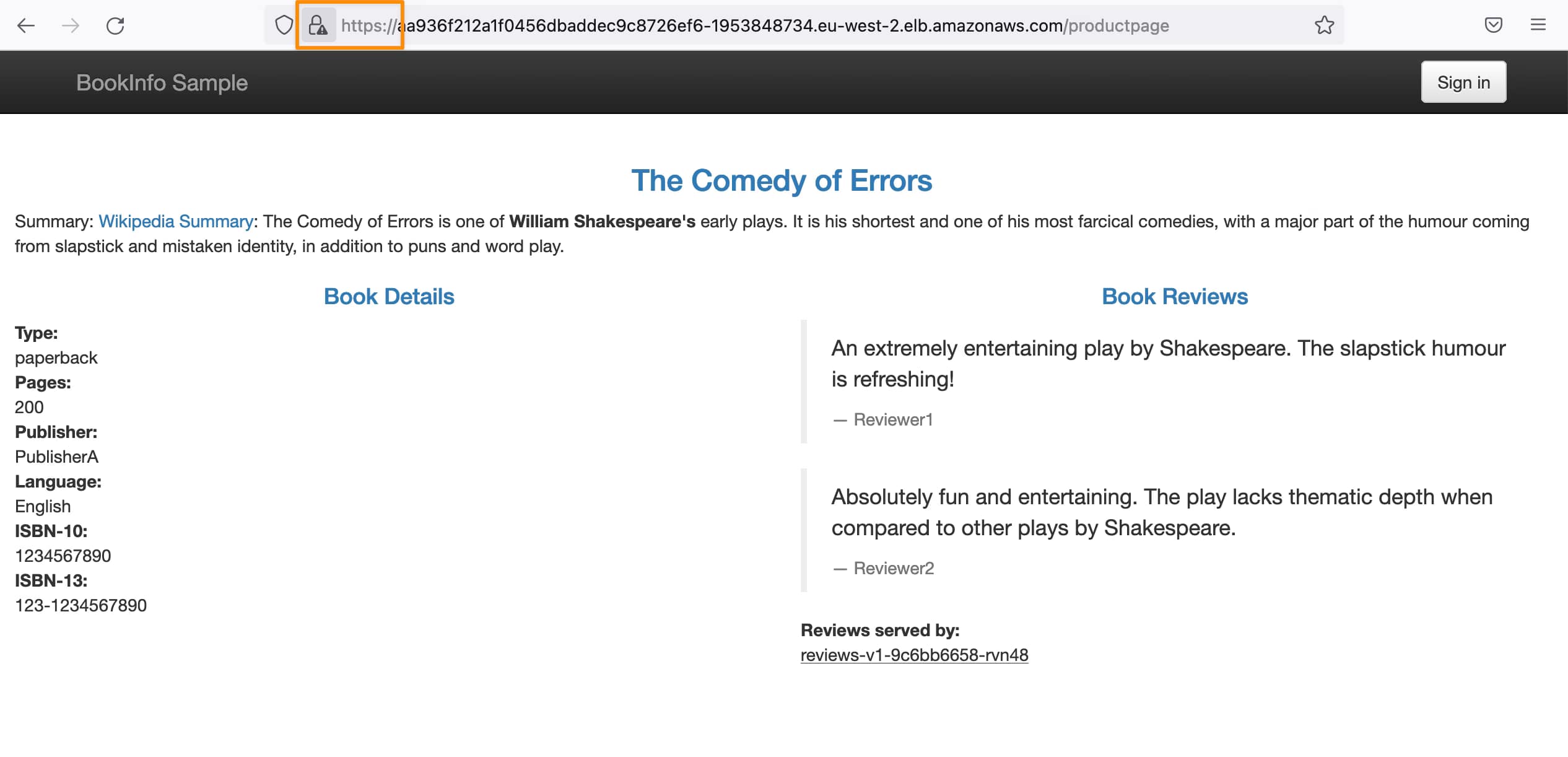

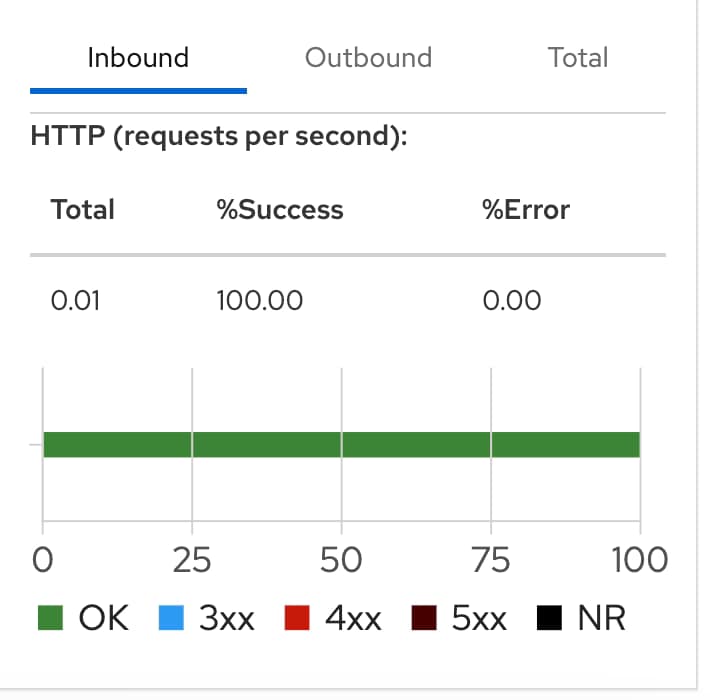

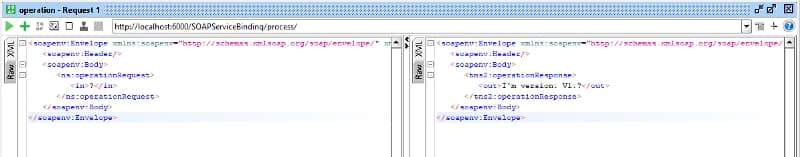

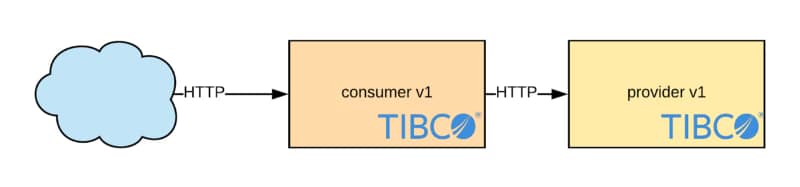

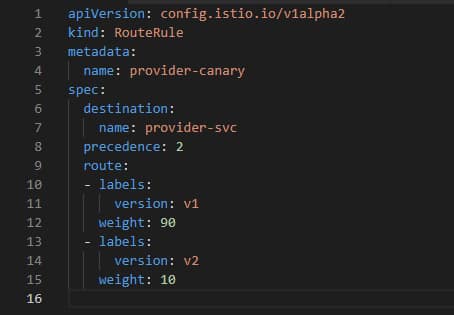

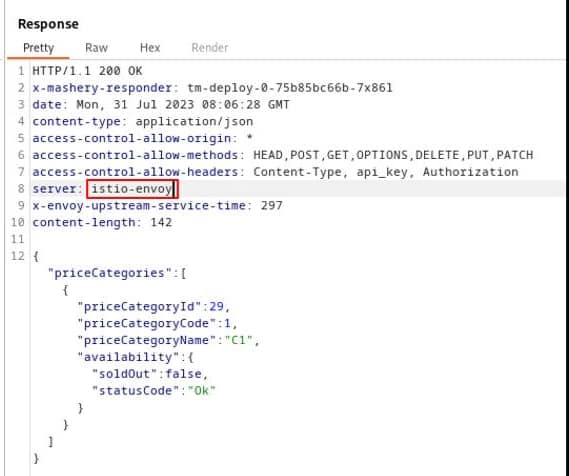

One example is the Server HTTP header, which is included in most HTTP responses and identifies the server software that produced the response. The value varies depending on the stack, but names such as Jetty or Tomcat are common. If you are using a service mesh such as Istio, you will see the header with a value of istio-envoy, as you can see here:

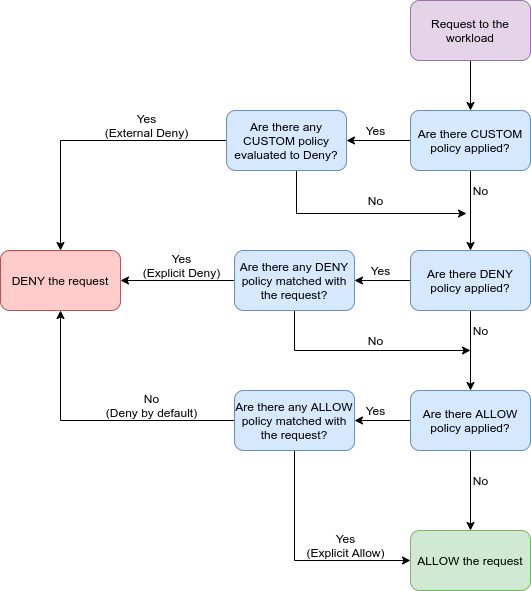

Limiting this kind of disclosure matters at several levels of security:

- Data Privacy: Server information leakage can expose confidential data, undermining user trust and violating data privacy regulations such as GDPR and HIPAA.

- Reduced Attack Surface: By concealing server details, you minimize the attack surface available to potential attackers.

- Security by Obscurity: While not a foolproof approach, limiting disclosure adds an extra layer of security, making it harder for hackers to gather intelligence.

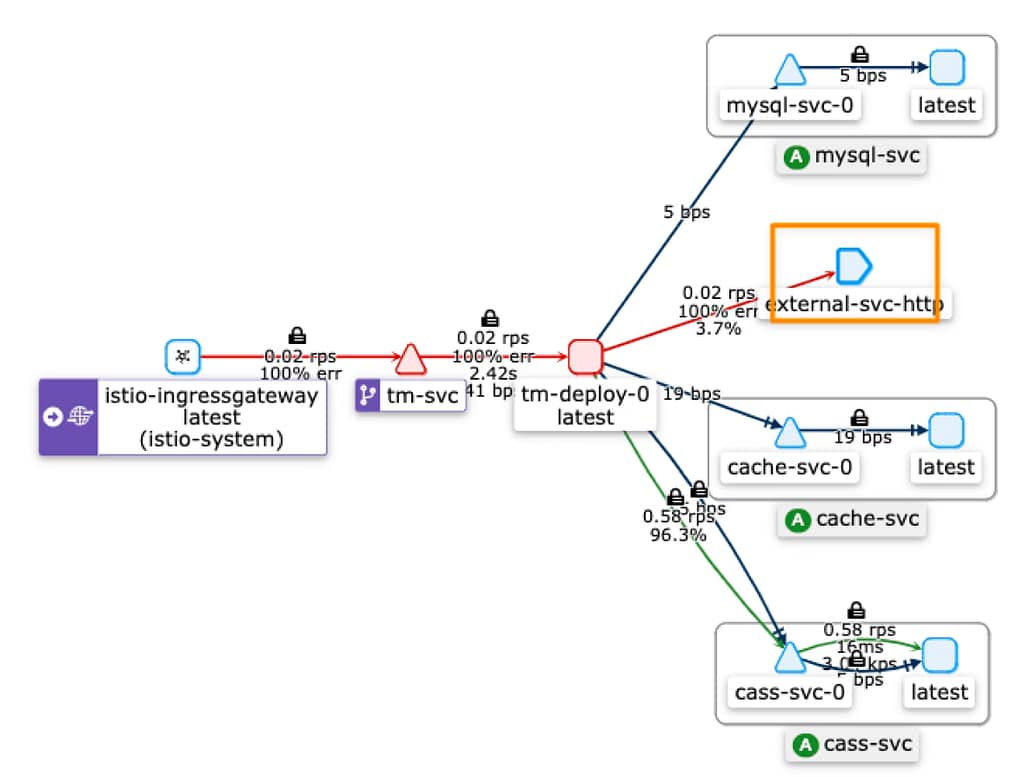

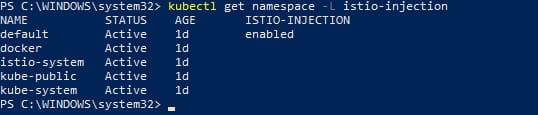

How to mitigate that with Istio Service Mesh?

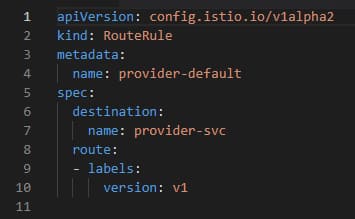

When using Istio, we can define rules to add and remove HTTP headers based on our needs, as discussed in this thread: https://discuss.istio.io/t/remove-header-operation/1692. It takes only a few simple clauses in the definition of your VirtualService, as you can see here:

    apiVersion: networking.istio.io/v1alpha3
    kind: VirtualService
    metadata:
      name: k8snode-virtual-service
    spec:
      hosts:
      - "example.com"
      gateways:
      - k8snode-gateway
      http:
      # headers and route belong to the same HTTP route entry
      - headers:
          response:
            # strip this header from every response on this route
            remove:
            - "x-my-fault-source"
        route:
        - destination:
            host: k8snode-service
            subset: version-1
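The headers block of a VirtualService route also accepts set and add operations, for requests as well as responses. The following is a minimal sketch that reuses the host and service names from the example above; the x-request-source header and both header values are hypothetical and only illustrate the syntax:

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: k8snode-virtual-service
spec:
  hosts:
  - "example.com"
  gateways:
  - k8snode-gateway
  http:
  - headers:
      request:
        add:
          x-request-source: "ingress"        # hypothetical header, appended to the request
      response:
        set:
          x-content-type-options: "nosniff"  # illustrative value, overwrites any existing one
        remove:
        - "x-my-fault-source"
    route:
    - destination:
        host: k8snode-service
        subset: version-1
```

Here set replaces an existing value, add appends to it, and remove takes a plain list of header names.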

Unfortunately, this approach does not work for every HTTP header. In particular, it falls short for the standard ones: headers that are not custom additions from your workloads but are commonly used and defined in the HTTP specifications (https://www.w3.org/Protocols/).

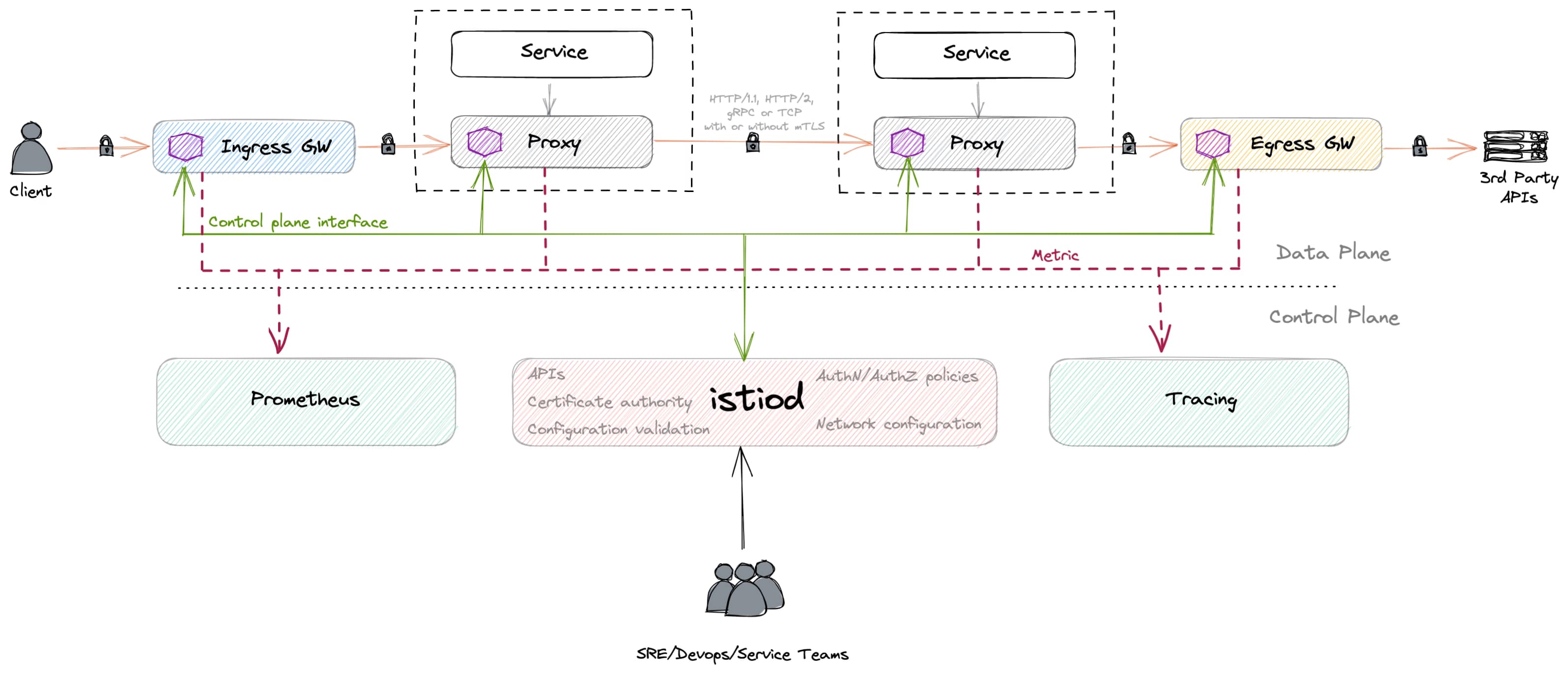

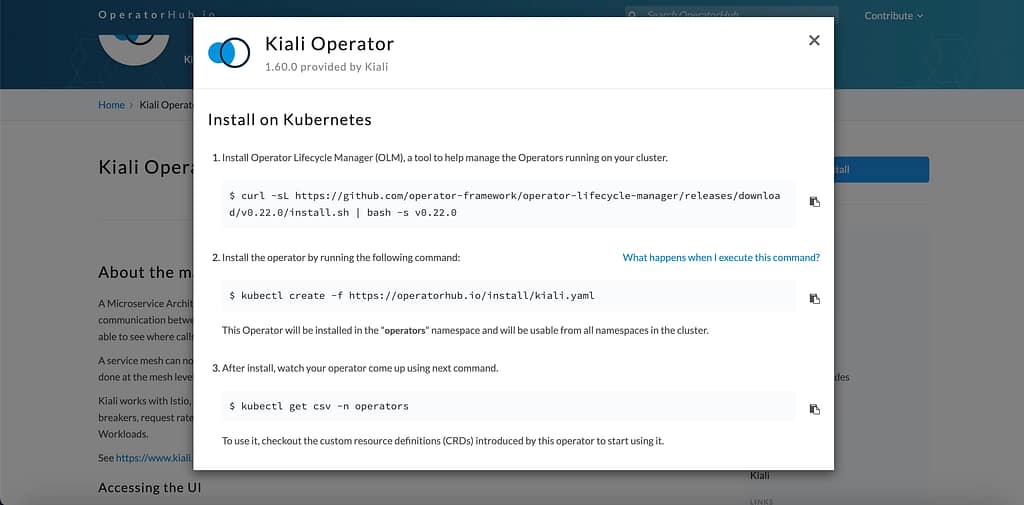

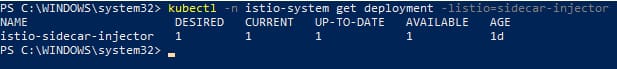

So the Server HTTP header is a little more complex to handle, and you need to use an EnvoyFilter, one of the most sophisticated objects in the Istio service mesh. According to the official Istio documentation, an EnvoyFilter provides a mechanism to customize the Envoy configuration generated by Istio Pilot. You can use an EnvoyFilter to modify values for certain fields, add specific filters, or even add entirely new listeners, clusters, etc.

EnvoyFilter Implementation to Remove Header

Now that we know we need a custom EnvoyFilter, let’s see which one removes the Server header and how it is built, to learn more about this component. Here is the EnvoyFilter for that job:

---

apiVersion: networking.istio.io/v1alpha3

kind: EnvoyFilter

metadata:

name: gateway-response-remove-headers

namespace: istio-system

spec:

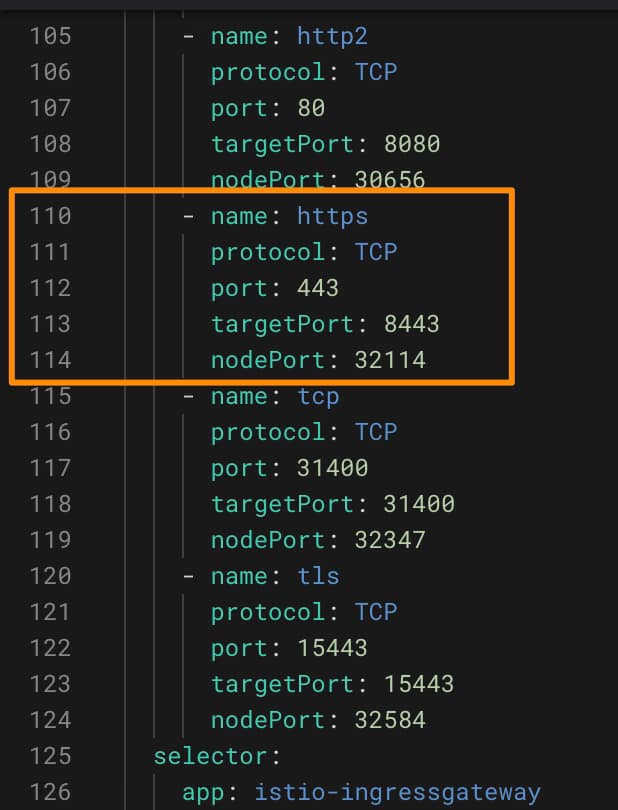

workloadSelector:

labels:

istio: ingressgateway

configPatches:

- applyTo: NETWORK_FILTER

match:

context: GATEWAY

listener:

filterChain:

filter:

name: "envoy.filters.network.http_connection_manager"

patch:

operation: MERGE

value:

typed_config:

"@type": "type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager"

server_header_transformation: PASS_THROUGH

- applyTo: ROUTE_CONFIGURATION

match:

context: GATEWAY

patch:

operation: MERGE

value:

response_headers_to_remove:

- "server"

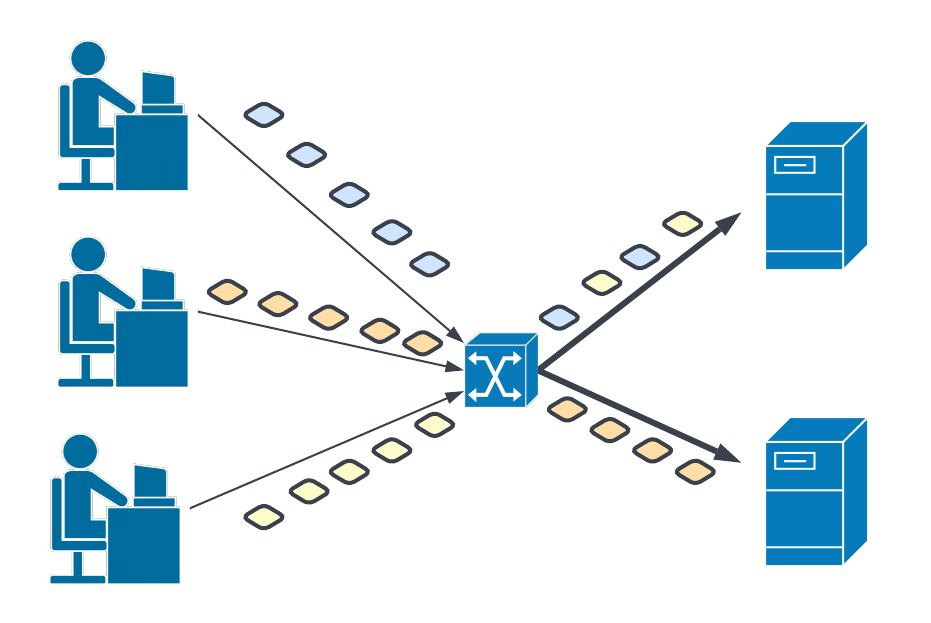

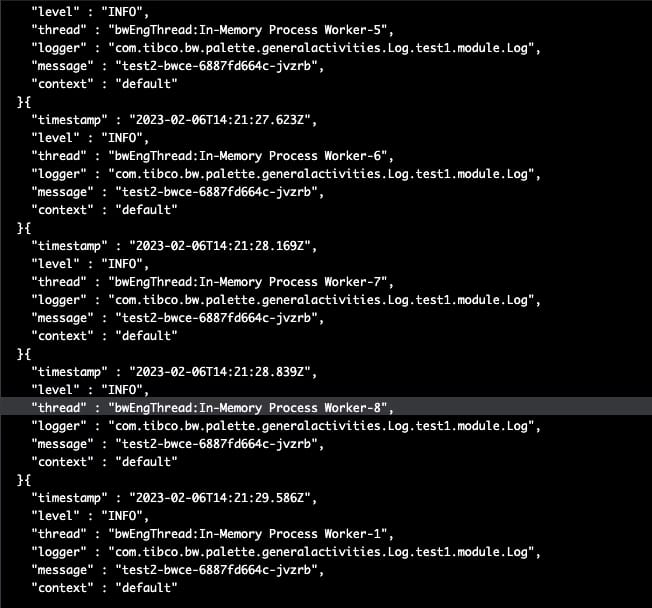

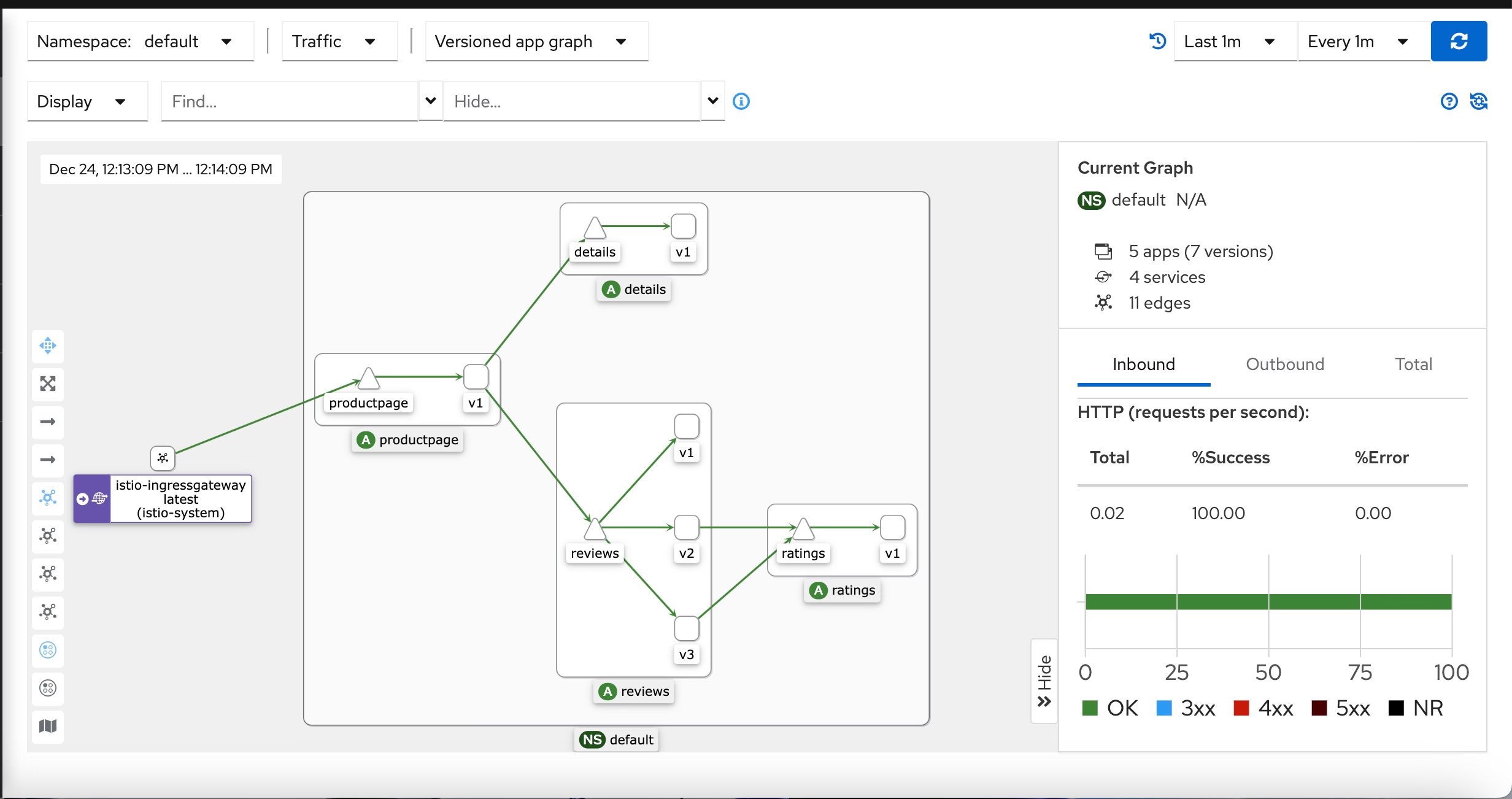

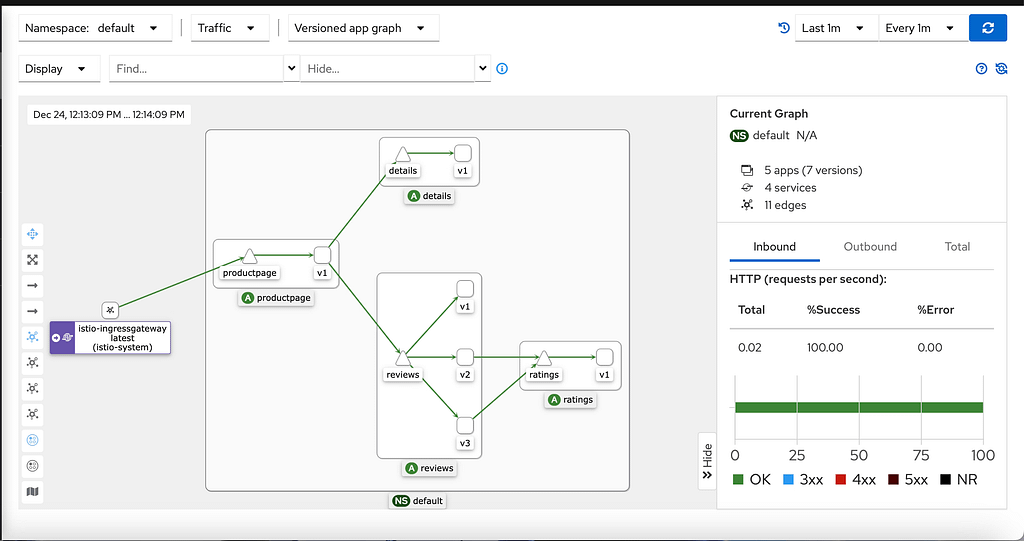

Let’s focus on the parts of the EnvoyFilter specification. First comes the usual workloadSelector, which determines where the filter is applied: in this case, the Istio ingressgateway. Then comes the configPatches section, which holds the customizations we need. In our case, there are two of them.

Both act on the context GATEWAY and apply to two different objects: NETWORK\_FILTER and ROUTE\_CONFIGURATION. You can also apply filters to sidecars to change their behavior. The first patch merges into the http\_connection\_manager filter, which handles the HTTP context, including the HTTP headers that are our main concern. It sets server\_header\_transformation to PASS\_THROUGH, so Envoy stops writing its own value into the Server header. The second patch acts on the ROUTE\_CONFIGURATION and removes the Server header through the response\_headers\_to\_remove option.
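For the sidecar case mentioned above, a variant of the same filter might look like the following. This is an untested sketch, not a verified configuration: it assumes that swapping the context to SIDECAR\_INBOUND and selecting the workload by label is enough, and the resource name, namespace, and app label are hypothetical placeholders.

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: EnvoyFilter
metadata:
  name: sidecar-response-remove-headers   # hypothetical name
  namespace: my-namespace                 # hypothetical namespace
spec:
  workloadSelector:
    labels:
      app: my-app                         # hypothetical workload label
  configPatches:
  # Stop the sidecar from overwriting the Server header with its own value
  - applyTo: NETWORK_FILTER
    match:
      context: SIDECAR_INBOUND
      listener:
        filterChain:
          filter:
            name: "envoy.filters.network.http_connection_manager"
    patch:
      operation: MERGE
      value:
        typed_config:
          "@type": "type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager"
          server_header_transformation: PASS_THROUGH
  # Drop the Server header set by the application itself
  - applyTo: ROUTE_CONFIGURATION
    match:
      context: SIDECAR_INBOUND
    patch:
      operation: MERGE
      value:
        response_headers_to_remove:
        - "server"
```

Scoping the filter with a workloadSelector keeps the change limited to the selected pods, which makes it easier to verify the result on one workload before applying it more widely.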

Conclusion

As you can see, this is not easy to implement. At the same time, it shows the power and low-level control you get from a robust service mesh such as Istio: you can adjust even small details of its behavior to your benefit and, in this case, improve the security of the workloads deployed behind the service mesh.

In the ever-evolving landscape of cybersecurity threats, safeguarding your servers against information disclosure is crucial to protect sensitive data and maintain your organization’s integrity. Istio empowers you to fortify your server security by providing robust tools for traffic management, encryption, and access control.

Remember, the key to effective server security is a proactive approach that addresses vulnerabilities before they can be exploited. Take the initiative to implement Istio and elevate your server protection.
