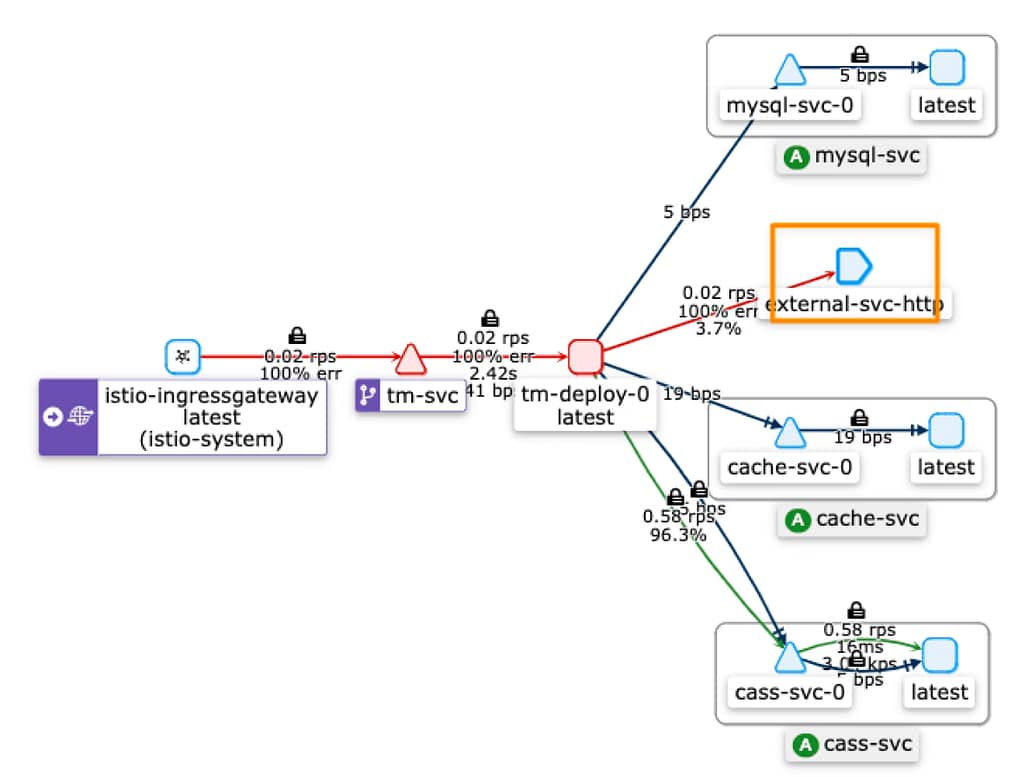

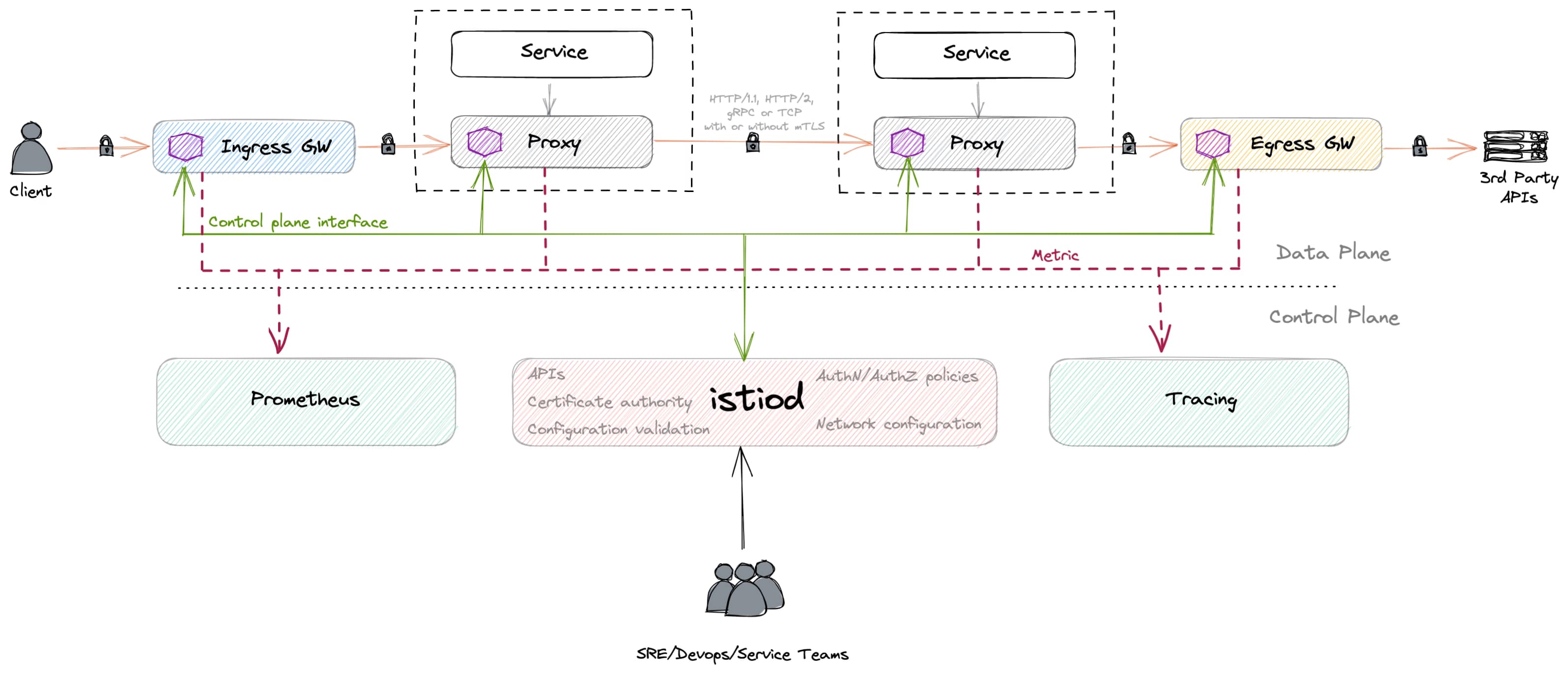

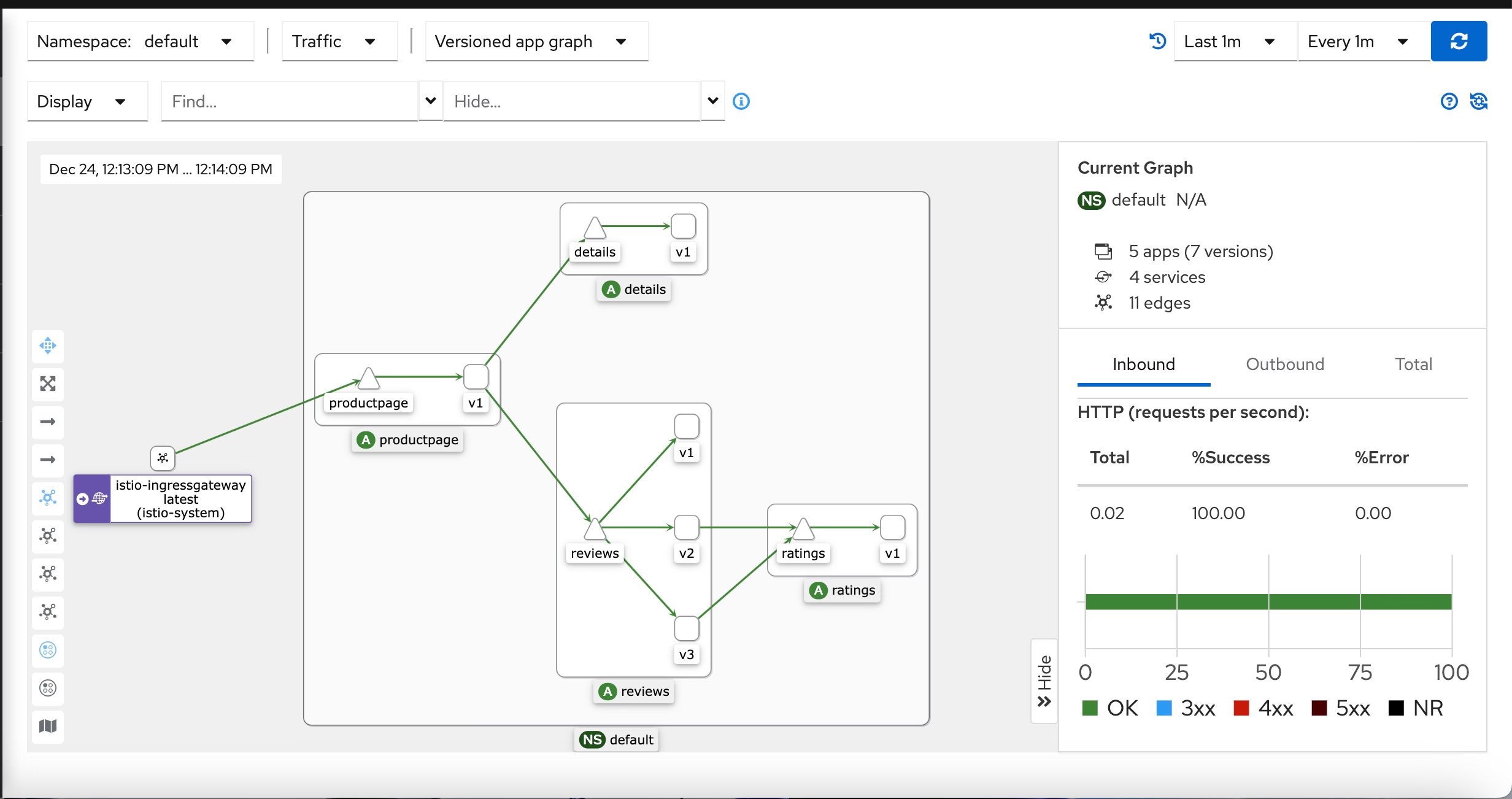

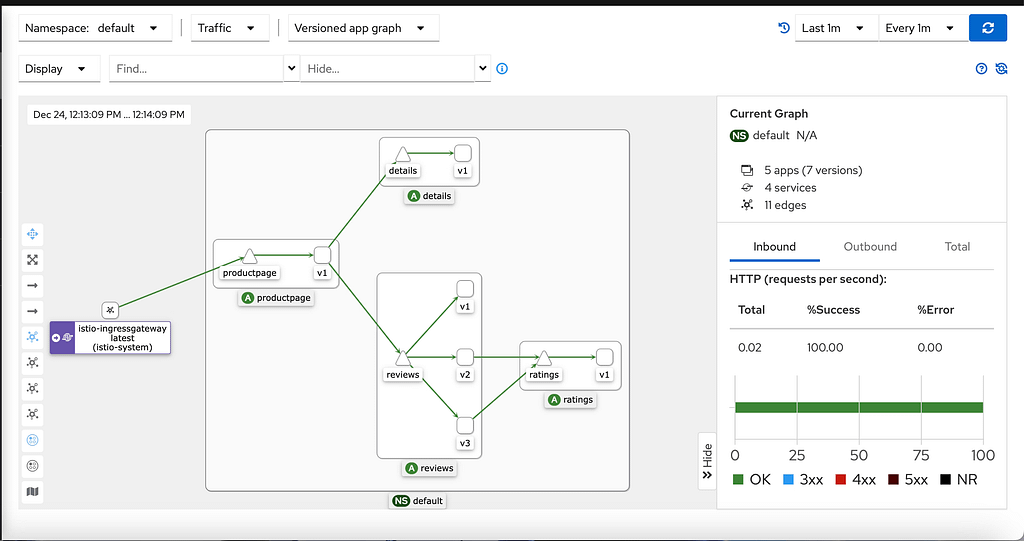

Istio te permite configurar Sticky Session, entre otras características de red, para tus cargas de trabajo de Kubernetes. Como hemos comentado en varios artículos sobre Istio, istio despliega una malla de servicios que proporciona un plano de control central para tener toda la configuración relacionada con los aspectos de red de tus cargas de trabajo de Kubernetes. Esto cubre muchos aspectos diferentes de la comunicación dentro de la plataforma de contenedores, como la seguridad que cubre el transporte seguro, la autenticación o la autorización, y, al mismo tiempo, características de red, como el enrutamiento y la distribución de tráfico, que es el tema principal del artículo de hoy.

Estas capacidades de enrutamiento son similares a lo que un Balanceador de Carga tradicional de Nivel 7 puede proporcionar. Cuando hablamos de Nivel 7, nos referimos a los niveles convencionales que componen la pila OSI, donde el nivel 7 está relacionado con el Nivel de Aplicación.

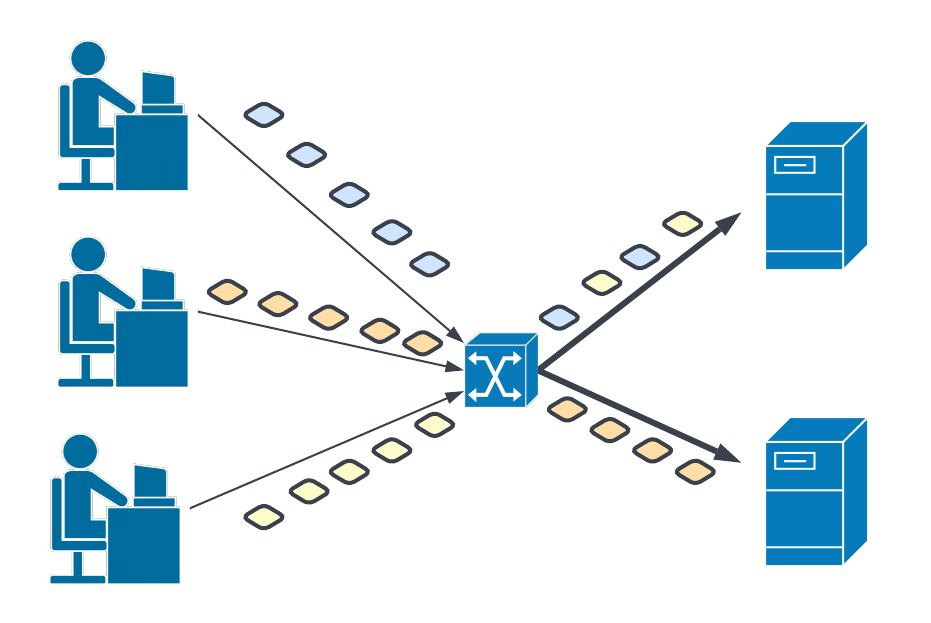

Una configuración de Sticky Session o Afinidad de Sesión es una de las características más comunes que puedes necesitar implementar en este escenario. El caso de uso es el siguiente:

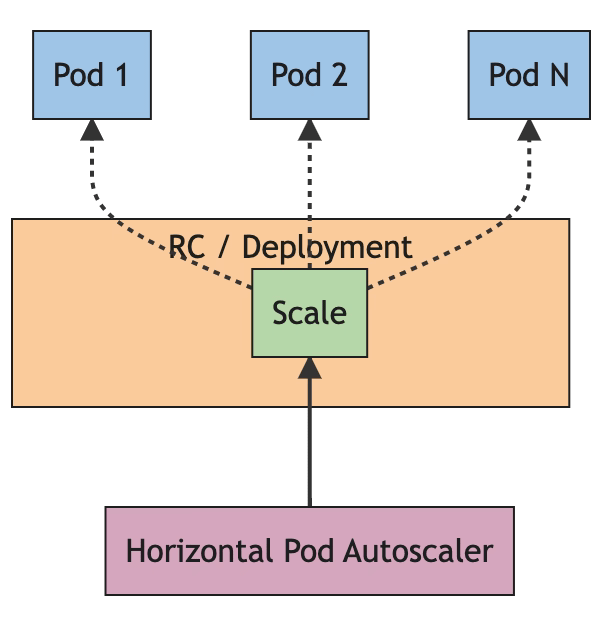

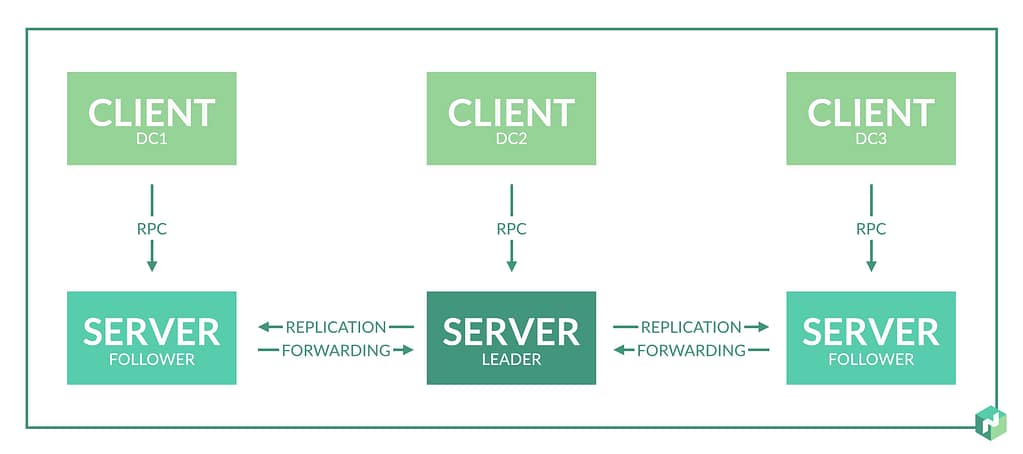

Tienes varias instancias de tus cargas de trabajo, por lo que diferentes réplicas de pods en una situación de Kubernetes. Todos estos pods detrás del mismo servicio. Por defecto, redirigirá las solicitudes de manera round-robin entre las réplicas de pods en un estado Ready, por lo que Kubernetes entiende que están listas para recibir la solicitud a menos que lo definas de manera diferente.

Pero en algunos casos, principalmente cuando estás tratando con una aplicación web o cualquier aplicación con estado que maneja el concepto de una sesión, podrías querer que la réplica que procesa la primera solicitud también maneje el resto de las solicitudes durante la vida útil de la sesión.

Por supuesto, podrías hacer eso fácilmente simplemente enrutando todo el tráfico a una solicitud, pero en ese caso, perderíamos otras características como el balanceo de carga de tráfico y HA. Entonces, esto generalmente se implementa usando políticas de Afinidad de Sesión o Sticky Session que proporcionan lo mejor de ambos mundos: la misma réplica manejando todas las solicitudes de un usuario, pero distribución de tráfico entre diferentes usuarios.

¿Cómo funciona Sticky Session?

El comportamiento detrás de esto es relativamente fácil. Veamos cómo funciona.

Primero, lo importante es que necesitas «algo» como parte de tus solicitudes de red que identifique todas las solicitudes que pertenecen a la misma sesión, para que el componente de enrutamiento (en este caso, este rol lo desempeña istio) pueda determinar qué parte necesita manejar estas solicitudes.

Este «algo» que usamos para hacer eso, puede ser diferente dependiendo de tu configuración, pero generalmente, esto es una Cookie o un Encabezado HTTP que enviamos en cada solicitud. Por lo tanto, sabemos que la réplica maneja todas las solicitudes de ese tipo específico.

¿Cómo implementa Istio el soporte para Sticky Session?

En el caso de usar Istio para desempeñar este rol, podemos implementar eso usando una Regla de Destino específica que nos permite, entre otras capacidades, definir la política de tráfico para definir cómo queremos que se divida el tráfico y para implementar el Sticky Session necesitamos usar la característica “consistentHash”, que permite que todas las solicitudes que se computan al mismo hash sean enviadas a la réplica.

Cuando definimos las características de consistentHash, podemos decir cómo se creará este hash y, en otras palabras, qué componentes se usarán para generar este hash, y esto puede ser una de las siguientes opciones:

- httpHeaderName: Usa un Encabezado HTTP para hacer la distribución de tráfico

- httpCookie: Usa una Cookie HTTP para hacer la distribución de tráfico

- httpQueryParameterName: Usa una Cadena de Consulta para hacer la Distribución de Tráfico.

- maglev: Usa el Balanceador de Carga Maglev de Google para hacer la determinación. Puedes leer más sobre Maglev en el artículo de Google.

- ringHash: Usa un enfoque de hash basado en anillo para el balanceo de carga entre los pods disponibles.

Entonces, como puedes ver, tendrás muchas opciones diferentes. Aún así, solo las tres primeras serían las más utilizadas para implementar una sesión persistente, y generalmente, la opción de Cookie HTTP (httpCookie) será la preferida, ya que se basaría en el enfoque HTTP para gestionar la sesión entre clientes y servidores.

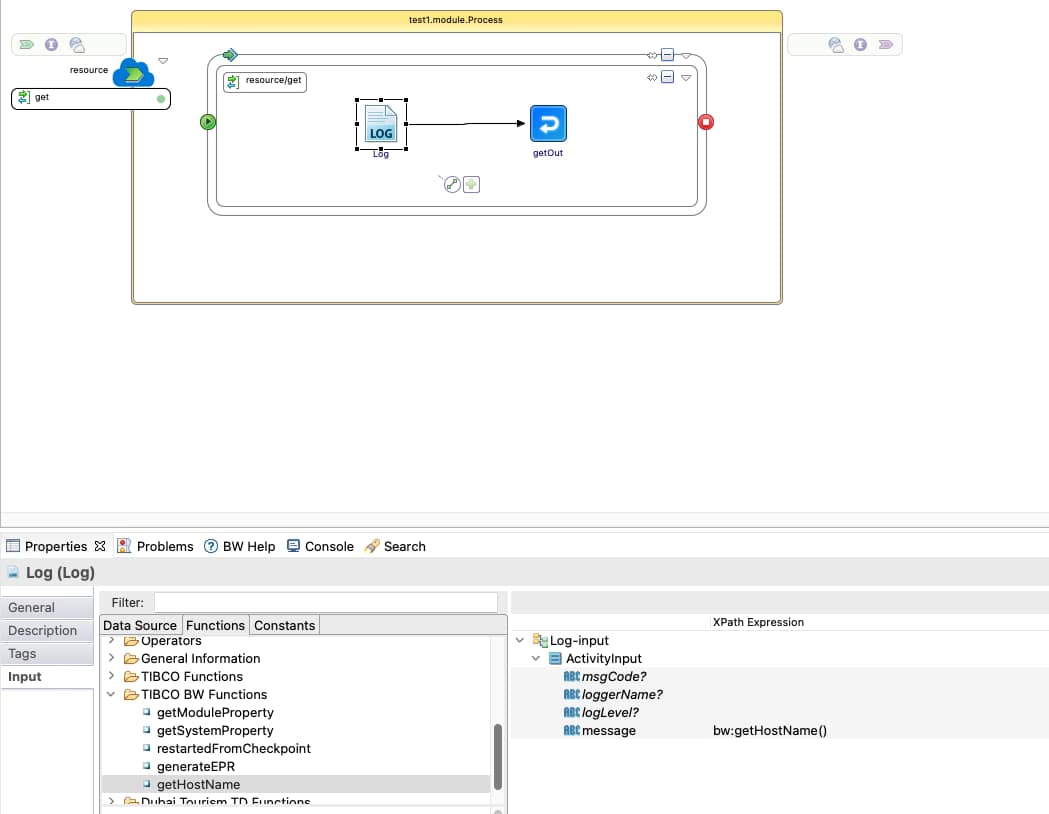

Ejemplo de Implementación de Sticky Session usando TIBCO BW

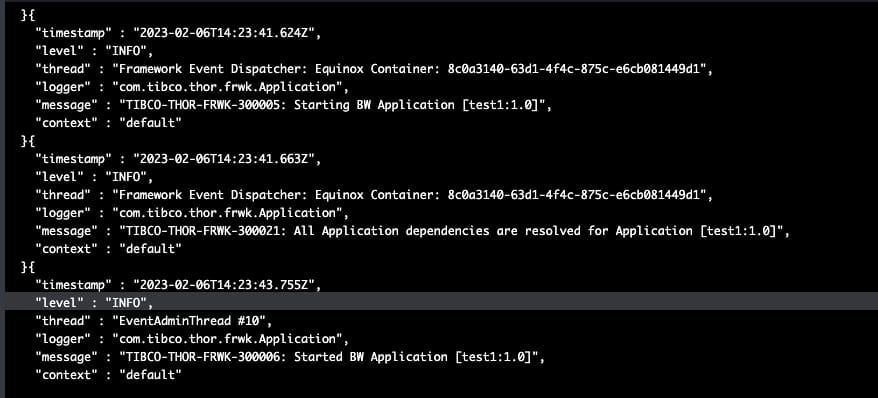

Definiremos una carga de trabajo TIBCO BW muy simple para implementar un servicio REST, sirviendo una respuesta GET con un valor codificado. Para simplificar el proceso de validación, la aplicación registrará el nombre de host del pod para que rápidamente podamos ver quién está manejando cada una de las solicitudes:

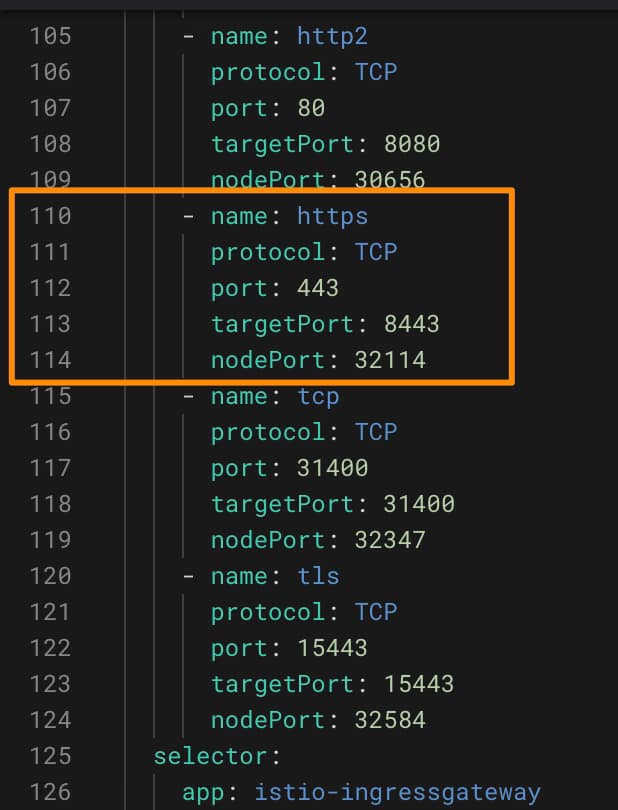

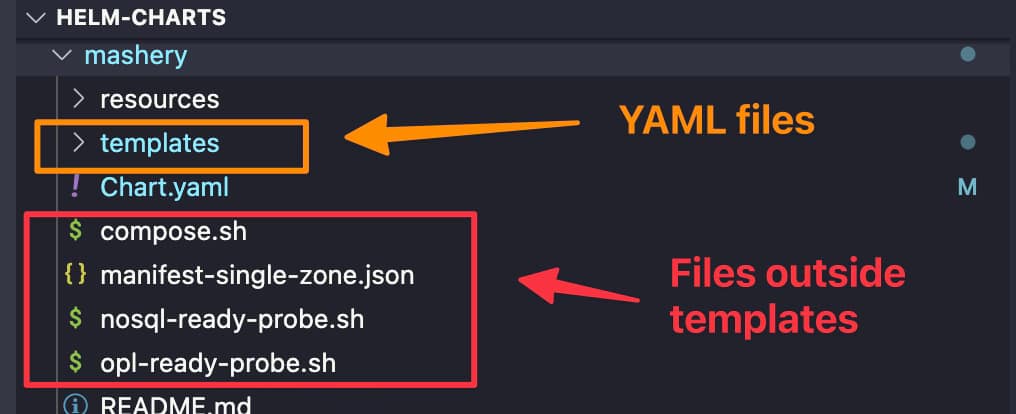

Desplegamos esto en nuestro clúster de Kubernetes y lo exponemos usando un servicio de Kubernetes; en nuestro caso, el nombre de este servicio será test2-bwce-srv

Además de eso, aplicamos la configuración de istio, que requerirá tres (3) objetos de istio: gateway, servicio virtual y la regla de destino. Como nuestro enfoque está en la regla de destino, intentaremos mantenerlo lo más simple posible en los otros dos objetos:

apiVersion: networking.istio.io/v1beta1

kind: Gateway

metadata:

name: default-gw

spec:

selector:

istio: ingressgateway

servers:

- hosts:

- '*'

port:

name: http

number: 80

protocol: HTTPServicio Virtual:

apiVersion: networking.istio.io/v1beta1

kind: VirtualService

metadata:

name: test-vs

spec:

gateways:

- default-gw

hosts:

- test.com

http:

- match:

- uri:

prefix: /

route:

- destination:

host: test2-bwce-srv

port:

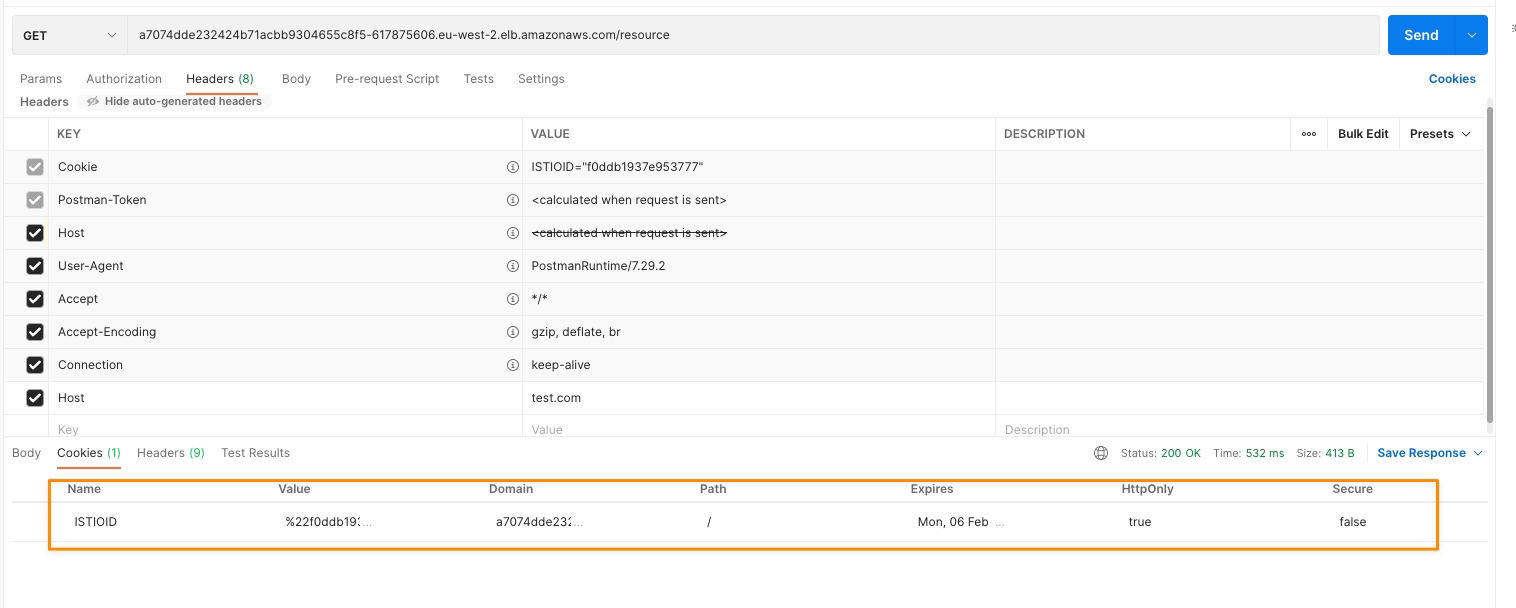

number: 8080Y finalmente, la DestinationRule usará una httpCookie que llamaremos ISTIOD, como puedes ver en el fragmento a continuación:

apiVersion: networking.istio.io/v1beta1

kind: DestinationRule

metadata:

name: default-sticky-dr

namespace: default

spec:

host: test2-bwce-srv.default.svc.cluster.local

trafficPolicy:

loadBalancer:

consistentHash:

httpCookie:

name: ISTIOID

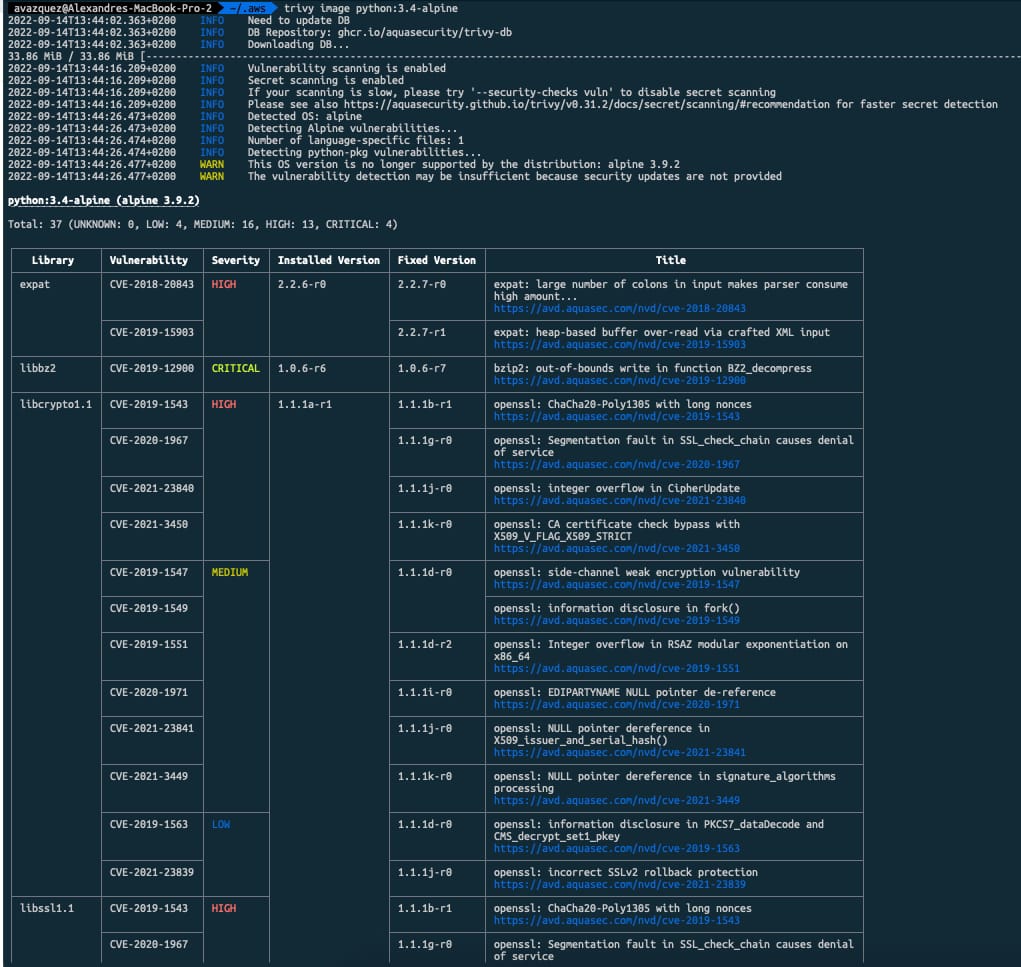

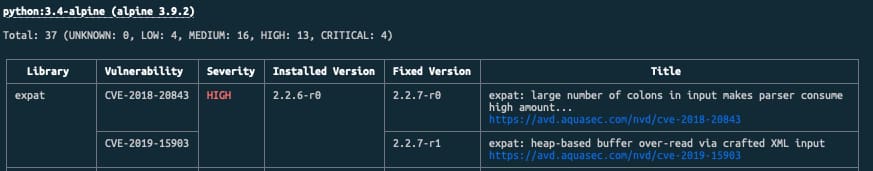

ttl: 60sAhora, que ya hemos comenzado nuestra prueba, y después de lanzar la primera solicitud, obtenemos una nueva Cookie que es generada por istio mismo que se muestra en la ventana de respuesta de Postman:

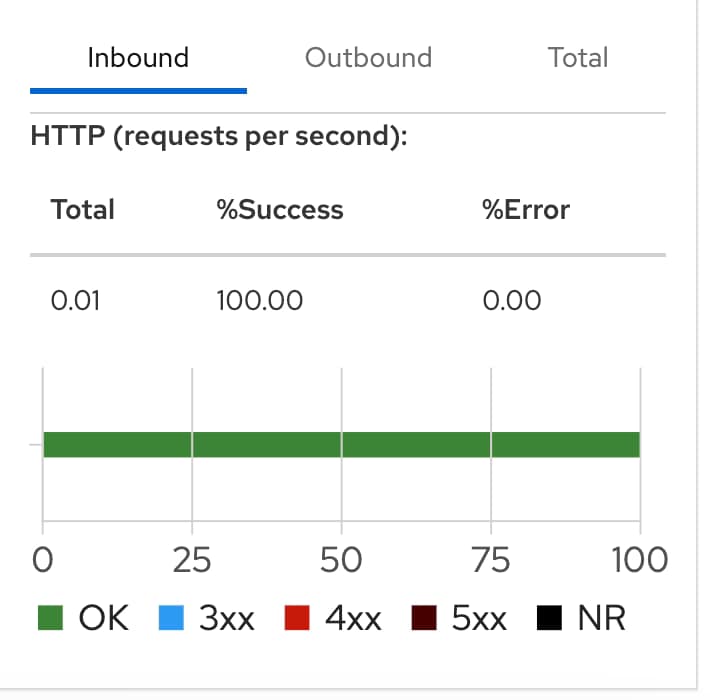

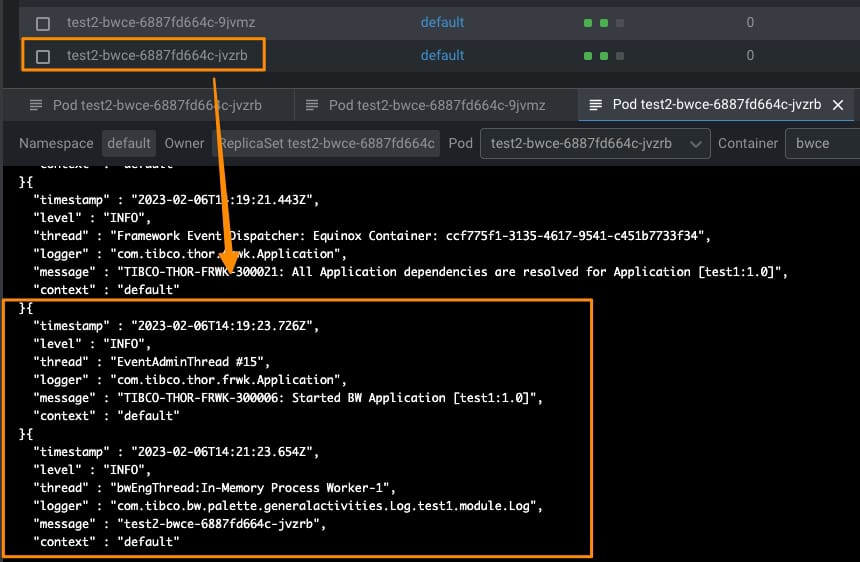

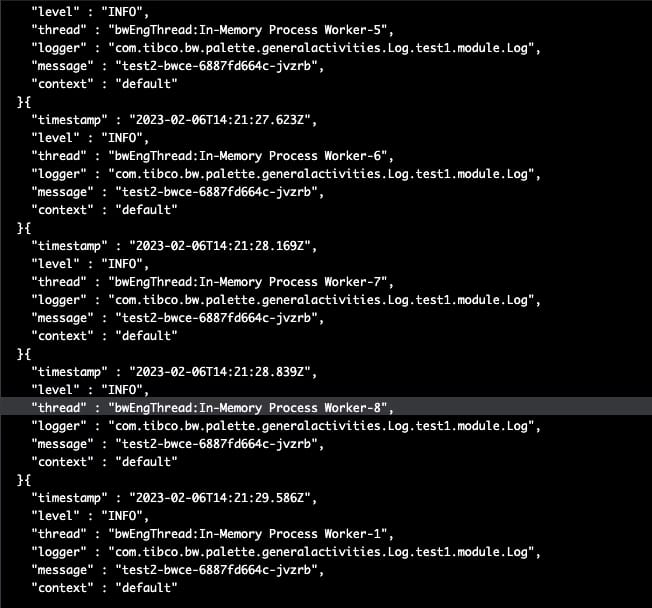

Esta solicitud ha sido manejada por una de las réplicas disponibles del servicio, como puedes ver aquí:

Todas las solicitudes subsiguientes de Postman ya incluyen la cookie, y todas ellas son manejadas desde el mismo pod:

Mientras que el registro de la otra réplica está vacío, ya que todas las solicitudes han sido enrutadas a ese pod específico.

Resumen

Cubrimos en este artículo la razón detrás de la necesidad de una sesión persistente en la carga de trabajo de Kubernetes y cómo podemos lograr eso usando las capacidades de la Malla de Servicios de Istio. Así que, espero que esto pueda ayudar a implementar esta configuración en tus cargas de trabajo que puedas necesitar hoy o en el futuro.

📚 Want to dive deeper into Kubernetes? This article is part of our comprehensive Kubernetes Architecture Patterns guide, where you’ll find all fundamental and advanced concepts explained step by step.