Save time and money on your application platform by deploying applications differently in Kubernetes.

We come from a time when we deployed applications using a simple, linear process. The traditional way looks pretty much like this:

- We wait until a weekend or some other time when the load is low and the business can tolerate some service unavailability.

- We schedule the change and warn all the teams involved so that they are ready at that time to manage the impact.

- We deploy the new version, have all the teams run the functional tests they need to confirm it is working fine, and then wait for the real load to arrive.

- We monitor during the first hours to see if anything goes wrong, and if it does, we start a rollback process.

- Once everything is running fine, we wait until the next release in 3–4 months.

But this is no longer valid. The business demands that IT be agile and change quickly, and it cannot afford that kind of effort each week or, even worse, each day. Do you think it is possible to gather all the teams each night to deploy the latest changes? It is not feasible at all.

So, technology has advanced to help us solve that issue better, and this is where canarying comes in.

Introducing Canary Deployments

Canary deployments (or just canarying, if you prefer) are not something new, and a lot of people have been talking about them:

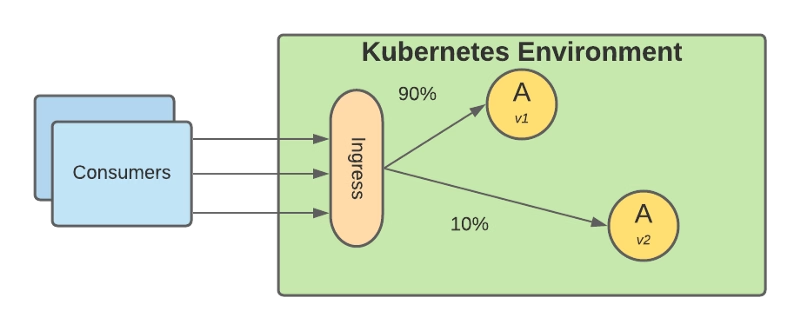

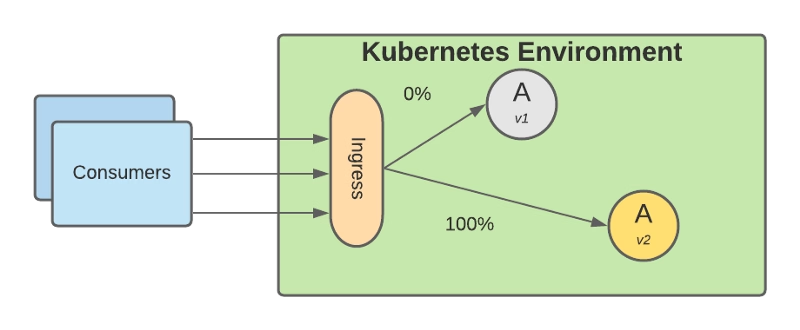

They have been around for some time, but until recently they were neither easy nor practical to implement. The idea is to deploy the new version into production while keeping the traffic pointed at the old version of the application, and then start shifting a small part of that traffic to the new version.

Based on that small subset of requests, you monitor how the new version performs at different levels: functional, performance, and so on. Once you feel comfortable with how it is behaving, you shift all the traffic to the new version and retire the old one.
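To make the traffic-shifting idea a bit more tangible, here is a minimal Python sketch that simulates splitting requests between two versions by percentage. The names `pick_version` and `simulate`, and the labels `stable` and `canary`, are ours and purely illustrative; in a real cluster the split is done by the routing layer, not by application code.

```python
import random


def pick_version(canary_weight: int, rng: random.Random) -> str:
    """Route a single request: 'canary' with canary_weight percent probability."""
    if not 0 <= canary_weight <= 100:
        raise ValueError("canary_weight must be between 0 and 100")
    # rng.random() is in [0, 1), so a weight of 0 never picks the canary
    # and a weight of 100 always does.
    return "canary" if rng.random() * 100 < canary_weight else "stable"


def simulate(canary_weight: int, requests: int = 10_000, seed: int = 42) -> dict:
    """Count how many simulated requests land on each version."""
    rng = random.Random(seed)
    counts = {"stable": 0, "canary": 0}
    for _ in range(requests):
        counts[pick_version(canary_weight, rng)] += 1
    return counts
```

Calling `simulate(10)` sends roughly one request in ten to the new version, which is the small subset you would then watch before raising the weight.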

The benefits that come with this approach are huge:

- You don’t need a big staging environment as before, because you can run some of the tests with real data in production without affecting your business or the availability of your services.

- You can reduce time to market and increase the frequency of deployments because you can do them with less effort and fewer people involved.

- Your deployment window is much wider, as you do not need to wait for a specific time slot, and because of that, you can deploy new functionality more frequently.

Implementing Canary Deployment in Kubernetes

To implement canary deployments in Kubernetes, we need more flexibility in how traffic is routed among our internal components, which is one of the capabilities you gain by using a service mesh.
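As a rough illustration of what such a routing rule can look like, the sketch below builds a weighted route in the shape of an Istio `VirtualService`, assuming Istio is the mesh in use. The helper name `canary_virtual_service`, the resource name, and the `stable` and `canary` subsets are assumptions for the example; the subsets would have to be declared separately in a `DestinationRule`.

```python
import json


def canary_virtual_service(host: str, canary_weight: int) -> dict:
    """Build a VirtualService-shaped manifest splitting traffic by weight."""
    if not 0 <= canary_weight <= 100:
        raise ValueError("canary_weight must be between 0 and 100")
    return {
        "apiVersion": "networking.istio.io/v1beta1",
        "kind": "VirtualService",
        "metadata": {"name": f"{host}-canary"},  # hypothetical resource name
        "spec": {
            "hosts": [host],
            "http": [
                {
                    "route": [
                        # Old version keeps the remaining share of the traffic.
                        {
                            "destination": {"host": host, "subset": "stable"},
                            "weight": 100 - canary_weight,
                        },
                        # New version receives only the canary share.
                        {
                            "destination": {"host": host, "subset": "canary"},
                            "weight": canary_weight,
                        },
                    ]
                }
            ],
        },
    }


if __name__ == "__main__":
    # Print the manifest as JSON for a hypothetical "reviews" service at 10%.
    print(json.dumps(canary_virtual_service("reviews", 10), indent=2))
```

Shifting more traffic is then a matter of regenerating the manifest with a higher `canary_weight` and applying it again.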

We already discussed the benefits of using a service mesh as part of your environment, but if you would like to take another look, please refer to this article:

Several technology components can provide those capabilities, and they are what let you create the traffic routes needed for this. To see how, take a look at the following article about one of the default options, Istio:

But being able to route the traffic is not enough to implement a complete canary deployment approach. We also need to monitor the new version and act on those metrics automatically to avoid manual intervention. To do this, we need to include different tools that provide those capabilities:

Prometheus is the de facto option for monitoring workloads deployed on Kubernetes, and here you can get more info about how both projects play together.
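To show what acting on metrics can mean in practice, here is a minimal sketch of an automated canary check. Everything in it is an assumption made for illustration: the `Metrics` fields, the thresholds, and the `fetch_metrics` callback, which in a real setup would query your monitoring system (for example Prometheus) and would sit behind the routing change for each step.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Tuple


@dataclass
class Metrics:
    error_rate: float       # fraction of failed requests, 0.0 to 1.0
    p95_latency_ms: float   # 95th percentile latency in milliseconds


def is_healthy(canary: Metrics, stable: Metrics,
               max_error_increase: float = 0.01,
               max_latency_ratio: float = 1.2) -> bool:
    """Compare the canary against the stable version using simple thresholds."""
    return (canary.error_rate <= stable.error_rate + max_error_increase
            and canary.p95_latency_ms <= stable.p95_latency_ms * max_latency_ratio)


def run_rollout(steps: Iterable[int],
                fetch_metrics: Callable[[int], Tuple[Metrics, Metrics]]) -> Tuple[str, int]:
    """Walk through the traffic steps; return the decision and the final canary weight."""
    for weight in steps:
        # fetch_metrics is hypothetical: it returns (canary, stable) metrics
        # observed while the canary receives `weight` percent of the traffic.
        canary, stable = fetch_metrics(weight)
        if not is_healthy(canary, stable):
            return ("rollback", 0)   # send all traffic back to the old version
    return ("promote", 100)          # every step passed: shift all traffic
```

For example, `run_rollout([5, 25, 50], fetch_metrics)` would check the canary at 5%, 25%, and 50% of the traffic, and only promote it if every step stays within the thresholds.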

And to manage the overall process, you can use a continuous deployment tool to put some governance around it, with options like Spinnaker or one of the extensions for continuous integration tools like GitLab or GitHub:

Summary

In this article, we covered how to evolve a traditional deployment model to keep pace with the innovation that businesses require today, how canary deployment techniques can help us on that journey, and the technology components needed to set up this strategy in your own environment.