Introduction

Since the early versions of Kubernetes, Ingress has been the most common way to expose HTTP applications outside the cluster. Its initial design was simple and elegant, but the success of Kubernetes and the growing complexity of real-world use cases have turned it into a pain point: limited in features, inconsistent between vendors, and difficult to govern in enterprise environments.

In this article, we analyze why Ingresses have become a constant source of friction, how different Ingress Controllers have influenced this situation, and why more and more organizations are considering alternatives like Gateway API.

What Ingresses are and why they were designed this way

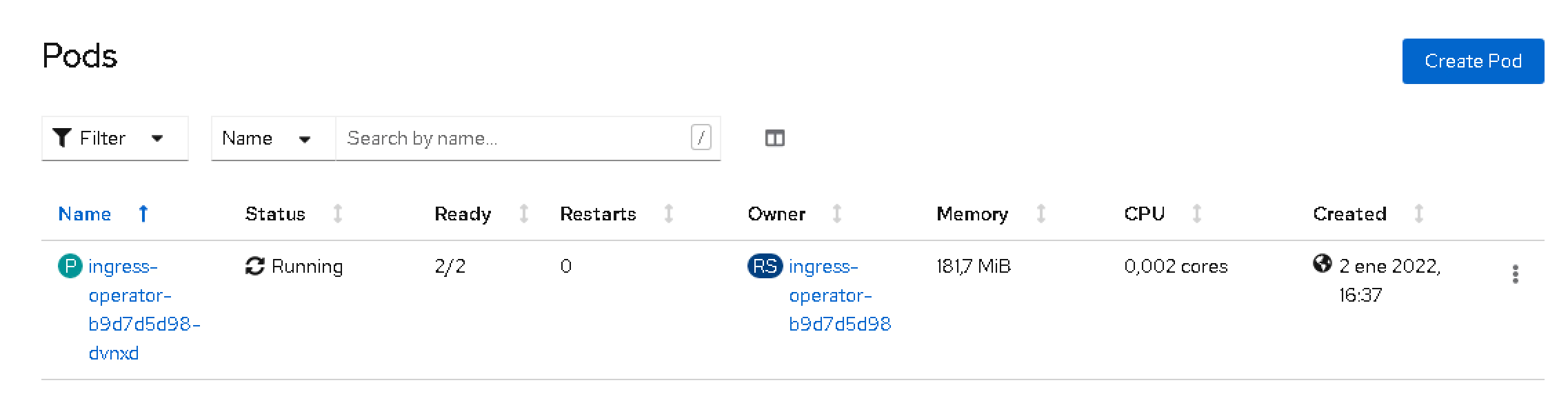

The Ingress ecosystem revolves around two main resources:

🏷️ IngressClass

Defines which controller will manage the associated Ingresses. Its scope is cluster-wide, so it is usually managed by the platform team.

🌐 Ingress

The resource developers use to expose a service. It lets them define hosts, path-based routes, and TLS certificates for HTTP(S) traffic, and little else.

Its specification is minimal by design, which allowed for rapid adoption, but also laid the foundation for current problems.
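As a rough sketch of how the two resources fit together, the manifests below are written as plain Python dictionaries that mirror the `networking.k8s.io/v1` schema. All names (`corp-nginx`, `shop`, `shop.example.com`, `shop-tls`) are hypothetical, and the controller string assumes the community ingress-nginx controller.

```python
import json

# Cluster-scoped resource, typically owned by the platform team.
# The controller value assumes ingress-nginx; other controllers use their own string.
ingress_class = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "IngressClass",
    "metadata": {"name": "corp-nginx"},  # hypothetical name
    "spec": {"controller": "k8s.io/ingress-nginx"},
}

# Namespaced resource, typically owned by the application team.
ingress = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "Ingress",
    "metadata": {"name": "shop", "namespace": "shop"},  # hypothetical names
    "spec": {
        # Links this Ingress to the IngressClass above.
        "ingressClassName": ingress_class["metadata"]["name"],
        "tls": [{"hosts": ["shop.example.com"], "secretName": "shop-tls"}],
        "rules": [{
            "host": "shop.example.com",
            "http": {"paths": [{
                "path": "/",
                "pathType": "Prefix",
                "backend": {"service": {"name": "shop", "port": {"number": 80}}},
            }]},
        }],
    },
}

if __name__ == "__main__":
    # kubectl accepts JSON as well as YAML, so this output could be applied as-is.
    print(json.dumps(ingress, indent=2))
```

The three fields used here (`ingressClassName`, `tls`, `rules`) are nearly the whole Ingress spec; the only other top-level field is `defaultBackend`. There is nowhere to express a timeout, a rewrite, or a CORS policy.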

The problem: a standard too simple for complex needs

As Kubernetes became an enterprise standard, users wanted to replicate advanced configurations of traditional proxies: rewrites, timeouts, custom headers, CORS, etc.

But the Ingress specification has no fields for any of this.

Vendors reacted… and chaos was born.

Annotations vs CRDs: two incompatible paths

Different Ingress Controllers have taken very different paths to add advanced capabilities:

📝 Annotations (NGINX, HAProxy…)

Advantages:

- Flexible and easy to use

- Directly in the Ingress resource

Disadvantages:

- Hundreds of proprietary annotations

- Fragmented documentation

- Non-portable configurations between vendors

📦 Custom CRDs (Traefik, Kong…)

Advantages:

- More structured and powerful

- Better validation and control

Disadvantages:

- Adds new non-standard objects

- Requires installation and management

- Less interoperability

Result?

Infrastructures deeply coupled to a vendor, complicating migrations, audits, and automation.
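To make the coupling concrete, here is a minimal sketch that expresses the same intent (strip an `/api` prefix before forwarding) in both styles and then reports which controller each Ingress is tied to. The manifests are trimmed to the relevant fields, the resource names are hypothetical, and the prefix table is an assumption for illustration; each controller's documentation is the authority on its annotation keys.

```python
# Annotation prefixes assumed for illustration; verify against each controller's docs.
VENDOR_PREFIXES = {
    "nginx.ingress.kubernetes.io/": "ingress-nginx",
    "haproxy.org/": "HAProxy",
    "traefik.ingress.kubernetes.io/": "Traefik",
    "konghq.com/": "Kong",
}

def vendor_lock_in(ingress):
    """Group an Ingress's annotation keys by the controller that understands them."""
    found = {}
    annotations = ingress.get("metadata", {}).get("annotations") or {}
    for key in annotations:
        for prefix, vendor in VENDOR_PREFIXES.items():
            if key.startswith(prefix):
                found.setdefault(vendor, []).append(key)
    return found

# Annotation style: behaviour is encoded as untyped strings on the Ingress itself.
nginx_style = {
    "kind": "Ingress",
    "metadata": {
        "name": "api",
        "annotations": {
            "nginx.ingress.kubernetes.io/use-regex": "true",
            "nginx.ingress.kubernetes.io/rewrite-target": "/$2",
            "nginx.ingress.kubernetes.io/proxy-read-timeout": "30",
        },
    },
}

# CRD style: behaviour lives in a separate, typed object...
traefik_middleware = {
    "apiVersion": "traefik.io/v1alpha1",
    "kind": "Middleware",
    "metadata": {"name": "strip-api", "namespace": "shop"},
    "spec": {"stripPrefix": {"prefixes": ["/api"]}},
}

# ...which the Ingress still has to reference through a vendor annotation.
traefik_style = {
    "kind": "Ingress",
    "metadata": {
        "name": "api",
        "annotations": {
            "traefik.ingress.kubernetes.io/router.middlewares": "shop-strip-api@kubernetescrd",
        },
    },
}

if __name__ == "__main__":
    for manifest in (nginx_style, traefik_style):
        print(vendor_lock_in(manifest))
```

Neither manifest means anything to the other controller, so switching vendors means rewriting rather than re-applying.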

The complexity for development teams

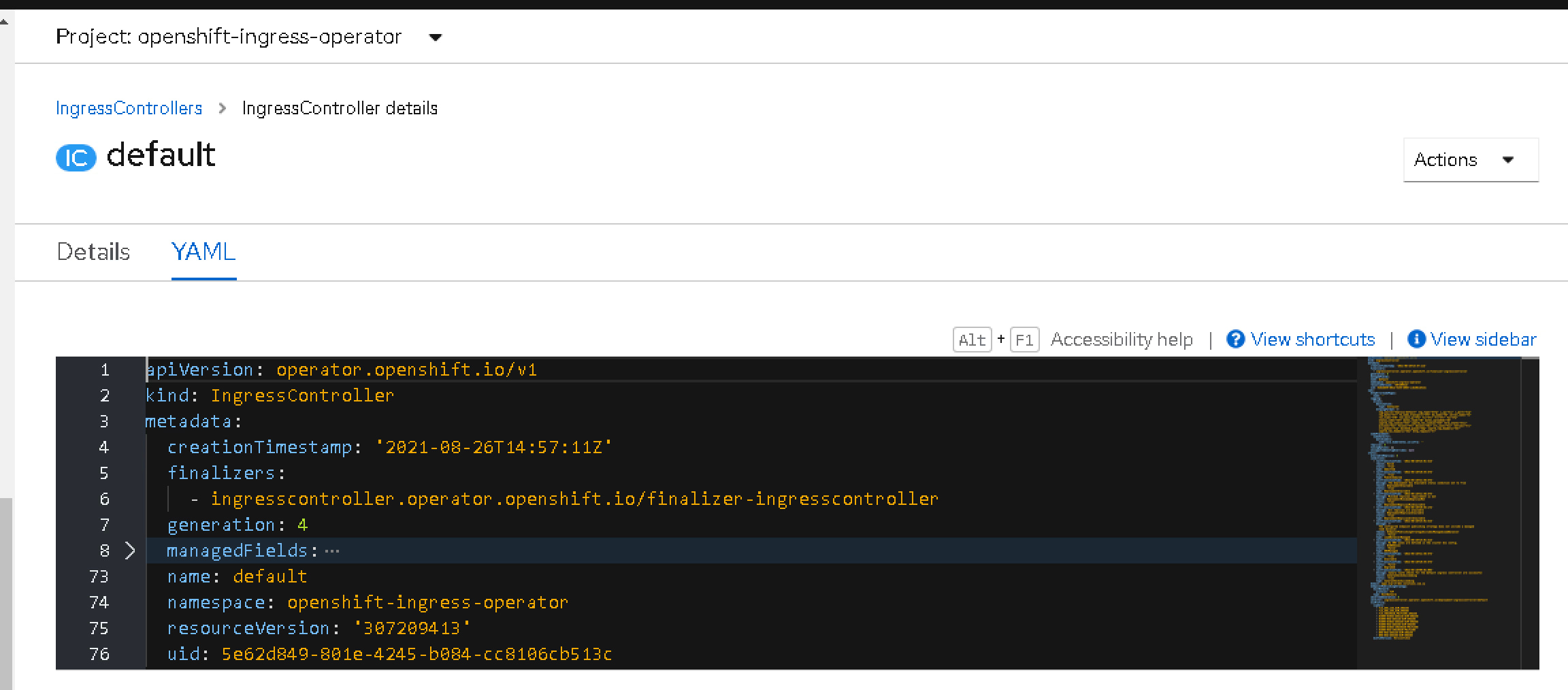

The design of Ingress implies two very different responsibilities:

- Platform: defines IngressClass

- Application: defines Ingress

In practice, however, developers end up making decisions that should belong to the platform team:

- Certificates

- Security policies

- Rewrite rules

- CORS

- Timeouts

- Corporate naming practices

This causes:

- Inconsistent configurations

- Bottlenecks in reviews

- Constant dependency between teams

- Lack of effective standardization

In large companies, where security and governance are critical, this is especially problematic.
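One way a platform team might claw back some of that control is an automated review step. The sketch below is a hypothetical policy check, not a feature of Kubernetes or of any controller: the list of platform-owned annotations and the `.example.com` naming rule are assumptions chosen to mirror the concerns listed above.

```python
# Hypothetical policy: ingress-nginx annotations that only the platform team may set.
PLATFORM_OWNED = {
    "nginx.ingress.kubernetes.io/enable-cors": "CORS",
    "nginx.ingress.kubernetes.io/proxy-read-timeout": "Timeouts",
    "nginx.ingress.kubernetes.io/rewrite-target": "Rewrite rules",
    "nginx.ingress.kubernetes.io/configuration-snippet": "Security policies",
}
ALLOWED_HOST_SUFFIX = ".example.com"  # hypothetical corporate naming rule

def review_ingress(ingress):
    """Return human-readable findings; an empty list means nothing to flag."""
    findings = []
    annotations = ingress.get("metadata", {}).get("annotations") or {}
    for key in sorted(annotations):
        if key in PLATFORM_OWNED:
            findings.append(f"{PLATFORM_OWNED[key]}: '{key}' is set by the application")
    spec = ingress.get("spec", {})
    for rule in spec.get("rules", []):
        host = rule.get("host", "")
        if not host.endswith(ALLOWED_HOST_SUFFIX):
            findings.append(f"Naming: host '{host}' is outside *{ALLOWED_HOST_SUFFIX}")
    for tls in spec.get("tls", []):
        if "secretName" in tls:
            findings.append(
                f"Certificates: application references secret '{tls['secretName']}'"
            )
    return findings

# An Ingress as an application team might realistically submit it.
submitted = {
    "metadata": {
        "name": "shop",
        "annotations": {
            "nginx.ingress.kubernetes.io/enable-cors": "true",
            "nginx.ingress.kubernetes.io/proxy-read-timeout": "120",
        },
    },
    "spec": {
        "tls": [{"hosts": ["shop.internal"], "secretName": "shop-tls"}],
        "rules": [{"host": "shop.internal"}],
    },
}

if __name__ == "__main__":
    for finding in review_ingress(submitted):
        print(finding)
```

A check like this could run in CI or behind an admission webhook, but it only detects the problem. The decisions themselves still sit in a resource that the application team owns.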

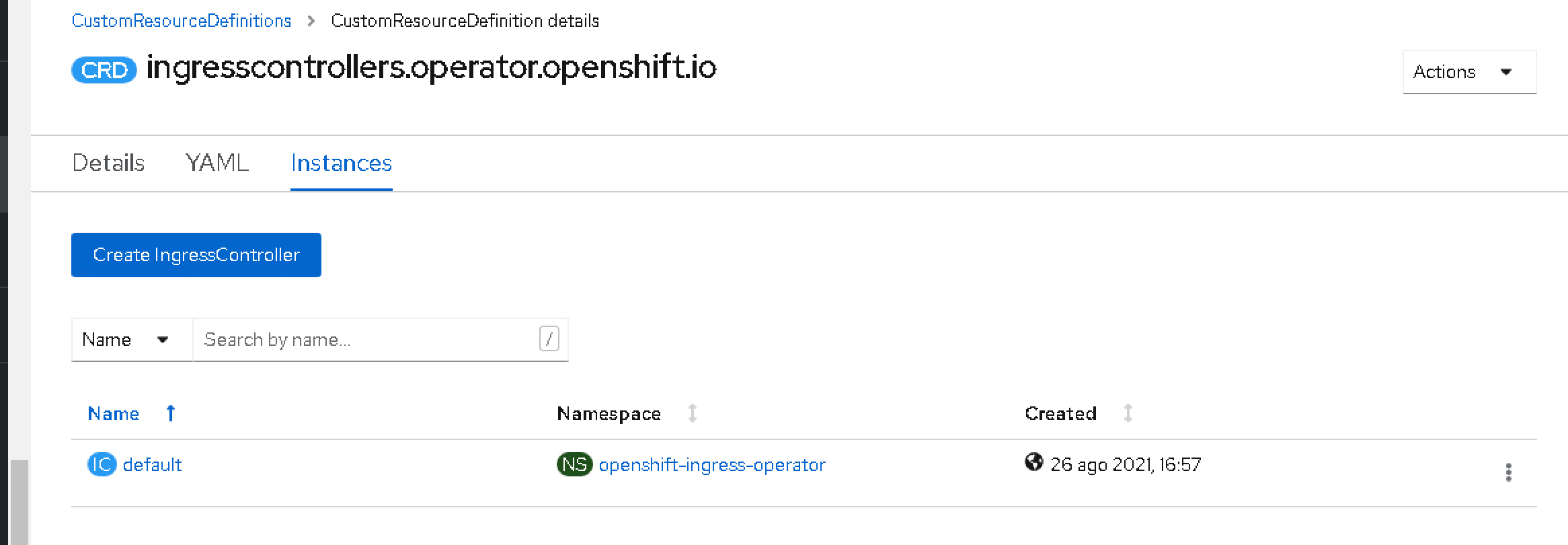

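ingress-nginx: the retirement that reignited the debate

The recently announced retirement of ingress-nginx, the community-maintained NGINX-based Ingress controller (not to be confused with F5's NGINX Ingress Controller), has highlighted the fragility of the ecosystem: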

- Thousands of clusters depend on it

- Multiple projects use its annotations

- Migrating involves rewriting entire configurations

This has reignited the conversation about the need for a real standard, and this is where Gateway API comes in.

Gateway API: a promising alternative (but not perfect)

Gateway API was born to solve many of the limitations of Ingress:

- Clear separation of responsibilities (infrastructure vs application)

- Standardized extensibility

- More types of routes (HTTPRoute, TCPRoute…)

- Greater expressiveness without relying on proprietary annotations

But it also brings challenges:

- Requires gradual adoption

- Not all vendors implement the same set of features

- Migration is not trivial

Even so, it is shaping up to be the future of traffic management in Kubernetes.
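The sketch below illustrates both halves of that picture: the portable part of an Ingress maps almost mechanically onto `HTTPRoute` objects, while everything vendor-specific is left over for manual work. It is a simplified illustration under stated assumptions, not a migration tool (the kubernetes-sigs `ingress2gateway` project exists for that purpose). The function name, the `shared-gateway` Gateway, and the `infra` namespace are hypothetical.

```python
# Ingress pathType -> HTTPRoute path match type. ImplementationSpecific has no equivalent.
PATH_TYPES = {"Prefix": "PathPrefix", "Exact": "Exact"}

def ingress_to_httproutes(ingress, gateway_name, gateway_namespace):
    """Translate the portable part of an Ingress into one HTTPRoute per rule.

    Returns (routes, manual), where manual lists what still needs human attention.
    """
    meta = ingress["metadata"]
    manual = [f"annotation {key}" for key in sorted(meta.get("annotations") or {})]
    if ingress["spec"].get("tls"):
        manual.append("tls: certificates move to the Gateway listener")

    routes = []
    for index, rule in enumerate(ingress["spec"].get("rules", [])):
        route_rules = []
        for p in rule.get("http", {}).get("paths", []):
            match_type = PATH_TYPES.get(p["pathType"])
            port = p["backend"]["service"]["port"].get("number")
            if match_type is None:
                manual.append(f"path {p['path']}: pathType {p['pathType']} has no direct equivalent")
                continue
            if port is None:
                manual.append(f"path {p['path']}: named service port must be resolved to a number")
                continue
            route_rules.append({
                "matches": [{"path": {"type": match_type, "value": p["path"]}}],
                "backendRefs": [{"name": p["backend"]["service"]["name"], "port": port}],
            })
        route = {
            "apiVersion": "gateway.networking.k8s.io/v1",
            "kind": "HTTPRoute",
            "metadata": {
                "name": f"{meta['name']}-{index}",
                "namespace": meta.get("namespace", "default"),
            },
            "spec": {
                # The Gateway is owned by the platform team; the route only attaches to it.
                "parentRefs": [{"name": gateway_name, "namespace": gateway_namespace}],
                "rules": route_rules,
            },
        }
        if rule.get("host"):
            route["spec"]["hostnames"] = [rule["host"]]
        routes.append(route)
    return routes, manual

# Hypothetical input: an Ingress that relies on one ingress-nginx annotation.
example_ingress = {
    "metadata": {
        "name": "shop",
        "namespace": "shop",
        "annotations": {"nginx.ingress.kubernetes.io/rewrite-target": "/"},
    },
    "spec": {
        "tls": [{"hosts": ["shop.example.com"], "secretName": "shop-tls"}],
        "rules": [{
            "host": "shop.example.com",
            "http": {"paths": [{
                "path": "/",
                "pathType": "Prefix",
                "backend": {"service": {"name": "shop", "port": {"number": 80}}},
            }]},
        }],
    },
}

if __name__ == "__main__":
    routes, manual = ingress_to_httproutes(example_ingress, "shared-gateway", "infra")
    print(routes[0]["spec"])
    print(manual)
```

The separation of responsibilities shows up in the output: the route only names a parent Gateway, while listeners and certificates stay with whoever owns that Gateway. Attaching from another namespace also requires the Gateway's `allowedRoutes` setting to permit it. The `manual` list is where the non-trivial part of a migration lives.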

Conclusion

Ingresses have been fundamental to the success of Kubernetes, but their own simplicity has led them to become a bottleneck. The lack of interoperability, differences between vendors, and complex governance in enterprise environments make it clear that it is time to adopt more mature models.

Gateway API is not perfect, but it moves in the right direction.

Organizations that want future stability should start planning their transition.
