Introduction

OpenShift, Red Hat’s Kubernetes platform, has its own way of exposing services to external clients. In vanilla Kubernetes, you would typically use an Ingress resource along with an ingress controller to route external traffic to services. OpenShift, however, introduced the concept of a Route and an integrated Router (built on HAProxy) early on, before Kubernetes Ingress even existed. Today, OpenShift supports both Routes and standard Ingress objects, which can sometimes lead to confusion about when to use each and how they relate.

This article explores how OpenShift handles Kubernetes Ingress resources, how they translate to Routes, the limitations of this approach, and guidance on when to use Ingress versus Routes.

OpenShift Routes and the Router: A Quick Overview

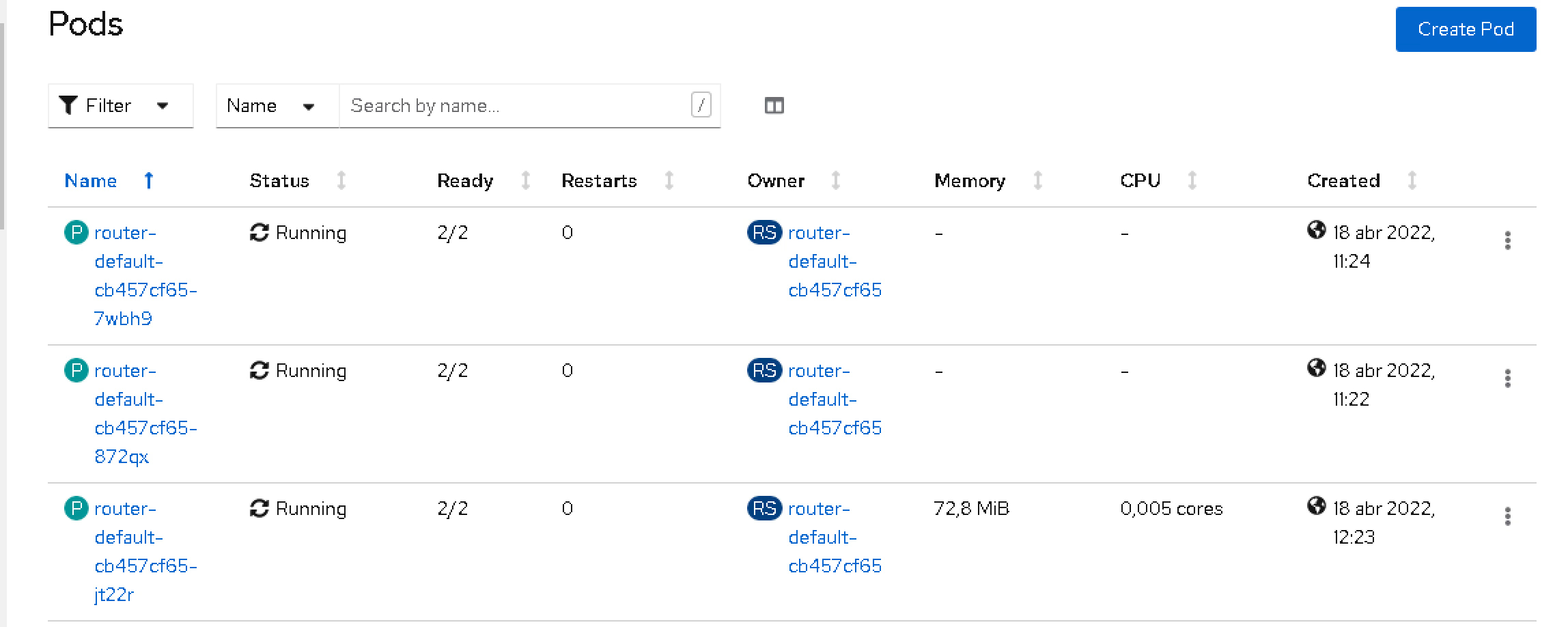

OpenShift Routes are OpenShift-specific resources designed to expose services externally. They are served by the OpenShift Router, which is an HAProxy-based proxy running inside the cluster. Routes support advanced features such as:

- Weighted backends for traffic splitting

- Sticky sessions (session affinity)

- Multiple TLS termination modes (edge, passthrough, re-encrypt)

- Wildcard subdomains

- Custom certificates and SNI

- Path-based routing

Because Routes are OpenShift-native, the Router understands these features natively and can be configured accordingly. This tight integration enables powerful and flexible routing capabilities tailored to OpenShift environments.
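As an illustration of a Route-only feature, the sketch below splits traffic between two Services with the Route's alternateBackends field. The route name, the Service names (app-v1, app-v2), the port, and the 90/10 weights are assumptions made up for this example.

```yaml
# Hypothetical canary Route: weights are relative, so about 90% of
# requests go to app-v1 and about 10% to app-v2.
apiVersion: route.openshift.io/v1
kind: Route
metadata:
  name: canary-example          # assumed name
spec:
  host: www.example.com
  to:
    kind: Service
    name: app-v1                # assumed primary Service
    weight: 90
  alternateBackends:
  - kind: Service
    name: app-v2                # assumed canary Service
    weight: 10
  port:
    targetPort: 8080            # assumed Service port
  tls:
    termination: edge
    insecureEdgeTerminationPolicy: Redirect
```

Shifting more traffic to the canary is then a matter of adjusting the two weight values.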

Using Kubernetes Ingress in OpenShift (Default Behavior)

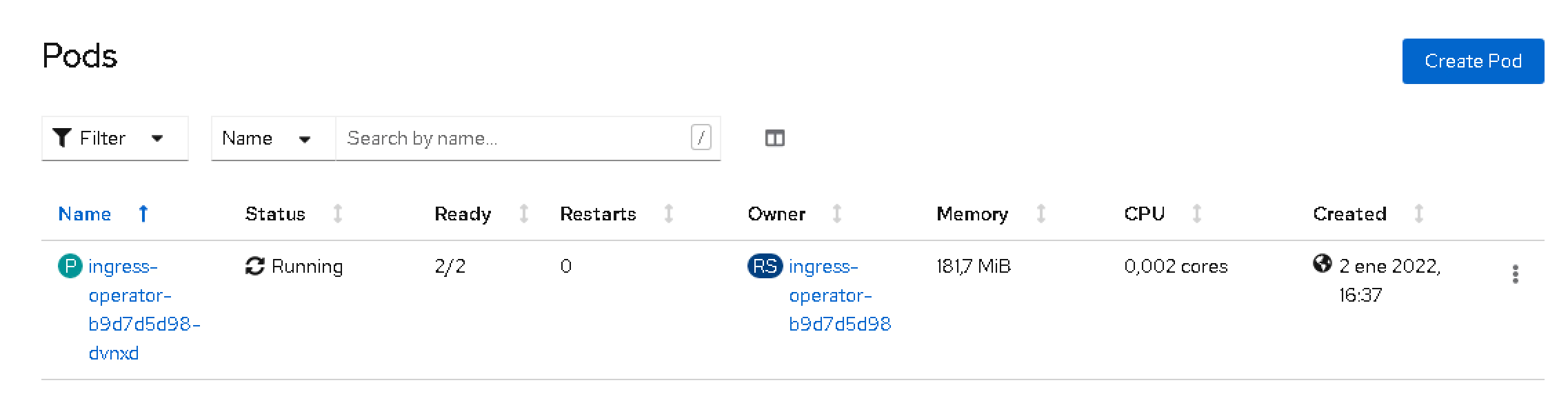

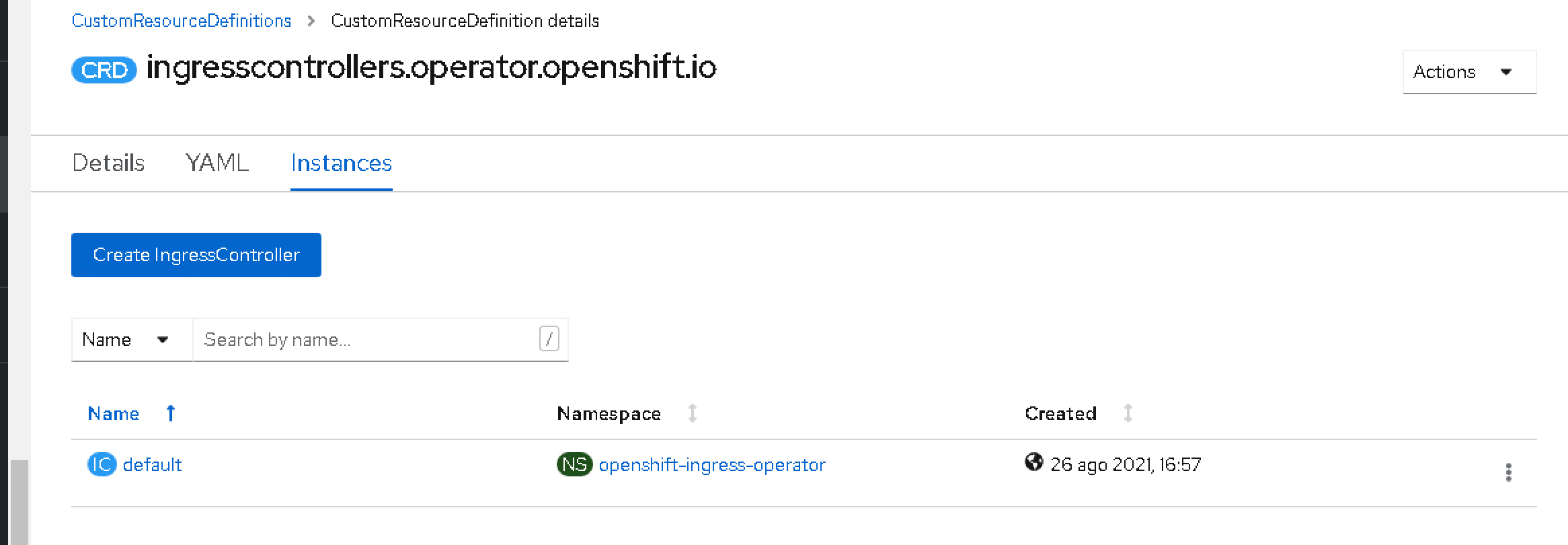

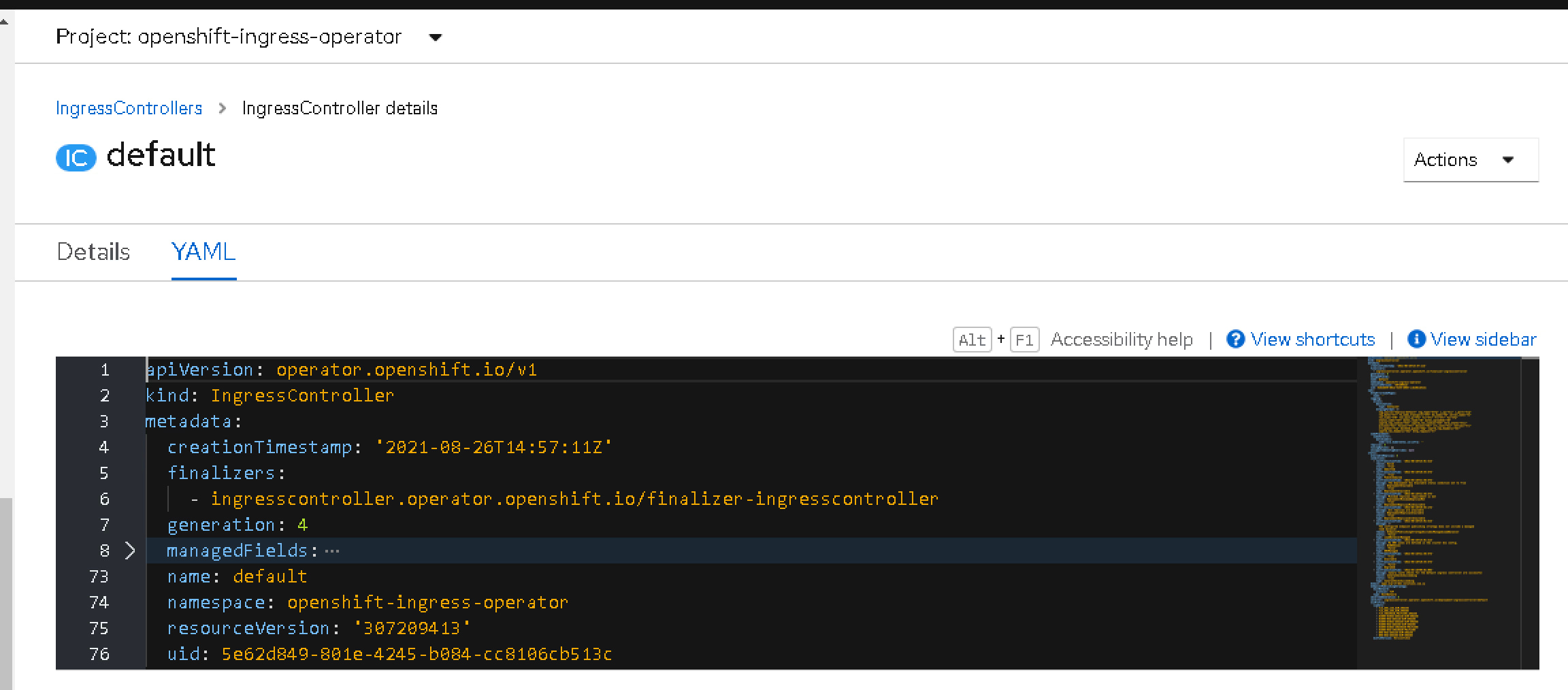

Starting with OpenShift Container Platform (OCP) 3.10, Kubernetes Ingress resources are supported. When you create an Ingress, OpenShift automatically translates it into an equivalent Route behind the scenes. This means you can use standard Kubernetes Ingress manifests, and OpenShift will handle exposing your services externally by creating Routes accordingly.

Example: Kubernetes Ingress and Resulting Route

Here is a simple Ingress manifest:

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: example-ingress
      annotations:
        nginx.ingress.kubernetes.io/rewrite-target: /
    spec:
      rules:
      - host: www.example.com
        http:
          paths:
          - path: /testpath
            pathType: Prefix
            backend:
              service:
                name: test-service
                port:
                  number: 80

OpenShift will create a Route similar to:

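    apiVersion: route.openshift.io/v1
    kind: Route
    metadata:
      name: example-ingress-x7k2p  # illustrative; the generated name is the Ingress name plus a random suffix
    spec:
      host: www.example.com
      path: /testpath
      to:
        kind: Service
        name: test-service
        weight: 100
      port:
        targetPort: 80
      # No tls section here: the Ingress above defines no spec.tls, so the Route serves plain HTTP.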

This automatic translation simplifies migration and supports basic use cases without requiring Route-specific manifests.

Tuning Behavior with Annotations (Ingress ➝ Route)

When you use Ingress on OpenShift, only OpenShift-aware annotations are honored during the Ingress ➝ Route translation. Controller-specific annotations for other ingress controllers (e.g., nginx.ingress.kubernetes.io/*) are ignored by the OpenShift Router. The following annotations are commonly used and supported by the OpenShift router to tweak the generated Route:

| Purpose | Annotation | Typical Values | Effect on Generated Route |
|---|---|---|---|
| TLS termination | route.openshift.io/termination | edge · reencrypt · passthrough | Sets Route spec.tls.termination to the chosen mode. |
| HTTP→HTTPS redirect (edge) | route.openshift.io/insecureEdgeTerminationPolicy | Redirect · Allow · None | Controls spec.tls.insecureEdgeTerminationPolicy (commonly Redirect). |
| Backend load-balancing | haproxy.router.openshift.io/balance | roundrobin · leastconn · source | Sets HAProxy balancing algorithm for the Route. |
| Per-route timeout | haproxy.router.openshift.io/timeout | duration like 60s, 5m | Configures HAProxy timeout for requests on that Route. |
| HSTS header | haproxy.router.openshift.io/hsts_header | e.g. max-age=31536000;includeSubDomains;preload | Injects HSTS header on responses (edge/re-encrypt). |

Note: Advanced features like weighted backends/canary or wildcard hosts are not expressible via standard Ingress. Use a Route directly for those.

Example: Ingress with OpenShift router annotations

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: example-ingress-https
      annotations:
        route.openshift.io/termination: edge
        route.openshift.io/insecureEdgeTerminationPolicy: Redirect
        haproxy.router.openshift.io/balance: leastconn
        haproxy.router.openshift.io/timeout: 60s
        haproxy.router.openshift.io/hsts_header: max-age=31536000;includeSubDomains;preload
    spec:
      # An empty TLS entry requests a secured Route that uses the router's default certificate.
      tls:
      - {}
      rules:
      - host: www.example.com
        http:
          paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: test-service
                port:
                  number: 80

This Ingress will be realized as a Route with edge TLS and an automatic HTTP→HTTPS redirect, using least connections balancing and a 60s route timeout. The HSTS header will be added by the router on HTTPS responses.
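To serve a custom certificate rather than the router default, the Ingress can reference a TLS Secret in spec.tls. The following is a minimal sketch, assuming the generated Route takes its certificate from that Secret; the Ingress name and the Secret name example-com-tls are hypothetical, and the Secret would need to be of type kubernetes.io/tls in the same namespace.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: example-ingress-custom-cert   # assumed name
  annotations:
    route.openshift.io/termination: edge
spec:
  tls:
  - hosts:
    - www.example.com
    secretName: example-com-tls       # hypothetical kubernetes.io/tls Secret
  rules:
  - host: www.example.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: test-service
            port:
              number: 80
```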

Limitations of Using Ingress to Generate Routes

While convenient, using Ingress to generate Routes has limitations:

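- Missing advanced features: Weighted backends rely on the Route's alternateBackends field, which has no counterpart in the Ingress spec, and sticky-session tuning relies on OpenShift-specific annotations rather than anything in the Ingress API.

- TLS passthrough and re-encrypt modes: The Ingress API has no field for these. They are reachable only through the OpenShift-specific route.openshift.io/termination annotation, so an Ingress that uses them is no longer portable.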

- Ingress without host: An Ingress without a hostname will not create a Route; Routes require a host.

- Wildcard hosts: Wildcard hosts (e.g., *.example.com) are only supported via Routes, not Ingress.

- Annotation compatibility: Some OpenShift Route annotations do not have equivalents in Ingress, leading to configuration gaps.

- Protocol support: Ingress supports only HTTP/HTTPS protocols, while Routes can handle non-HTTP protocols with passthrough TLS.

- Config drift risk: Because Routes created from Ingress are managed by OpenShift, manual edits to the generated Route may be overwritten or cause inconsistencies.

These limitations mean that for advanced routing configurations or OpenShift-specific features, using Routes directly is preferable.
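Two of the Route-only cases above can be sketched directly as Route manifests. The route names, hosts, Service names, and ports below are assumptions chosen for illustration.

```yaml
# Passthrough: the router forwards the encrypted stream untouched, so the
# backend must terminate TLS itself and path-based routing is unavailable.
apiVersion: route.openshift.io/v1
kind: Route
metadata:
  name: passthrough-example     # assumed name
spec:
  host: secure.example.com      # assumed host
  to:
    kind: Service
    name: tls-backend           # assumed Service that terminates TLS
  port:
    targetPort: 8443            # assumed Service port
  tls:
    termination: passthrough
---
# Wildcard: with wildcardPolicy Subdomain, the Route also matches other
# hosts under example.com. The router must be configured to admit
# wildcard routes for this to take effect.
apiVersion: route.openshift.io/v1
kind: Route
metadata:
  name: wildcard-example        # assumed name
spec:
  host: wildcard.example.com
  wildcardPolicy: Subdomain
  to:
    kind: Service
    name: test-service
  port:
    targetPort: 80
```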

When to Use Ingress vs. When to Use Routes

Choosing between Ingress and Routes depends on your requirements:

- Use Ingress if:
  - You want portability across Kubernetes platforms.
  - You have existing Ingress manifests and want to minimize changes.
  - Your application uses only basic HTTP or HTTPS routing.
  - You prefer platform-neutral manifests for CI/CD pipelines.

- Use Routes if:
  - You need advanced routing features like weighted backends, sticky sessions, or multiple TLS termination modes.
  - Your deployment is OpenShift-specific and can leverage OpenShift-native features.
  - You require stability and full support for OpenShift routing capabilities.
  - You need to expose non-HTTP protocols or use TLS passthrough/re-encrypt modes.
  - You want to use wildcard hosts or custom annotations not supported by Ingress.

In many cases, teams use a combination: Ingress for portability and Routes for advanced or OpenShift-specific needs.

Conclusion

On OpenShift, Kubernetes Ingress resources are automatically converted into Routes, enabling basic external service exposure with minimal effort. This allows users to leverage existing Kubernetes manifests and maintain portability. However, for advanced routing scenarios and to fully utilize OpenShift’s powerful Router features, using Routes directly is recommended.

Both Ingress and Routes coexist seamlessly on OpenShift, allowing you to choose the right tool for your application’s requirements.
