Learn some tips for creating your API efficiently while still getting the actual work done.

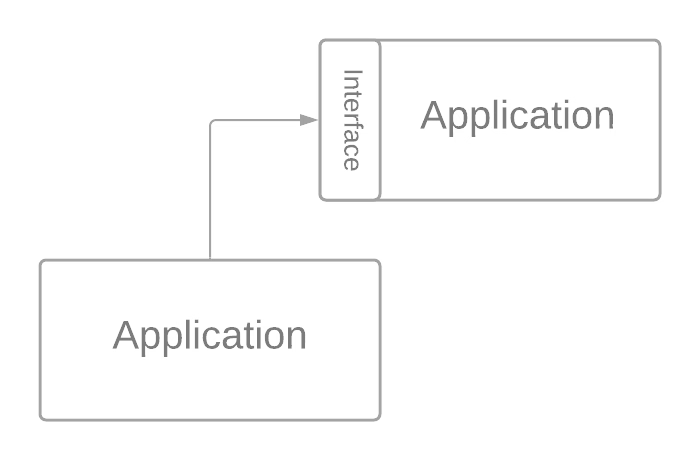

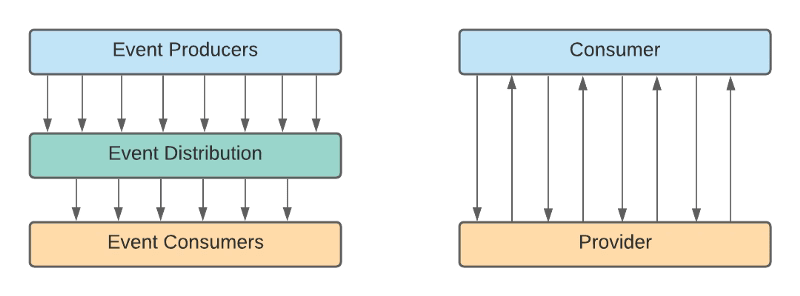

When creating an API to expose a capability or integrate different systems, there are two main ways to do it: the contract-first approach or the contract-last approach. The difference lies in the methodology you follow to create the API.

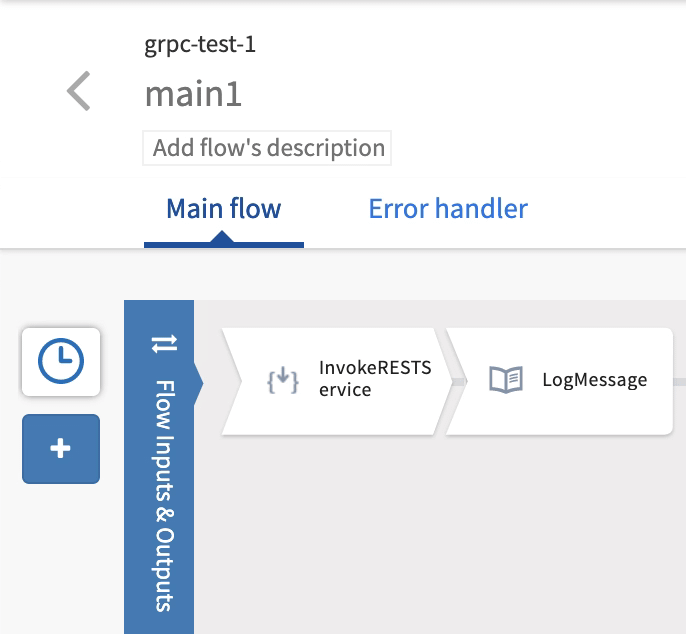

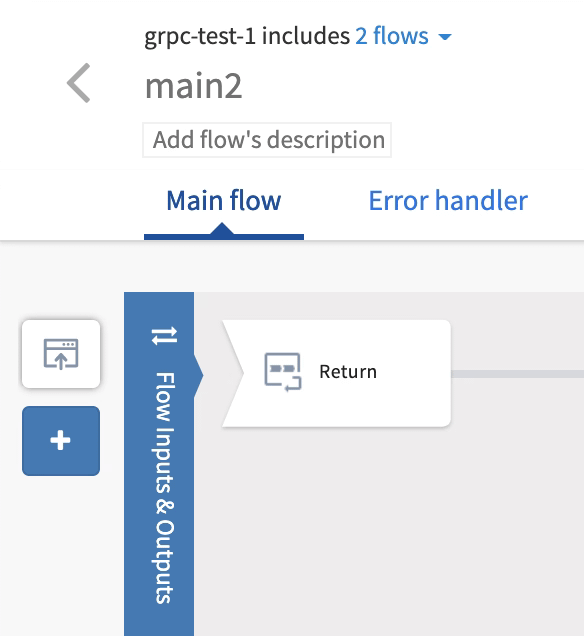

In a contract-first approach, the definition of the contract is the starting point. It does not matter which language or technology you are using. This has been true since the early days of distributed systems, in the times of RMI and CORBA, and it remains true in the era of gRPC and GraphQL.

You start with the definition of the contract between both parties: the one that exposes the capability and the initial consumer of the information. That means defining several aspects of the contract:

- Purpose of the operations.

- Fields that each operation has.

- Return information depending on each scenario.

- Error information reported, and so on.

After that, you will start to design the API itself and the implementation to meet the definition agreed between the parties.
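As a minimal sketch of what such a contract could look like before any implementation exists, the aspects listed above can be written down as plain types. Every name here (`CustomerApi`, `GetCustomerRequest`, `Customer`, `ApiError`, and the `NOT_FOUND` code) is hypothetical and chosen only for illustration; a real project might express the same agreement in OpenAPI, a gRPC `.proto` file, or a GraphQL schema instead.

```python
from dataclasses import dataclass
from typing import Protocol, Union


@dataclass(frozen=True)
class GetCustomerRequest:
    """Fields that the operation accepts."""
    customer_id: str


@dataclass(frozen=True)
class Customer:
    """Information returned in the success scenario."""
    customer_id: str
    name: str
    email: str


@dataclass(frozen=True)
class ApiError:
    """Error information reported to the consumer."""
    code: str      # e.g. "NOT_FOUND" (hypothetical error code)
    message: str


class CustomerApi(Protocol):
    """The agreed contract: only the shape, no implementation."""

    def get_customer(self, request: GetCustomerRequest) -> Union[Customer, ApiError]:
        """Purpose: look up one customer by its identifier."""
        ...
```

Both parties can review this file and agree on it before anyone writes the service or the client.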

This approach has several advantages and disadvantages, but today it is the most widely accepted way of developing APIs. Its main advantages are the following:

- Reducing Rework Activities: Because you start by defining the contract, you can quickly validate that all parties are OK with it before writing any implementation. That avoids re-coding or rework caused by a misunderstanding or a shift in expectations, which makes the process more efficient.

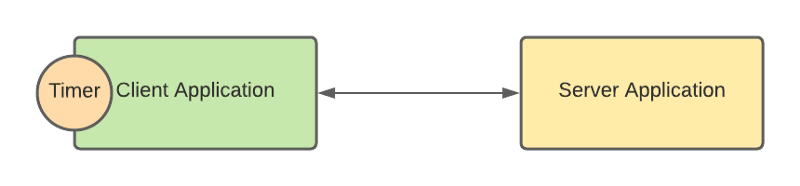

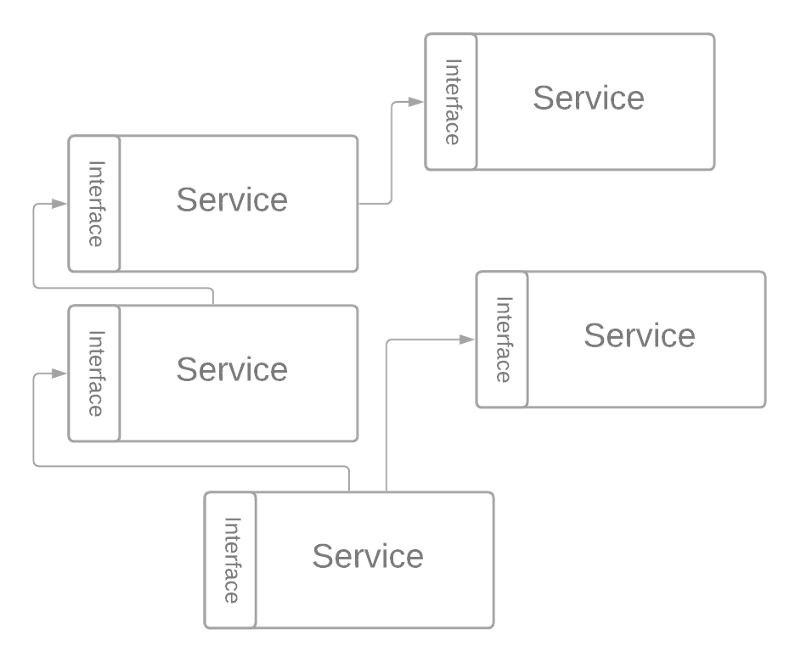

- Separation of Duties: It also separates the duties of the two parties, the provider and the consumers, because as soon as you have the contract, both teams can start working against it. You can even provide a mock so the consumer can test any scenario quickly without waiting for the actual service to be created.
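To illustrate the mock mentioned in the last point, here is a rough sketch of a stand-in service that a consumer team could code against while the provider builds the real one. The class `MockCustomerApi`, the `greeting` helper, and the canned data are all assumptions made up for this example.

```python
from dataclasses import dataclass
from typing import Dict, Union


@dataclass(frozen=True)
class Customer:
    customer_id: str
    name: str


@dataclass(frozen=True)
class ApiError:
    code: str
    message: str


class MockCustomerApi:
    """Stand-in for the real service, returning canned data that follows the contract."""

    def __init__(self) -> None:
        self._customers: Dict[str, Customer] = {
            "42": Customer(customer_id="42", name="Ada Example"),
        }

    def get_customer(self, customer_id: str) -> Union[Customer, ApiError]:
        customer = self._customers.get(customer_id)
        if customer is None:
            # Error scenario agreed in the contract (hypothetical code).
            return ApiError(code="NOT_FOUND", message=f"No customer {customer_id}")
        return customer


def greeting(api: MockCustomerApi, customer_id: str) -> str:
    """Consumer-side code that can be written and tested against the mock."""
    result = api.get_customer(customer_id)
    if isinstance(result, ApiError):
        return f"Error: {result.code}"
    return f"Hello, {result.name}"


print(greeting(MockCustomerApi(), "42"))  # success scenario
print(greeting(MockCustomerApi(), "7"))   # error scenario
```

The consumer can exercise both the success and the error scenario right away, and later swap the mock for the real client without changing `greeting`, as long as the contract holds.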

But to be successful, the contract-first approach relies on some requirements or assumptions that are not easy to meet in a real-world scenario. This is to be expected: many of the methodologies, tips, and pieces of advice you learn while studying do not apply in real life. To validate that claim, let me ask you a question:

Have you ever created an API whose initial interface was 100% the same as the one you had at the end?

In my case, the answer to that question is “No, never.” You may think that I am a lousy API designer, and you may be right. I am sure that most people reading this article define their contracts much better than I do, but that is not the point. During the implementation phase, we usually discover something we did not think about in the design phase. Or, when we work on the low-level design, concepts we had not contemplated make a different solution the best fit for the scenario. Either way, the API is impacted, and that has a cost.

You can mitigate that risk by spending more time on the contract definition phase to make sure that nothing is overlooked, or even by creating some prototypes to confirm that the resulting API will be the final one. But doing so only lowers the probability of a change, never removes it, and at the same time reduces the benefits of the approach.

One of the key points mentioned above was efficiency. If you spend more time on the definition phase, the approach becomes less efficient. We also praised the separation of duties, but as the time needed to create the interface grows, so does the time both teams must wait before they can start working on their parts.

But the other approach, contract-last, does not offer much benefit either. It can lead to even more expensive work because you get no validation from the customer until the API is implemented. And again, another question:

Have you ever shared something with your customer for the first time without them asking for any change?

Again, the answer is the same: “No, never.” And that cost will always be higher than the cost of changing the definition because, as you know, a change becomes much more costly the later you detect it in the development cycle. The increase is not linear; it is much closer to exponential.

So, what is my recommendation here? Follow the contract-first approach and accept real life. Give the API definition your best shot and reach an agreement between the parties, and if you detect something that can impact the API, notify the other parties as soon as possible. In the end, this is nothing more than an iterative approach applied to the API definition as well, and there is nothing wrong with it.
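One lightweight way to support that early notification is to compare the agreed contract with the version you now think you need and share the differences. The sketch below assumes a contract is described as a simple mapping from field name to type name; that format and the function name `contract_changes` are made up for this example and do not come from any particular tool.

```python
from typing import Dict, List

# A contract version described as field name -> type name (hypothetical format).
Contract = Dict[str, str]


def contract_changes(agreed: Contract, proposed: Contract) -> List[str]:
    """List the differences the other party should be notified about."""
    changes: List[str] = []
    for field in sorted(agreed.keys() - proposed.keys()):
        changes.append(f"removed: {field}")
    for field in sorted(proposed.keys() - agreed.keys()):
        changes.append(f"added: {field}")
    for field in sorted(agreed.keys() & proposed.keys()):
        if agreed[field] != proposed[field]:
            changes.append(f"changed: {field} ({agreed[field]} -> {proposed[field]})")
    return changes


agreed = {"customer_id": "string", "name": "string"}
proposed = {"customer_id": "string", "name": "string", "email": "string"}

print(contract_changes(agreed, proposed))  # ['added: email']
```

An empty list means the implementation work has not touched the agreement; anything else is a prompt to talk to the other team before continuing.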

Let’s be honest: there is no silver bullet that will give you a smooth path in your daily work, and that is the great thing about it and why we enjoy it so much. In every decision we make at work, as in any other aspect of life, there are so many aspects, situations, and details that always affect the beautiful methodology you see in an article, a paper, a class, or a tweet.