Introduction

Kubernetes autoscaling has undergone a major change. Since the Kubernetes 1.26 release, all components should migrate their HorizontalPodAutoscaler objects from v1 to the new autoscaling/v2 API version, which has been available as a stable API since Kubernetes 1.23. The 1.26 release also stopped serving the autoscaling/v2beta2 version.

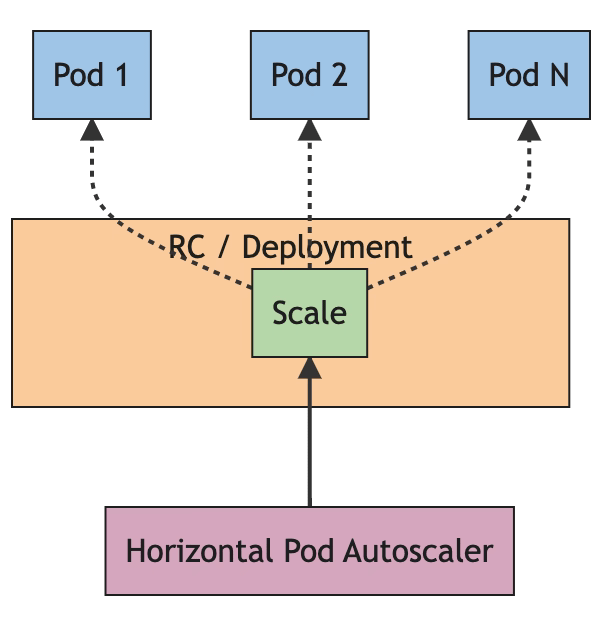

HorizontalPodAutoscaler is a crucial component in any workload deployed on a Kubernetes cluster, as the scalability of this solution is one of the great benefits and key features of this kind of environment.

A Little Bit of History

Kubernetes introduced its autoscaling capability a long time ago, in version 1.3, back in 2016. The solution is based on a control loop that runs at a fixed interval (15 seconds by default), which you can configure with the --horizontal-pod-autoscaler-sync-period parameter of the kube-controller-manager.

Once per period, the controller fetches the metrics and evaluates them against the condition defined in the HorizontalPodAutoscaler object. Initially, this was based on the compute resources used by the pod: main memory and CPU.

This was an excellent feature, but with the passage of time and the growing adoption of Kubernetes, it has proven a little too narrow to handle all the scenarios we need to cover. This is where other awesome projects we have discussed here, such as KEDA, come into the picture to provide a much more flexible set of features.
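To make one pass of that control loop concrete, here is a minimal sketch of the replica calculation described in the Kubernetes documentation: the desired count is the current count scaled by the ratio between the observed and the target metric, rounded up, with no change when the ratio is within a tolerance (0.1 by default). The function name, the clamping arguments, and the sample numbers are illustrative assumptions, not part of any Kubernetes API.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Approximate one evaluation of the HPA rule (hypothetical helper).

    desired = ceil(current_replicas * current_metric / target_metric)
    """
    ratio = current_metric / target_metric
    # Inside the tolerance band the controller leaves the replica count alone.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    proposal = math.ceil(current_replicas * ratio)
    # The result is always kept between minReplicas and maxReplicas.
    return max(min_replicas, min(max_replicas, proposal))


# Sample values (assumed): average CPU utilization in percent, target 60%.
print(desired_replicas(4, 90, 60))  # load above target, scale up
print(desired_replicas(4, 62, 60))  # within tolerance, no change
print(desired_replicas(4, 30, 60))  # load below target, scale down
```

The real controller also accounts for pods that are not ready or have missing metrics, which this sketch leaves out.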

Kubernetes Autoscaling Capabilities Introduced in v2

With the release of v2 of the Autoscaling API objects, a range of capabilities has been added to increase the flexibility and options available. The most relevant ones are the following:

- Scaling on custom metrics: With the new release, you can configure a HorizontalPodAutoscaler object to scale using custom metrics. When we talk about custom metrics, we mean any metric generated from Kubernetes. You can find a detailed walkthrough about using custom metrics in the official documentation.
- Scaling on multiple metrics: With the new release, you also have the option to scale based on more than one metric. The HorizontalPodAutoscaler will evaluate each scaling rule condition, propose a new scale value for each of them, and take the maximum value as the final one.
- Support for Metrics API: With the new release, the HorizontalPodAutoscaler controller retrieves metrics from a series of registered APIs, such as metrics.k8s.io, custom.metrics.k8s.io, and external.metrics.k8s.io. For more information on the different metrics available, you can take a look at the design proposal.
- Configurable scaling behavior: With the new release, you have a new field, behavior, that lets you configure how the component behaves when scaling up or scaling down. You can define different policies for scaling up and for scaling down, and limit the maximum number of replicas that can be added or removed in a specific period, which helps with the spikes of some components such as Java workloads, among others. You can also define a stabilization window to avoid flapping while the metric is still fluctuating.
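The capabilities above can be combined in a single object. The sketch below builds an autoscaling/v2 manifest as a Python dictionary and prints it as JSON, which kubectl accepts as well as YAML. The Deployment name web, the custom metric packets-per-second, and every numeric value are assumptions chosen for illustration; the field names follow the autoscaling/v2 API.

```python
import json

# Hypothetical HorizontalPodAutoscaler for a Deployment called "web".
hpa = {
    "apiVersion": "autoscaling/v2",
    "kind": "HorizontalPodAutoscaler",
    "metadata": {"name": "web"},
    "spec": {
        "scaleTargetRef": {
            "apiVersion": "apps/v1",
            "kind": "Deployment",
            "name": "web",
        },
        "minReplicas": 2,
        "maxReplicas": 10,
        # Multiple metrics: each one yields a proposal, the largest wins.
        "metrics": [
            {
                "type": "Resource",  # served by metrics.k8s.io
                "resource": {
                    "name": "cpu",
                    "target": {"type": "Utilization", "averageUtilization": 60},
                },
            },
            {
                "type": "Pods",  # served by custom.metrics.k8s.io
                "pods": {
                    "metric": {"name": "packets-per-second"},  # assumed metric
                    "target": {"type": "AverageValue", "averageValue": "1k"},
                },
            },
        ],
        "behavior": {
            # Add at most 4 pods every 60 seconds when scaling up.
            "scaleUp": {
                "policies": [{"type": "Pods", "value": 4, "periodSeconds": 60}],
            },
            # Wait 5 minutes of stable metrics, then remove at most 50% per minute.
            "scaleDown": {
                "stabilizationWindowSeconds": 300,
                "policies": [{"type": "Percent", "value": 50, "periodSeconds": 60}],
            },
        },
    },
}

print(json.dumps(hpa, indent=2))
```

Saving the output to a file and applying it with kubectl apply -f would create the object, provided a metrics adapter actually serves the assumed custom metric.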

Kubernetes Autoscaling v2 vs KEDA

We have seen all the new benefits that Autoscaling v2 provides, so I’m sure that most of you are asking the same question: Is Kubernetes Autoscaling v2 killing KEDA?

Recent releases of KEDA already use the new objects under the autoscaling/v2 group, since KEDA relies on the native Kubernetes objects. KEDA also simplifies part of the work you need to do when you want to use custom or external metrics, as it has scalers available for pretty much everything you could need now or even in the future.

But, even with that, there are still features that KEDA provides that are not covered here, such as the scaling “from zero” and “to zero” capabilities that are very relevant for specific kinds of workloads and to get a very optimized use of resources. Still, it’s safe to say that with the new features included in the autoscaling/v2 release, the gap is now smaller. Depending on your needs, you can go with the out-of-the-box capabilities without including a new component in your architecture.
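As a rough illustration of the scale-to-zero difference, the sketch below builds a KEDA ScaledObject as a Python dictionary with minReplicaCount set to 0, something a plain HorizontalPodAutoscaler does not allow by default. The Deployment name worker, the Prometheus address, the query, and the threshold are all assumed values; check the KEDA documentation for the exact trigger fields of the scaler you use.

```python
import json

# Hypothetical KEDA ScaledObject that lets a Deployment called "worker"
# scale down to zero replicas when there is no work to do.
scaled_object = {
    "apiVersion": "keda.sh/v1alpha1",
    "kind": "ScaledObject",
    "metadata": {"name": "worker"},
    "spec": {
        "scaleTargetRef": {"name": "worker"},
        "minReplicaCount": 0,   # scale "to zero" when idle
        "maxReplicaCount": 10,
        "triggers": [
            {
                "type": "prometheus",
                "metadata": {
                    # Assumed in-cluster Prometheus endpoint and query.
                    "serverAddress": "http://prometheus.monitoring:9090",
                    "query": "sum(rate(http_requests_total[2m]))",
                    "threshold": "100",
                },
            }
        ],
    },
}

print(json.dumps(scaled_object, indent=2))
```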

📚 Want to dive deeper into Kubernetes? This article is part of our comprehensive Kubernetes Architecture Patterns guide, where you’ll find all fundamental and advanced concepts explained step by step.