When you are debugging or troubleshooting an HTTP-based integration in TIBCO BusinessWorks, whether it involves a REST or a SOAP service, increasing the HTTP logs is one of the most common and helpful things you can do.

This article is part of my comprehensive TIBCO Integration Platform Guide, where you can find more patterns and best practices for TIBCO integration platforms.

The primary purpose of increasing the HTTP logs is to see exactly what you are sending to, and receiving from, the other party in the communication, so you can understand an error or an unexpected behavior.

What are the primary use cases for increasing the HTTP logs?

In the end, all use cases are variations of the primary one: “Get full knowledge of the HTTP exchange between both parties.” Still, some more specific ones are listed below:

- Understand why a backend server is rejecting a call, for example because of an authentication or authorization problem, when you need to see the detailed response from the backend server.

- Verify the value of each HTTP header you are sending, since headers can affect aspects such as compression or the accepted content type.

- See why your service is rejecting a call from a consumer.

Splitting the communication based on the source

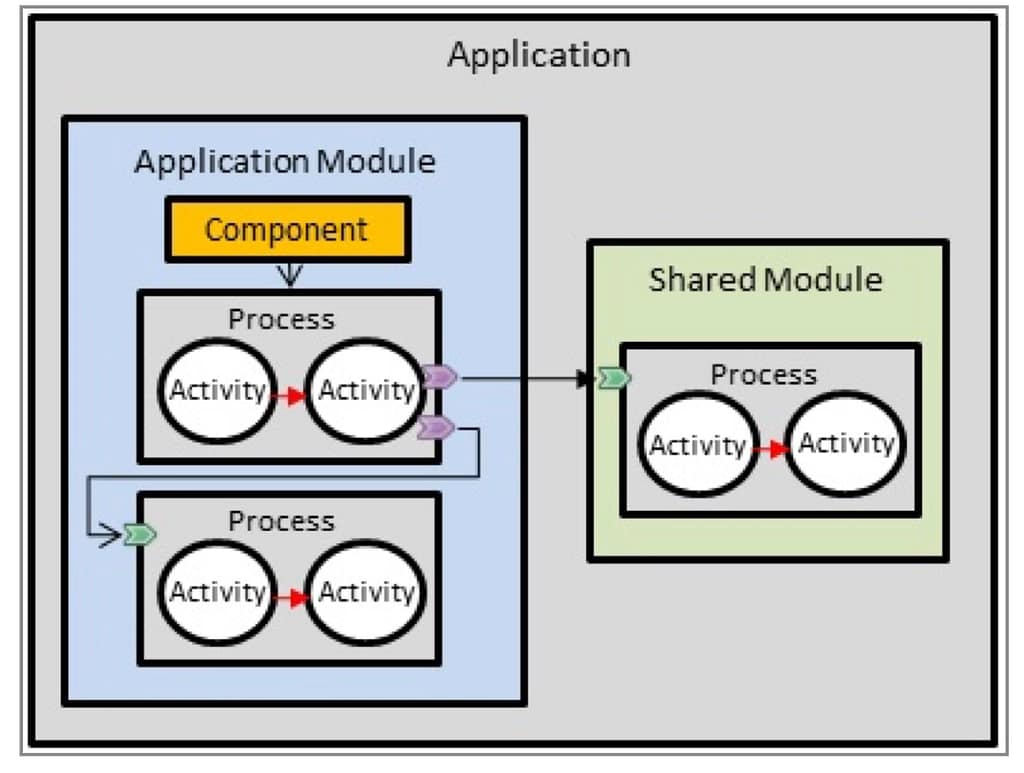

The most important thing to understand is that the logs depend on the library in use, and the library used to expose an HTTP-based server is not the same as the library used to consume an HTTP-based service such as a REST or SOAP service.

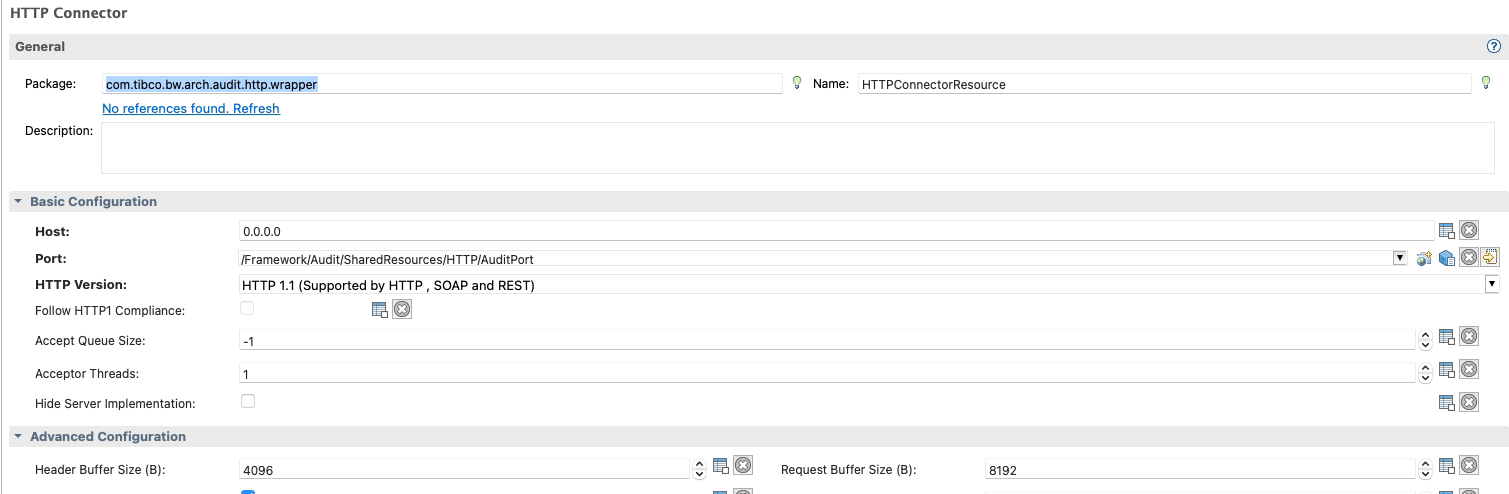

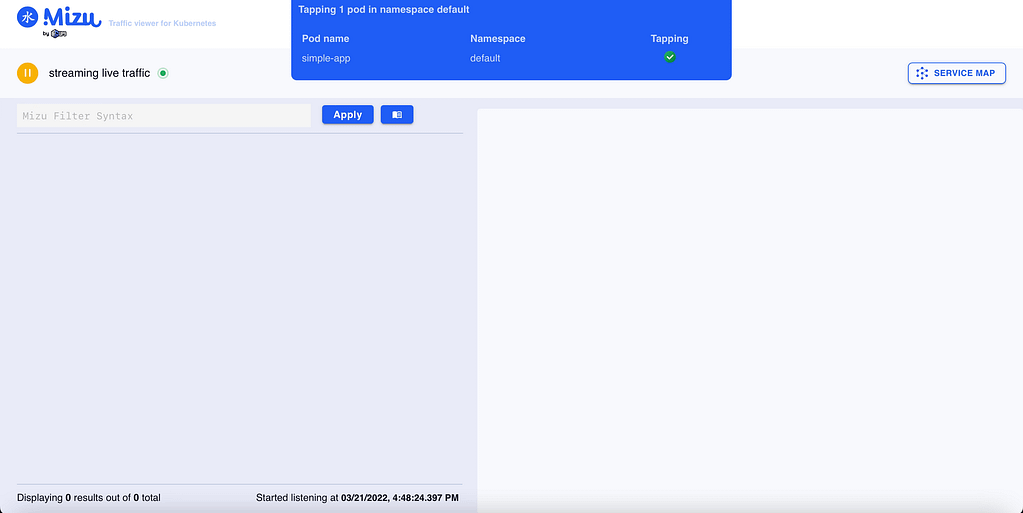

Let's start with what you expose. This is the easier case, because it is defined by the HTTP Connector resource you are using, as you can see in the picture below:

All HTTP Connector resources that you can use to expose REST and SOAP services are based on the Jetty server implementation. This means the loggers whose configuration you need to change belong to the Jetty server itself.

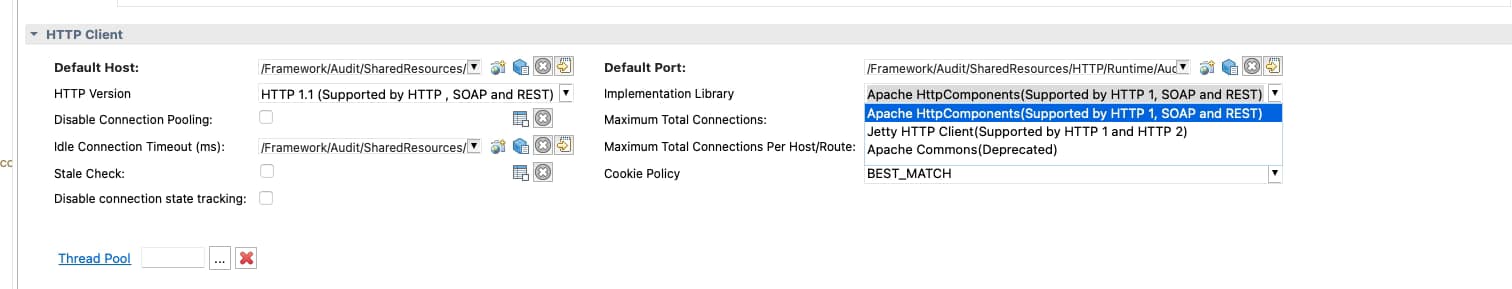

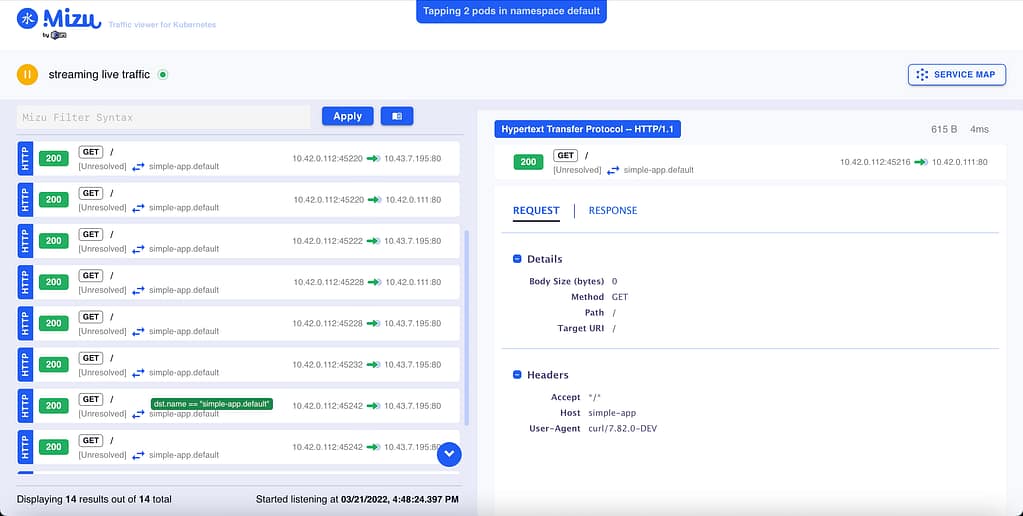

In theory, the client side is more complex. When a TIBCO BusinessWorks application consumes an HTTP-based service provided by a backend, each of these communications has its own HTTP Client shared resource. Their configurations can differ, because one of the available settings is the Implementation Library, and that setting directly determines how you change the log configuration.

You have three options when you define an HTTP Client Resource, as you can see in the picture above:

- Apache HttpComponents: The default option. It supports HTTP/1 for both SOAP and REST services.

- Jetty HTTP Client: This client supports only plain HTTP flows (HTTP/1 and HTTP/2), and it is the main option when you are working with HTTP/2 flows.

- Apache Commons: Similar to the first one, but currently deprecated. If any client component still uses this option, you should move it to Apache HttpComponents when you can.

So, if we are consuming a SOAP or REST service, we will usually be using the Apache HttpComponents implementation library, and that tells us which logger we need.

For Apache HttpComponents, we can rely on the logger `org.apache.http`. To increase the logs on the server side, or when we are using the Jetty HTTP Client, we can use `org.eclipse.jetty.http` instead.

Be aware that we cannot increase the logs for just a single HTTP Client resource, because the configuration is based on the Implementation Library. If we set the DEBUG level for the Apache HttpComponents library, it affects all shared resources that use this implementation library. You will then need to tell the calls apart using the data inside the log, and that becomes part of your analysis.

How to set HTTP Logs in TIBCO BusinessWorks?

Now that we know the loggers, we must set them to the DEBUG (or TRACE) level. There are several ways to do that, depending on how we prefer to work and what access we have. The scope of this article is TIBCO BusinessWorks Container Edition, but you can easily apply part of this knowledge to an on-premises TIBCO BusinessWorks installation.

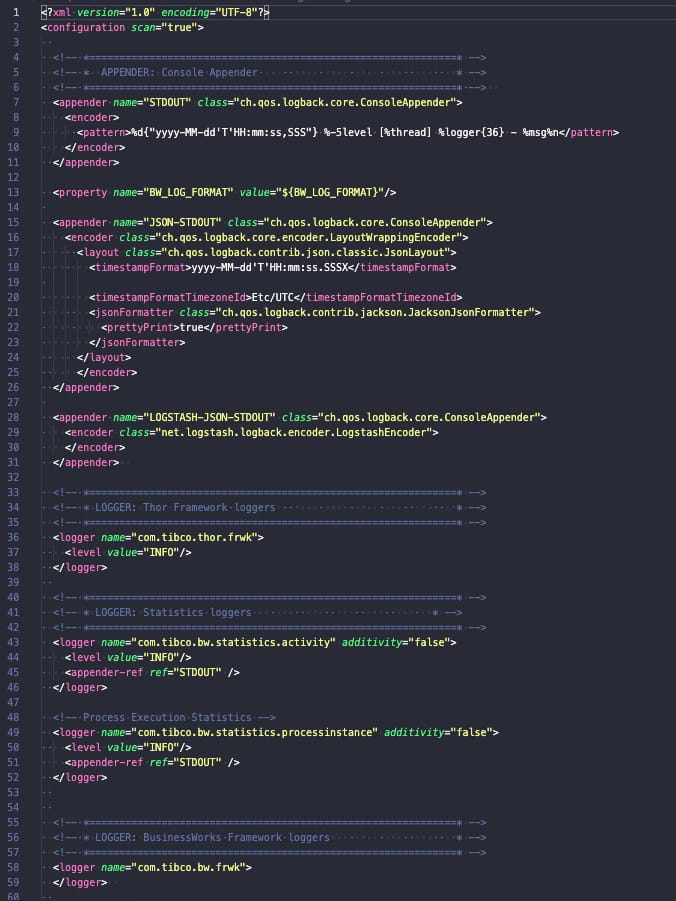

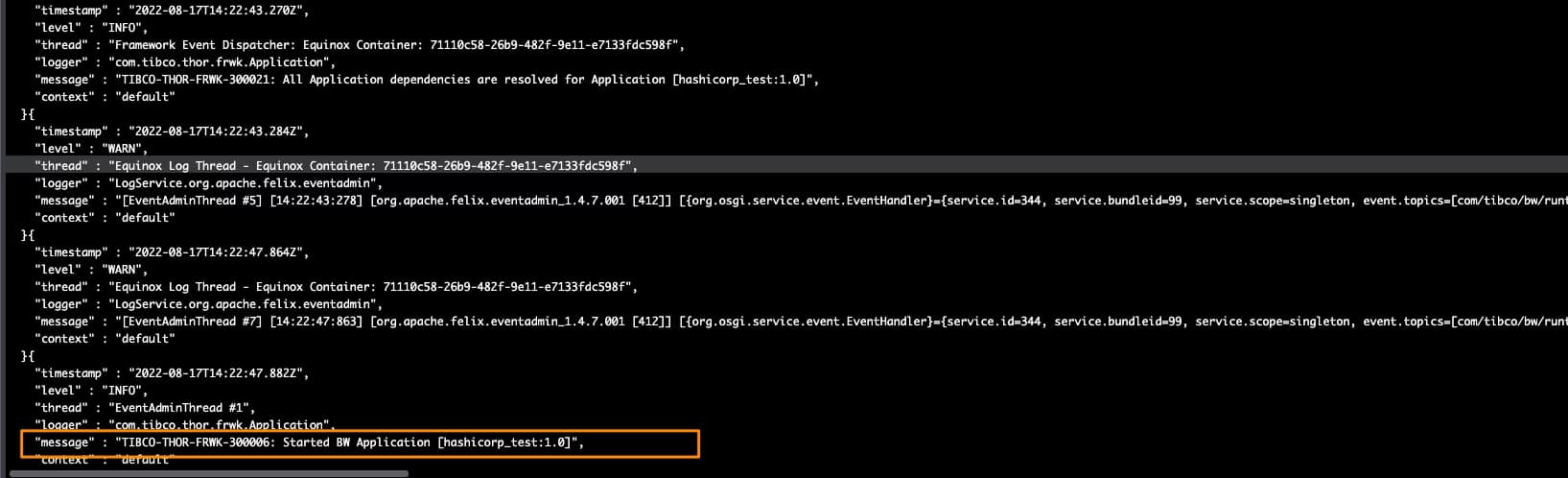

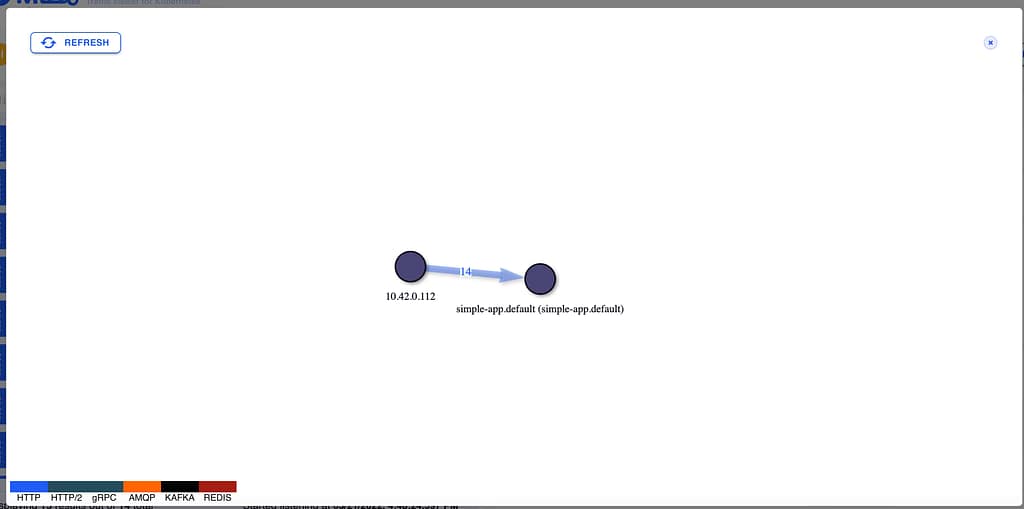

TIBCO BusinessWorks (Container Edition or not) relies on the logback library for its logging capabilities. This library is configured through a file named logback.xml, which holds the configuration you need to change, as shown in the pictures above.

So, to add a new logging configuration, we need to add a new `logger` element to the file with the following structure:

```xml
<logger name="%LOGGER_WE_WANT_TO_SEE%">
  <level value="%LEVEL_WE_WANT_TO_SEE%"/>
</logger>
```

The logger name comes from the previous section, and the level depends on how much information you want to see. The available log levels, from least to most verbose, are ERROR, WARN, INFO, DEBUG, and TRACE. DEBUG and TRACE show the most information.

In our case, DEBUG should be enough to get the full HTTP request and response. You can use the same approach for other loggers that may need a different log level.

Now you need to add that entry to the logback.xml file. As mentioned, you have several options to do so:
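Filling in the template with the two loggers named earlier, the entries might look like the sketch below. It assumes you want both the client side (Apache HttpComponents) and the Jetty side at DEBUG. Keep only the entry you actually need, because each one raises the verbosity for every resource that uses that library.

```xml
<!-- Client side: HTTP Client shared resources using Apache HttpComponents -->
<logger name="org.apache.http">
  <level value="DEBUG"/>
</logger>

<!-- Server side (Jetty-based HTTP Connector) and the Jetty HTTP Client -->
<logger name="org.eclipse.jetty.http">
  <level value="DEBUG"/>
</logger>
```

Place these elements inside the existing `<configuration>` root element of logback.xml, next to the loggers already defined there.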

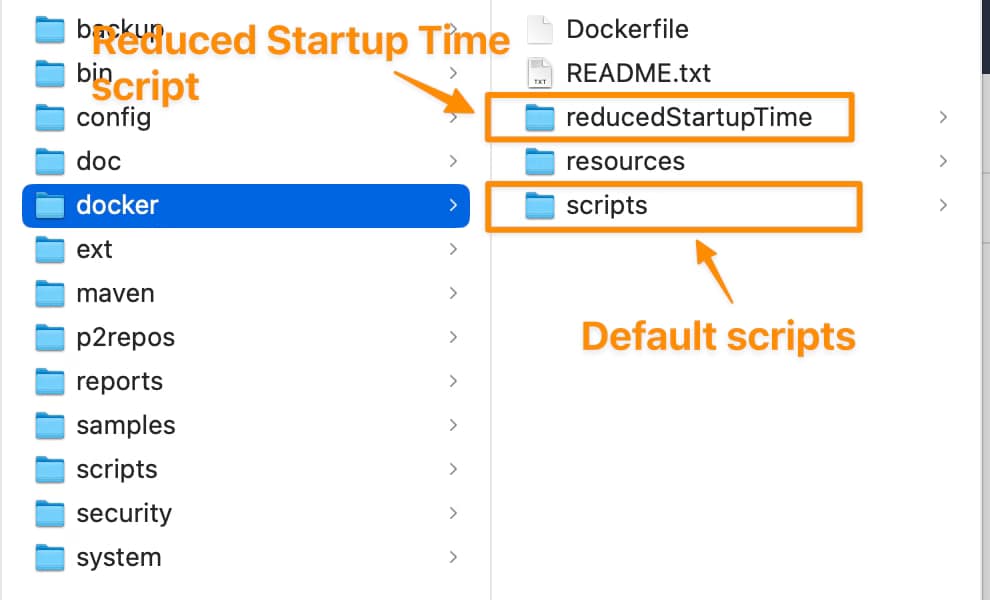

- You can find the logback.xml file inside the BWCE container (or in the AppNode configuration folder) and modify its content. The default location of this file is `/tmp/tibco.home/bwce/<VERSION>/config/logback.xml`. To do this, you need permission to run `kubectl exec` on the BWCE container. The change is temporary and is lost on the next restart, which could be good or bad depending on your goal.

- If you want the change to be permanent, or you don't have access to the container, you have two options. The first one is to include a custom copy of logback.xml in the `/resources/custom-logback/` folder of the BWCE base image and set the environment variable `CUSTOM_LOGBACK` to `TRUE`. This overrides the default logback.xml configuration with the content of your file. As mentioned, this is "permanent" and applies from the first deployment of the app with this configuration. You can find more information in the official documentation.

- Since BWCE 2.7.0, there is an additional option that lets you change the logback.xml content without providing a new copy or changing the base image: the `BW_LOGGER_OVERRIDES` environment variable. Its content uses the form `logger=value`, so in our case it would be `org.apache.http=DEBUG`, and the next deployment picks up this configuration. Like the previous option, this is permanent, but it doesn't require adding a file to the base image.
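For the `CUSTOM_LOGBACK` option, the file placed in `/resources/custom-logback/` replaces the whole default logback.xml. The sketch below is a hypothetical, minimal example that only shows where the logger entry goes. The appender name `STDOUT` and its pattern are assumptions. In practice, start from a copy of the default BWCE logback.xml so you keep its appenders and loggers, and only add the entry you need.

```xml
<?xml version="1.0" encoding="UTF-8"?>
<!-- Hypothetical minimal custom logback.xml. In practice, copy the default
     BWCE file and add only the logger entry you need. -->
<configuration>
  <!-- Assumed console appender; the default BWCE file defines its own appenders -->
  <appender name="STDOUT" class="ch.qos.logback.core.ConsoleAppender">
    <encoder>
      <pattern>%d{HH:mm:ss.SSS} %-5level [%thread] %logger{36} - %msg%n</pattern>
    </encoder>
  </appender>

  <!-- HTTP client traffic for every resource using Apache HttpComponents -->
  <logger name="org.apache.http">
    <level value="DEBUG"/>
  </logger>

  <!-- Everything else stays at INFO -->
  <root level="INFO">
    <appender-ref ref="STDOUT"/>
  </root>
</configuration>
```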

So, as you can see, you have different options depending on your needs and access levels.

Conclusion

In conclusion, increasing the HTTP logs in TIBCO BusinessWorks is a vital strategy when debugging and troubleshooting. Raising the log level gives you a complete view of the information exchanged, which helps you analyze errors and unexpected behavior. Typical uses include finding out why a backend rejects a call, checking the effect of HTTP headers, or understanding why a consumer call is being rejected. The loggers to adjust depend on the library in use, which differs between exposing services (the Jetty-based HTTP Connector) and consuming them (the HTTP Client implementation library). The configuration itself is done through logback by adding the right logger at the right level. This practice helps developers untangle integration issues efficiently and keep HTTP-based interactions across systems reliable.