Learn From My Own Experience To Clear Your Kubernetes Certification Exam

But I would also like to share some practical advice based on my own experience, in case it helps anyone else going through the same process. I know there are many similar articles, and most of them are worth reading because each one offers a different perspective and approach. So here is mine:

- Fast but Safe. You will have around 2 hours to complete between 15 and 20 practical questions, which gives you roughly 6 to 8 minutes per question on average. That's enough time, but you must move fast. So, try to avoid reading the whole exam first or jumping back and forth between questions. It is better to start with the first one right away and, if you get blocked, move on to the next one. At the same time, you must validate the output you get to make sure you are not missing anything: run a command to check that the objects have been created correctly, with the right attributes and configuration, before moving to the next question. Time is precious. I had plenty of time at the end of the exam to review the questions, but it is also true that I spent 20 minutes on one of them because I wrote ngnix instead of nginx and was unable to see it!!

- Imperative commands are the way to go: You must learn the YAML structure of the main objects (Deployment, Pod, CronJob, Job, etc.). You will also need to master the imperative commands to generate the initial output quickly. Imperative commands such as kubectl run, kubectl create, and kubectl expose will not give you 100% of the answer, but maybe 80%, which is a base you can quickly adjust to reach the solution. I recommend taking a look at this resource:

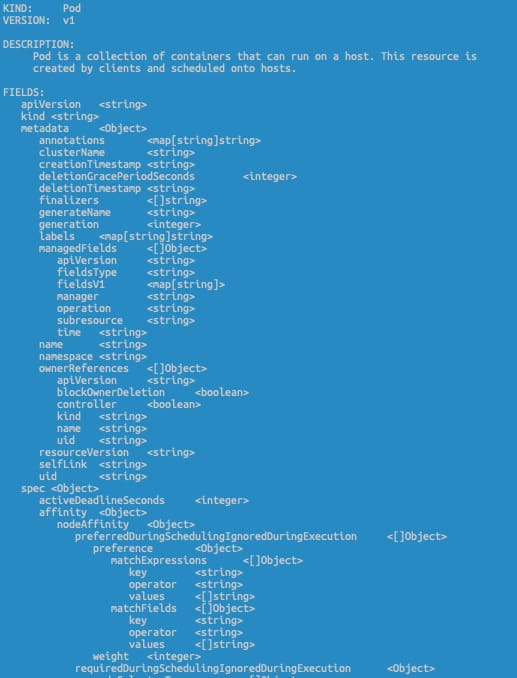

- kubectl explain to avoid searching the documentation or thinking too much: I have trouble remembering the exact name of a field or its location in the YAML file, so I used kubectl explain a lot, especially with the --recursive flag. It prints the YAML structure, so if you don't remember whether the key is configMap or configMapRef, or claimName or persistentVolumeClaim, it will be an incredible help. If you also pipe the output through grep -A 10 -B 5 to find your field and its surrounding context, you will master it. This doesn't replace knowing the YAML structure, but it helps you stay efficient when you don't remember the exact name or location.

- Don't forget about docker/podman and helm: With the changes to the certification in September 2021, building container images has also become essential, so if you have enough time in your preparation, it is a great idea to practice with tools such as docker/podman and helm. That way you will be ready for any related question you may find.

- Use the simulator: The Linux Foundation gives you two simulator sessions that provide an authentic exam experience. You will face similar kinds of questions and a similar interface, so the real exam won't feel like the first time, and you will already be familiar with the environment. I recommend using both sessions (both have the same questions): one in the middle of your training and the second one just one or two days before your exam.
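For the "Fast but Safe" tip, here is a minimal Python sketch of the validation step, assuming kubectl is on PATH and a pod that is supposed to run the nginx image. The helper names pod_images and image_mismatches are hypothetical, written only for illustration; in the exam itself you would simply run the equivalent kubectl get command with the same jsonpath expression and read the result.

```python
import subprocess


def pod_images(name: str, namespace: str = "default") -> list[str]:
    """Return the container images of a pod (hypothetical helper).

    Requires kubectl on PATH and access to a cluster. The jsonpath
    expression prints every container image separated by spaces.
    """
    result = subprocess.run(
        ["kubectl", "get", "pod", name, "-n", namespace,
         "-o", "jsonpath={.spec.containers[*].image}"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout.split()


def image_mismatches(actual: list[str], expected: list[str]) -> tuple[list[str], list[str]]:
    """Compare the images found on the pod with the ones the question asked for.

    Returns (missing, unexpected): images that were requested but are absent,
    and images that are present but were not requested (e.g. a typo).
    """
    missing = [img for img in expected if img not in actual]
    unexpected = [img for img in actual if img not in expected]
    return missing, unexpected
```

If a pod (hypothetically named web) had been created with the ngnix typo, image_mismatches(pod_images("web"), ["nginx"]) would return (["nginx"], ["ngnix"]), which makes the mistake obvious instead of costing 20 minutes.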
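For the imperative-commands tip, a minimal Python sketch of the generate-then-edit workflow, assuming kubectl is on PATH. The imperative command is run with --dry-run=client -o yaml, so kubectl prints the manifest instead of creating the object; you then save that YAML to a file, adjust the remaining 20%, and apply it with kubectl apply -f. The helpers scaffold_argv and write_scaffold are hypothetical names for illustration; in the exam you would type the kubectl command directly and redirect its output to a file.

```python
import subprocess
from pathlib import Path


def scaffold_argv(kind: str, name: str, image: str, *extra: str) -> list[str]:
    """Build a kubectl command that renders a manifest without creating it."""
    if kind == "pod":
        # Pods are generated with `kubectl run`.
        base = ["kubectl", "run", name, f"--image={image}"]
    else:
        # e.g. deployment, job, cronjob (a cronjob also needs --schedule=...).
        base = ["kubectl", "create", kind, name, f"--image={image}"]
    # --dry-run=client -o yaml prints the object as YAML instead of creating it.
    return base + list(extra) + ["--dry-run=client", "-o", "yaml"]


def write_scaffold(argv: list[str], path: Path) -> None:
    """Run the command (requires kubectl on PATH) and save the YAML for editing."""
    result = subprocess.run(argv, capture_output=True, text=True, check=True)
    path.write_text(result.stdout)
```

For example, write_scaffold(scaffold_argv("deployment", "web", "nginx", "--replicas=3"), Path("web.yaml")) runs kubectl create deployment web --image=nginx --replicas=3 --dry-run=client -o yaml and leaves the result in web.yaml, ready to be edited (the names web and web.yaml are placeholders).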
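For the kubectl explain tip, the exam workflow is the shell pipeline itself (kubectl explain RESOURCE --recursive piped into grep -A 10 -B 5 FIELD). The minimal Python sketch below only illustrates what those grep flags do to the explain output, assuming kubectl is on PATH with access to a cluster. The helper names explain_recursive and grep_context are hypothetical, and unlike grep this version does not print the "--" separators between non-adjacent groups of lines.

```python
import re
import subprocess


def explain_recursive(resource: str) -> str:
    """Return the output of `kubectl explain RESOURCE --recursive`.

    Requires kubectl on PATH and access to a cluster, since kubectl
    reads the schema from the API server.
    """
    result = subprocess.run(
        ["kubectl", "explain", resource, "--recursive"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout


def grep_context(text: str, pattern: str, before: int = 5, after: int = 10) -> list[str]:
    """Return lines matching `pattern` plus context, like `grep -B before -A after`."""
    lines = text.splitlines()
    regex = re.compile(pattern)
    keep: set[int] = set()
    for i, line in enumerate(lines):
        if regex.search(line):
            # Keep `before` lines above and `after` lines below each match.
            keep.update(range(max(0, i - before), min(len(lines), i + after + 1)))
    return [lines[i] for i in sorted(keep)]
```

For example, printing each line of grep_context(explain_recursive("pod.spec"), "claimName") shows where claimName sits under persistentVolumeClaim, together with the neighbouring fields.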

So, those are my tips, and I hope you like them. If they were helpful to you, please let me know on social networks, by email, or in any other way you prefer! All the best in your preparation, and I'm sure you will reach your goals!

📚 Want to dive deeper into Kubernetes? This article is part of our comprehensive Kubernetes Architecture Patterns guide, where you’ll find all fundamental and advanced concepts explained step by step.