With the rise of cloud-native development and architectures, Kubernetes-based platforms have become the new standard, and the focus is on new developments that follow the new paradigms and best practices: microservices, event-driven architectures, shiny new protocols like GraphQL or gRPC, and so on.

But we should be aware that most of the enterprises adopting these technologies today are not green-field opportunities. They already have a lot of systems running, and every new development in the cloud-native architecture needs to interact with them, so the rules become more complex and there are many shades of gray when we’re creating our Kubernetes applications.

In my experience, when we talk with customers about the new cloud architecture, most of them bring up the batch pattern. They know it is no longer a best practice, and that they cannot keep building their systems in a batch fashion, doing by night what should be done in real time. But most of the time they need to coexist with the systems they already have, and those systems may require this kind of procedure. So we need to find a way to provide Kubernetes batch processing.

Also, for customers whose existing applications already use that pattern and who would like to move to the new world, it is better if they can do so without re-architecting those applications. That is something they will probably end up doing, but at their own pace.

Batch processing is a widely used pattern in TIBCO development, and we have customers all over the world who have run this kind of development in production for many years. You know the kind: a process that is executed at a specific moment in time (weekly on Monday, every day at 3 PM, or just every hour to do some regular job).

It’s a straightforward pattern. Simply add a timer, configure when it should fire, and add your logic. That’s it. As simple as that:

But how can this be translated into a Kubernetes approach? How can we create applications that work with this pattern and are still managed by our platform? Fortunately, this can be done, and there are different ways of doing it depending on what you want to achieve.

Today we are going to describe two ways to do that and explain the main differences between them, so you know which one to use for your use case. I’m going to call these two methods Batch Managed by TIBCO and the Cron-Job Kubernetes API approach.

Batch Managed by TIBCO

This is the simplest one. It is exactly the same approach you have in your existing on-premises BusinessWorks application, just deployed into the Kubernetes cluster.

So you don’t need to change anything in your logic. You will simply have an application that is started by a Timer, with its scheduling configuration kept inside the BusinessWorks application, and that’s it.

You only need to provide the artifacts needed to deploy your application into Kubernetes, like any other TIBCO BusinessWorks app. That means you’re creating a Deployment to launch this component, and you will always have a running pod evaluating when the condition is true to launch the process, just as you have in your on-premises approach.
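As a rough sketch of what that artifact could look like, the Deployment below keeps a single pod running so the Timer inside the application can evaluate its schedule. The name `bw-batch-timer` and the image reference are hypothetical placeholders, not real TIBCO artifacts, and the `Recreate` strategy is an assumption chosen so that an old and a new pod never run side by side during a rollout.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: bw-batch-timer              # hypothetical name
spec:
  replicas: 1                       # a single pod, so the Timer fires once per scheduled time
  strategy:
    type: Recreate                  # terminate the old pod before creating the new one
  selector:
    matchLabels:
      app: bw-batch-timer
  template:
    metadata:
      labels:
        app: bw-batch-timer
    spec:
      containers:
      - name: bw-batch-timer
        image: example.registry.local/bw-batch-timer:1.0.0   # hypothetical image
```

With this shape the schedule itself never appears in the manifest: it stays in the Timer configuration of the BusinessWorks application.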

Cron-Job Kubernetes API

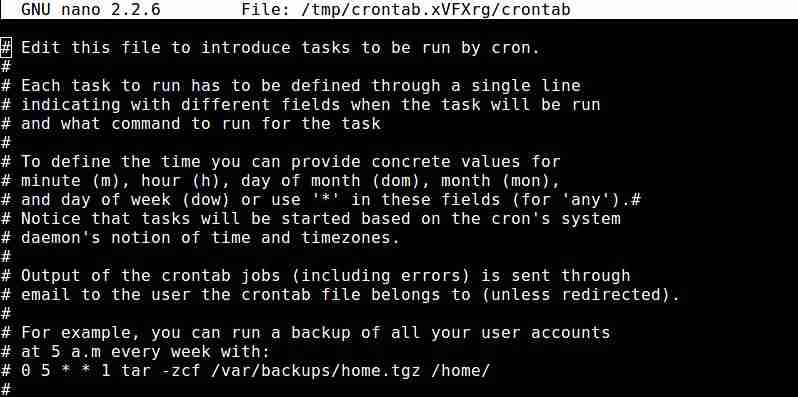

The batch processing pattern is already covered out of the box by the Kubernetes API, and that’s why we have the CronJob concept. You probably remember the cron jobs we have on our Linux machines. If you’re a developer or a system administrator, I’m sure you’ve played with these cron jobs to schedule tasks or commands to be executed at a certain time. If you’re a Windows-based person, think of it as the Linux equivalent of your Windows Task Scheduler jobs. Same approach.

And this is very simple: you only need to create a new artifact using the CronJob API that mainly states the job to execute and its schedule, similar to what we’ve done in the past with cron jobs on our Linux machines:

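    # batch/v1 is the stable CronJob API since Kubernetes 1.21 (older clusters used batch/v1beta1)
    apiVersion: batch/v1
    kind: CronJob
    metadata:
      name: hello
    spec:
      # Standard cron syntax: run every minute
      schedule: "*/1 * * * *"
      jobTemplate:
        spec:
          template:
            spec:
              containers:
              - name: hello
                image: busybox
                args:
                - /bin/sh
                - -c
                - date; echo Hello from the Kubernetes cluster
              # Restart the container only if it exits with an error
              restartPolicy: OnFailure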
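The schedule field uses the standard five-field cron syntax (minute, hour, day of month, month, day of week). As an illustration only, the schedules mentioned earlier could be written as shown here. The keys are hypothetical labels rather than Kubernetes fields, and the midnight time for the weekly run is an assumption; each value is what would go into `spec.schedule`.

```yaml
# Illustrative mapping only, not a Kubernetes object
weekly-on-monday: "0 0 * * 1"     # 00:00 every Monday (time of day is an assumption)
daily-at-3pm: "0 15 * * *"        # 15:00 every day
hourly: "0 * * * *"               # minute 0 of every hour
every-minute: "*/1 * * * *"       # the value used in the hello example
```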

The jobs that we can use here must comply with a single rule that also applies to Unix cron jobs: the command should end when the job is done.

This is critical: our container must exit when the job is done in order to be used with this approach. That means we cannot use a “server approach” as we did in the previous approach, because in that case the pod never ends. Does that mean I cannot use a TIBCO BusinessWorks application as part of a Kubernetes CronJob? Absolutely not! Let’s see how you can do it.

So we should focus on two things: the business logic should run as soon as the process starts, and the container should end as soon as the job is done. Let’s start with the first one.

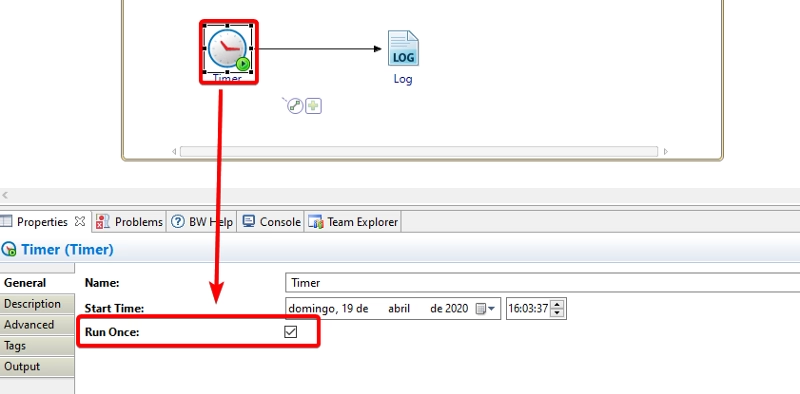

The first one is easy. Let’s use our previous sample: a simple batch approach that writes a log trace every minute. We need it to start as soon as the process starts, and this comes pretty much out of the box (OOTB) with TIBCO BusinessWorks: we only need to configure the timer to run only once, and it will fire as soon as the application is running:

So the first requirement is already covered. Let’s look at the other one.

We should be able to end the container completely when the process ends, and that’s challenging because BusinessWorks applications don’t behave that way. They’re supposed to be always running. But this can also be solved.

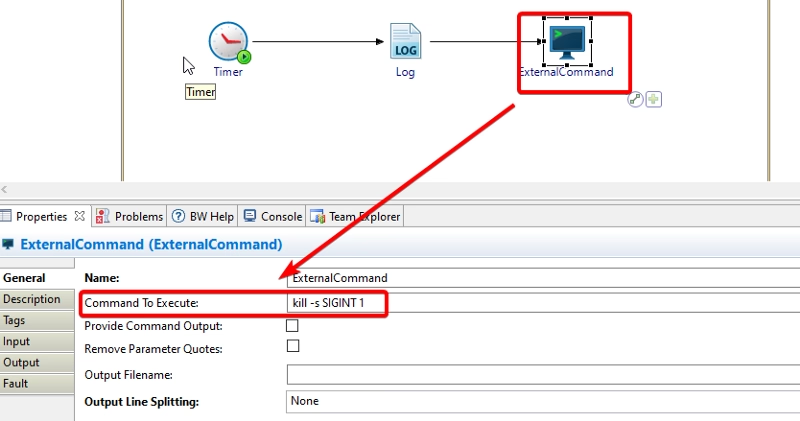

The only thing we need is to run a command at the end of the flow. It’s like an exit command in a shell script or in Java code that ends the process and the container. To do that, we add an “Execute External Command” activity and simply configure it to send a signal to the BusinessWorks process running inside the container.

The signal we’re going to send is SIGINT, the same one that is sent when we press CTRL+C in a terminal to ask the process to stop. We’re going to do the same. To do that, we’ll use the kill command, which ships with all Unix machines and with most Docker base images as well. The kill command requires two arguments:

kill -s <SIGNAL_NAME> <PID>

- SIGNAL_NAME: We already covered that part; we’re going to use the signal named SIGINT.

- PID: We need to provide the PID of the BusinessWorks process running inside the container.

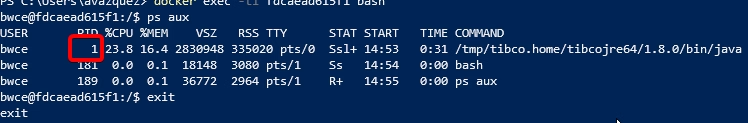

Finding the PID of the BusinessWorks process inside the container may sound difficult, but if you look at the running processes inside a BusinessWorks container you can see that it is not so complicated:

If we enter a running container of a BusinessWorks application, we will see that this PID is always 1. So we already have everything we need to configure the activity to run kill -s SIGINT 1, as shown below:

And that’s it. With these two changes we are able to deploy this using the CronJob API and use it as part of our toolset of application patterns.
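Putting the pieces together, a CronJob for such an application might look roughly like the sketch below. The name, the image reference and the 03:00 schedule are hypothetical placeholders, and `restartPolicy: Never` with `backoffLimit: 0` is an assumption that a failed run should not be retried automatically.

```yaml
apiVersion: batch/v1                # batch/v1beta1 on clusters older than Kubernetes 1.21
kind: CronJob
metadata:
  name: bw-batch-job                # hypothetical name
spec:
  schedule: "0 3 * * *"             # illustrative: every day at 03:00
  jobTemplate:
    spec:
      backoffLimit: 0               # assumption: do not retry a failed run
      template:
        spec:
          restartPolicy: Never
          containers:
          - name: bw-batch-job
            # Hypothetical image: a BusinessWorks app whose Timer runs once
            # and whose flow ends by sending SIGINT to PID 1
            image: example.registry.local/bw-batch-job:1.0.0
```

Kubernetes marks a Job as successful only when the container exits with code 0, so it is worth checking in your own environment which exit code the container returns after the signal.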

Pros and Cons of each approach

As you can imagine, there is no single answer about when to use one or the other, because it depends on your use case and your requirements. So I’ll try to list here the main differences between the two approaches, so you can make a better choice when it is in your hands:

- The TIBCO Managed approach is faster because the pod is already running, so the logic starts as soon as the condition is met. Using the CronJob API requires a warm-up period because the pod only starts when the condition is met, so some delay can be expected here.

- The TIBCO Managed approach requires the pod to be running all the time, so it uses resources even while the condition is not met. So if you’re running on a serverless container platform like AWS Fargate or similar, the CronJob API is a better fit.

- The CronJob API is a standard Kubernetes API, which means it is fully integrated with the ecosystem, whereas TIBCO Managed is managed by TIBCO through application configuration settings.

- The TIBCO Managed approach is not aware of other instances of the same application running, so you need to make sure only a single instance is running to avoid executing the same logic several times. With the CronJob API, this is managed by the Kubernetes platform itself.
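One nuance on the last point: by default a CronJob allows overlapping runs (`concurrencyPolicy: Allow`), so avoiding duplicate executions is a matter of setting a couple of fields. A minimal sketch, with a hypothetical name and image and purely illustrative values:

```yaml
apiVersion: batch/v1                # batch/v1beta1 on clusters older than Kubernetes 1.21
kind: CronJob
metadata:
  name: bw-batch-job-single         # hypothetical name
spec:
  schedule: "*/5 * * * *"           # illustrative: every five minutes
  concurrencyPolicy: Forbid         # skip a new run while the previous one is still running
  startingDeadlineSeconds: 120      # count a run as missed if it cannot start within 2 minutes
  jobTemplate:
    spec:
      template:
        spec:
          restartPolicy: Never
          containers:
          - name: bw-batch-job-single
            image: example.registry.local/bw-batch-job:1.0.0   # hypothetical image
```

`Replace` is the third option for `concurrencyPolicy`: it cancels the run in progress and starts the new one instead of skipping it.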