
What Is Kiali?

Kiali is an open-source project that provides observability for your Istio service mesh. Developed by Red Hat, Kiali helps users understand the structure and behavior of their mesh and any issues that may arise.

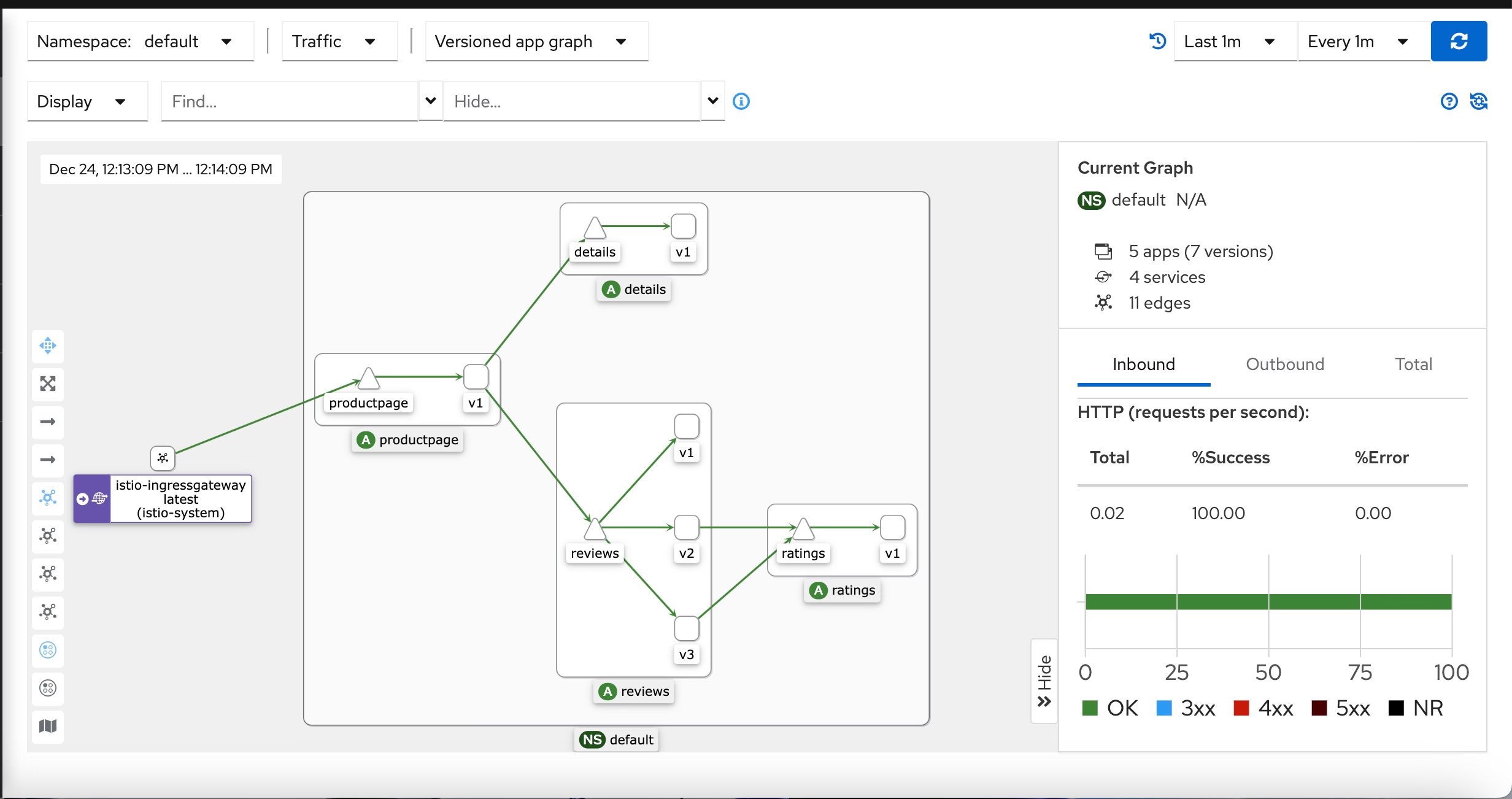

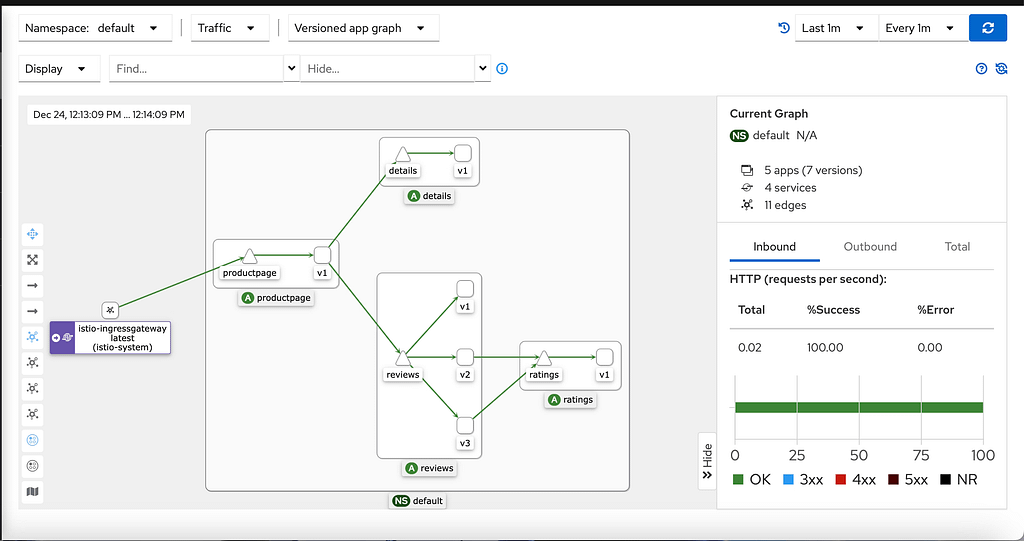

Kiali provides a graphical representation of your mesh, showing the relationships between the various service mesh components, such as services, virtual services, destination rules, and more. It also displays vital metrics, such as request and error rates, to help you monitor the health of your mesh and identify potential issues.

What Are Kiali's Main Capabilities?

One of the critical features of Kiali is its ability to visualize service-to-service communication within a mesh. This lets users quickly see how services are connected and how requests are routed through the mesh. This is particularly useful for troubleshooting, as it can help you quickly identify problems with service communication, such as misconfigured routing rules or slow response times.

Kiali also provides several tools for monitoring the health of your mesh. For example, it can alert you to potential problems, such as a high error rate or a service not responding to requests. It also provides detailed tracing information, allowing you to see the exact path a request took through the mesh and where any issues may have occurred.

In addition to its observability features, Kiali provides several other tools for managing your service mesh. For example, it includes a traffic management module, which allows you to control the flow of traffic through your mesh easily, and a configuration management module, which helps you manage and maintain the various components of your mesh.

Overall, Kiali is an essential tool for anyone using an Istio service mesh. It provides valuable insights into the structure and behavior of your mesh, as well as powerful monitoring and management tools. Whether you are just starting with Istio or are an experienced user, Kiali can help ensure that your service mesh runs smoothly and efficiently.

What are the main benefits of using Kiali?

The main benefits of using Kiali are:

- Improved observability of your Istio service mesh. Kiali provides a graphical representation of your mesh, showing the relationships between different service mesh components and displaying key metrics. This allows you to quickly understand the structure and behavior of your mesh and identify potential issues.

- Easier troubleshooting. Kiali’s visualization of service-to-service communication and detailed tracing information make it easy to identify problems with service communication and pinpoint the source of any issues.

- Enhanced traffic management. Kiali includes a traffic management module allowing you to control traffic flow through your mesh easily.

- Improved configuration management. Kiali’s configuration management module helps you manage and maintain the various components of your mesh.

How To Install Kiali?

There are several ways to install Kiali as part of your Service Mesh deployment. The preferred option is to use the Kiali Operator.

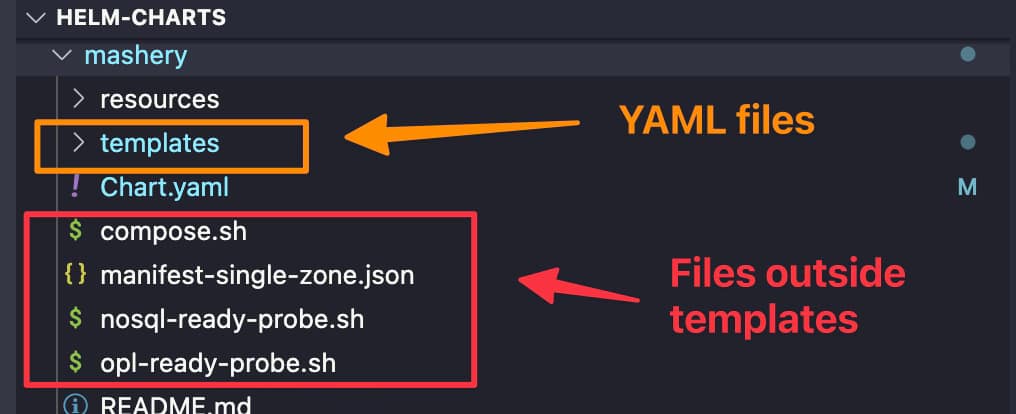

You can install this operator using Helm or OperatorHub. To install it using Helm Charts, you need to add the following repository using this command:

    helm repo add kiali https://kiali.org/helm-charts

Remember that once you add a new repo, you need to run the following command to update the available charts:

    helm repo update

Now you can install it using the helm install command, as in the following sample:

helm install \

--set cr.create=true \

--set cr.namespace=istio-system \

--namespace kiali-operator \

--create-namespace \

kiali-operator \

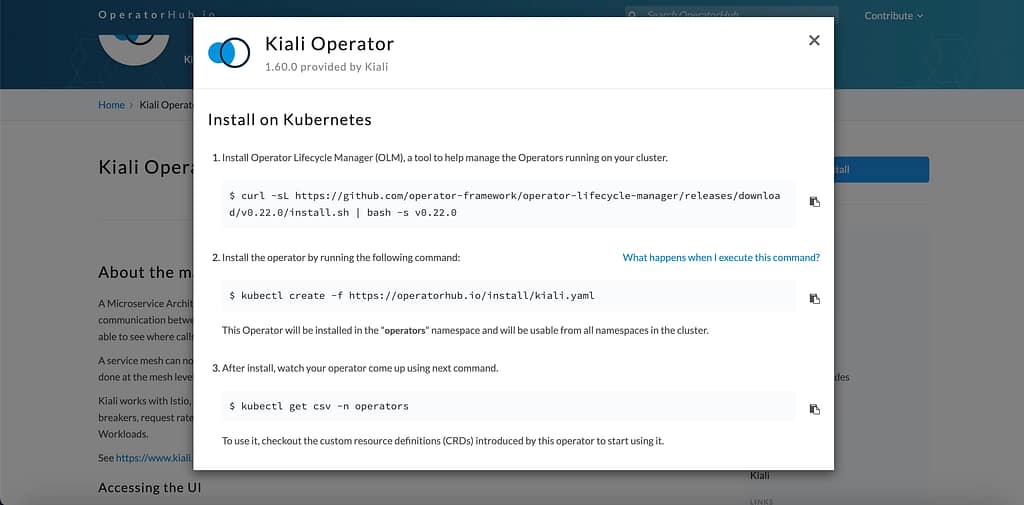

kiali/kiali-operatorIf you prefer going down the route of OperatorHub, you can use the following URL . Now, by clicking on the Install button, you will see the steps to have the component installed in your Kubernetes environment.
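Once the operator has created Kiali, it helps to confirm that it is running before opening the UI. The following is a minimal sketch, not an official procedure. It assumes that Kiali ends up in the istio-system namespace (as cr.namespace requests in the Helm sample) and that both the Deployment and the Service are named kiali listening on port 20001; adjust these if your installation differs. The function names are hypothetical helpers, and nothing runs until you call them.

```shell
# Assumption: Kiali lives in istio-system; override KIALI_NS otherwise.
KIALI_NS="${KIALI_NS:-istio-system}"

# Wait until the (assumed) "kiali" Deployment finishes rolling out,
# or give up after three minutes.
wait_for_kiali() {
  kubectl -n "$KIALI_NS" rollout status deployment/kiali --timeout=180s
}

# Forward the (assumed) "kiali" Service to localhost:20001.
# This call blocks until you interrupt it with Ctrl+C.
open_kiali() {
  kubectl -n "$KIALI_NS" port-forward svc/kiali 20001:20001
}

# Usage:
#   wait_for_kiali && open_kiali
```

With the port-forward active, the Kiali UI should be reachable in a browser on localhost:20001, typically under the /kiali path.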

In case you want a simple installation of Kiali, you can also use the sample YAML available inside the Istio installation folder using the following command:

    kubectl apply -f $ISTIO_HOME/samples/addons/kiali.yaml

How does Kiali work?

Kiali is just the graphical representation of the information available regarding how the service mesh works. So it is not the responsibility of Kiali to store those metrics but to retrieve them and draw them in a relevant way for the user of the tool.

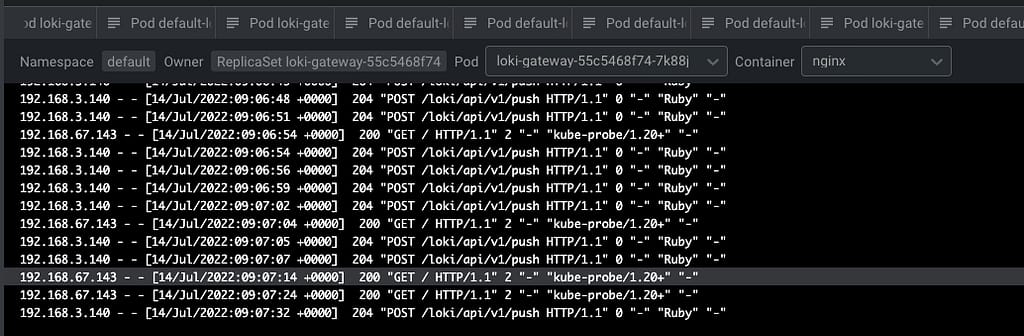

Prometheus stores this data, so Kiali uses the Prometheus REST API to retrieve the information and draw it graphically, as you can see here:

- It shows several relevant parts of the graph: the selected namespaces and, inside them, the different apps (Kiali detects an app when the workload carries a label named app). Inside each app, it shows the services and pods with different icons (triangles for the services and squares for the pods).

- It also shows how traffic reaches the cluster through the different ingress gateways and how it leaves the cluster if an egress gateway is configured.

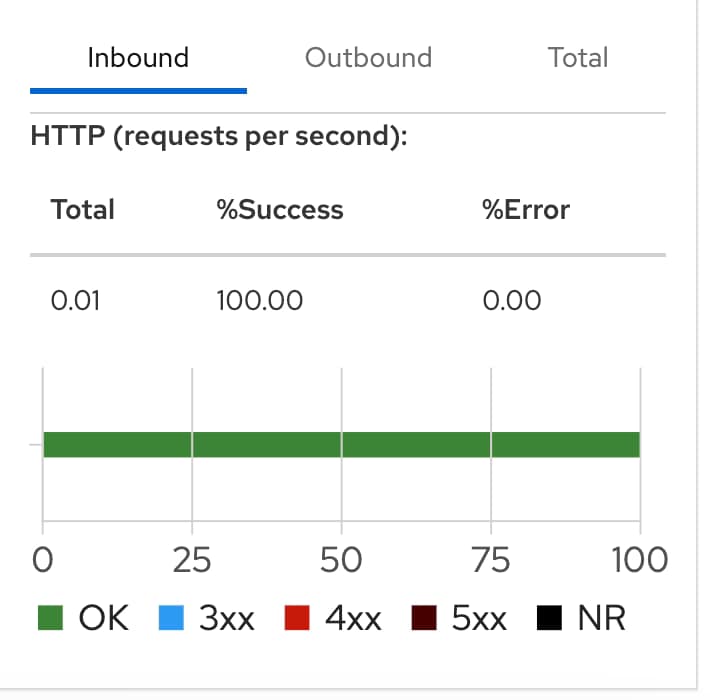

- It shows the kind of traffic being handled and the error rates per protocol, such as TCP or HTTP, as you can see in the picture below. The protocol is determined by a naming convention on the service's port name, which is expected to follow the format protocol-name (for example, http-web or tcp-db).
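The traffic and error rates in the list above come from Istio's standard metrics stored in Prometheus. To get a feel for the kind of data involved, here is a rough sketch that asks Prometheus for the rate of HTTP 5xx responses per workload in a namespace. It illustrates the Prometheus HTTP API and is not the exact query Kiali issues. The address in PROM_URL and both function names are assumptions made for this example; the address could, for instance, point at a port-forward to the Prometheus service.

```shell
# Assumption: Prometheus is reachable here (e.g. through a port-forward).
PROM_URL="${PROM_URL:-http://localhost:9090}"

# Print a PromQL expression for the per-workload rate of HTTP 5xx
# responses in the given namespace, based on Istio's standard
# istio_requests_total metric.
http_error_rate_query() {
  ns="$1"
  printf 'sum(rate(istio_requests_total{reporter="destination",destination_workload_namespace="%s",response_code=~"5.."}[5m])) by (destination_workload)' "$ns"
}

# Send a PromQL expression to Prometheus's instant-query endpoint
# and print the JSON response.
prom_query() {
  curl -sG "${PROM_URL}/api/v1/query" --data-urlencode "query=$1"
}

# Usage (requires a reachable Prometheus):
#   prom_query "$(http_error_rate_query default)"
```

Keeping the query builder separate from the HTTP call makes it easy to print and inspect the expression before sending it.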

Can Kiali be used with any service mesh?

No, Kiali is specifically designed for use with Istio service meshes.

It provides observability, monitoring, and management tools for Istio service meshes but is incompatible with other service mesh technologies.

If you use a different service mesh, you will need to find a different tool for managing and monitoring it.

Are there other alternatives to Kiali?

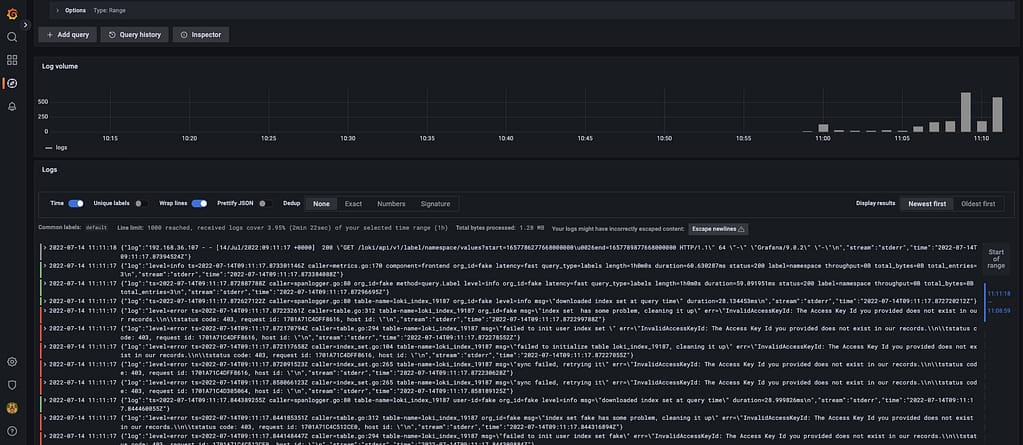

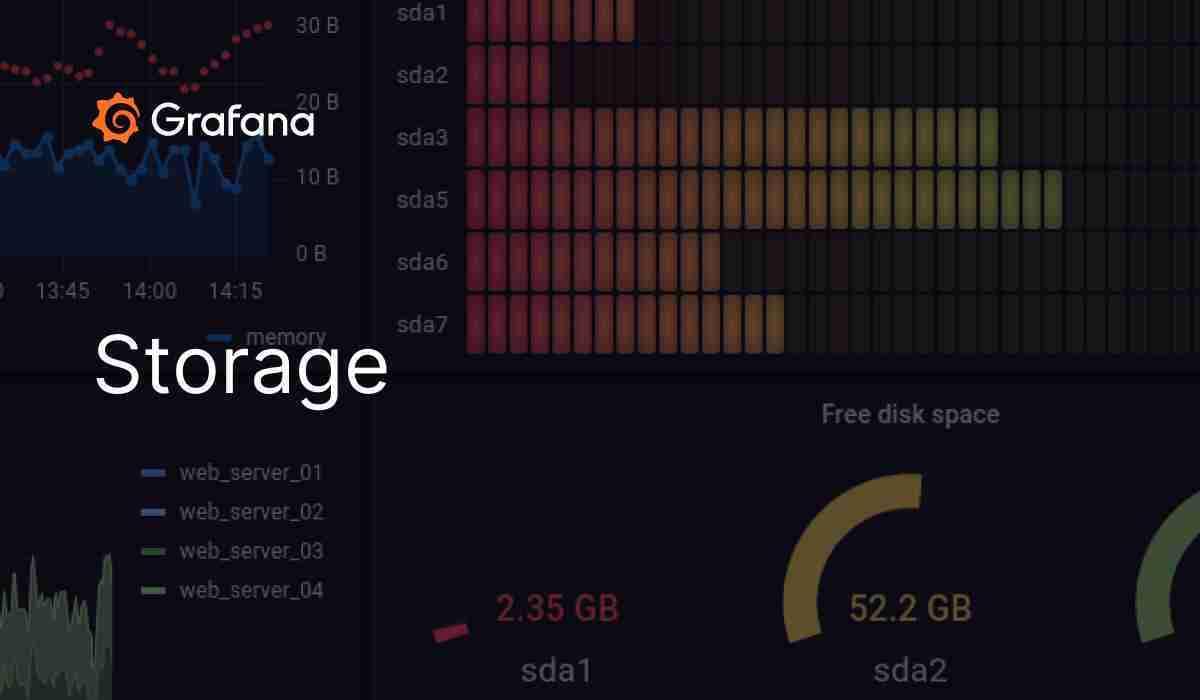

Although there are no direct alternatives to Kiali for visualizing your workloads and traffic through the Istio Service Mesh, you can use other tools to collect the metrics that feed Kiali and build custom visualizations with more generic tools such as Grafana.

As for tools similar to Kiali for other Service Meshes, such as Linkerd, Consul Connect, or Kuma, most follow a different approach in which the visualization part is not a separate “project” but relies on a standard visualization tool. That gives you much more flexibility, but it lacks most of the traffic visualization that Kiali provides, such as graph views or the ability to modify traffic directly from the graph view.
