Nomad is the Hashicorp alternative to the typical pattern of using a Kubernetes-based platform as the only way to orchestrate your workloads efficiently. Nomad is a project started in 2019, but it is getting much more relevant nowadays after 95 releases, and the current version of this article is 1.4.1, as you can see in their GitHub profile.

Nomad approaches the traditional challenges of isolating the application lifecycle for the infrastructure operation lifecycle where that application resides. Still, instead of going full to a container-based application, it tries to provide a solution differently.

What are the main Nomad Features?

Based on its own definition, as you can read on their GitHub profile, they already highlight some of the points of difference between the de-facto industry standard:

Nomad is an easy-to-use, flexible, and performant workload orchestrator that can deploy a mix of microservice, batch, containerized, and non-containerized applications

Easy-to-use: This is the first statement they include in their definition because the Nomad approach is much simpler than alternatives such as Kubernetes because it works on a single-binary approach where it has all the capabilities that are needed running as a node agent based on its own “vocabulary” that you can read more of it in their official documentation.

Flexibility: This is the other critical thing they provide a hypervisor, an intermediate layer between the application and the underlying infrastructure. It is not just limited to container applications but also supports this kind of deployment. It also allows the deploy it as part of a traditional virtual machine approach. The primary use cases highlighted are running standard windows applications, which is tricky when talking about Kubernetes deployments; even though Windows containers have been a thing for so long, their adoption is not at the same level, as you can see in the Linux container world.

Hashicorp Integration: As part of the Hashicorp portfolio, it also includes seamless integration with other Hashicorp projects such as Hashicorp Vault, which we have covered in several articles, or Hashicorp Consul, which helps to provide additional capabilities in terms of security, configuration management, and communication between the different workloads.

Nomad vs Kubernetes: How Nomad Works?

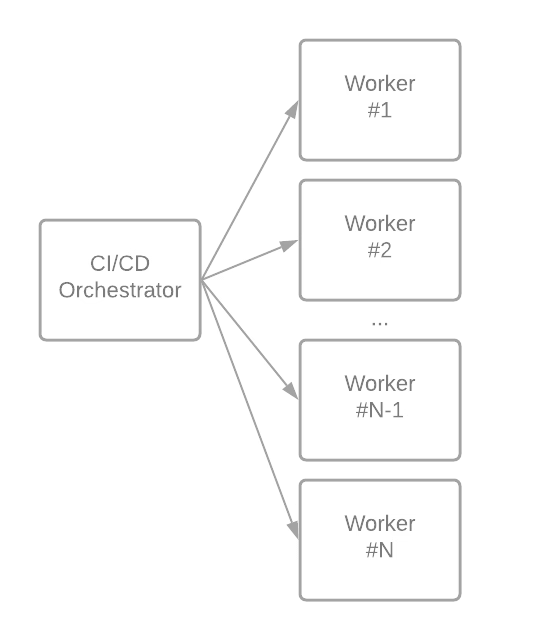

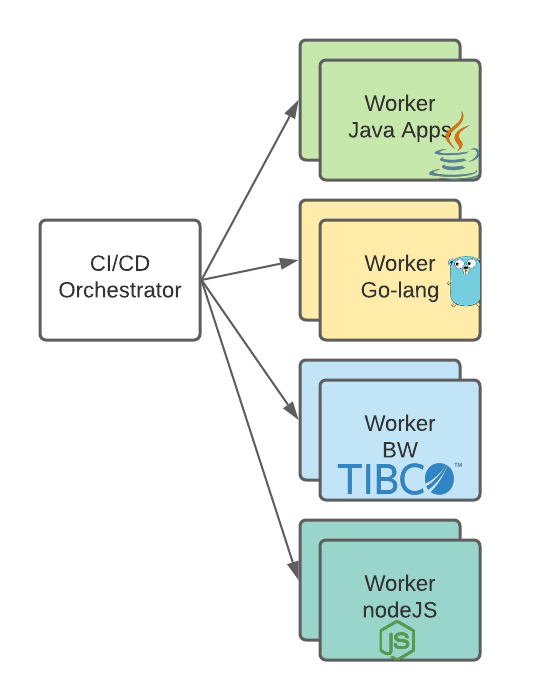

As commented above, Nomad covers everything with a single-component approach. A nomad binary is an agent that can work in server mode or client mode, depending on the role of the machine executing it.

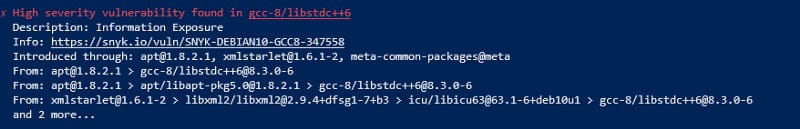

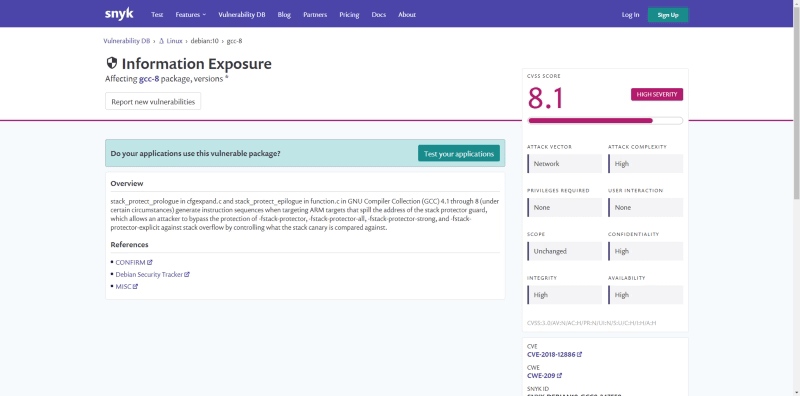

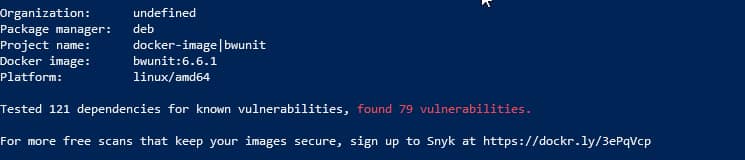

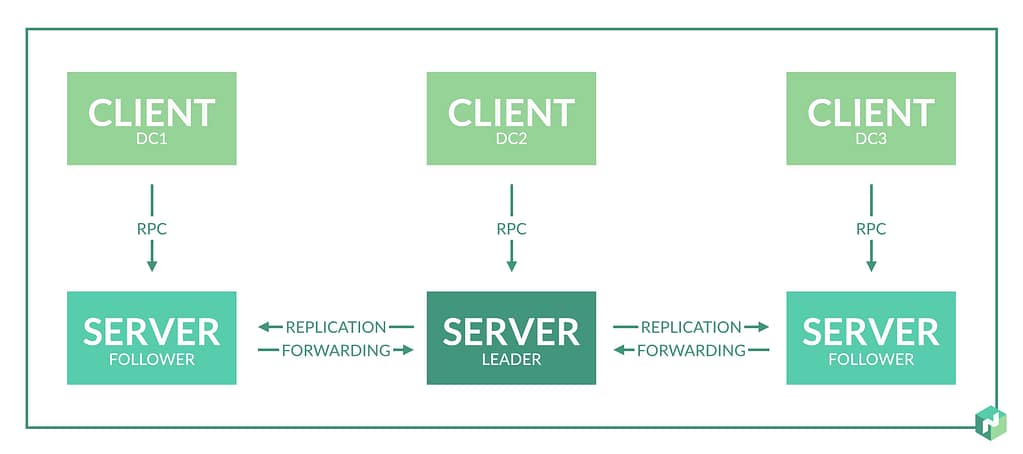

So Nomad is based on a Nomad cluster, a set of machines running a nomad agent in server mode. Those servers are split depending on the role of the leader or followers. The leader performs most of the cluster management, and the followers can create scheduling plans and submit them to the leader for approval and execution. This is represented in the picture below from the Hashicorp Nomad official page:

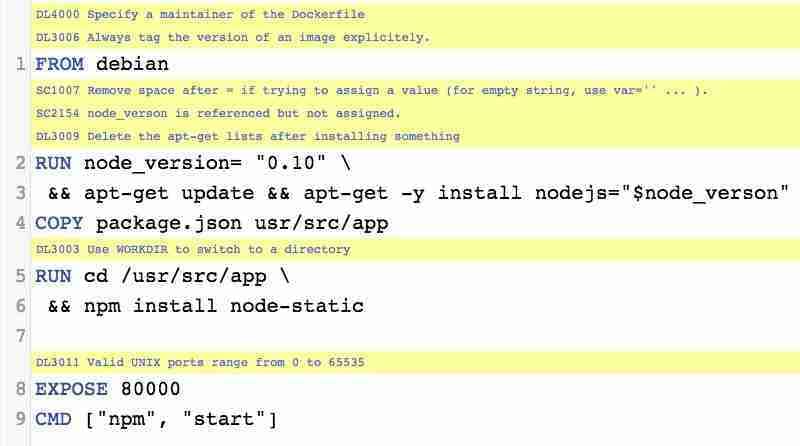

Once we have the cluster ready, we need to create our jobs, and a job is a definition of the task we would like to execute on the Nomad cluster we have previously set up. A task is the smallest unit of work in Nomad. Here is where the flexibility comes to Nomad because the task driver executes each task, allowing different drivers to execute various workloads. This is how following the same approach, we will have a docker driver to run our container deployment or an exec driver to execute it on top of a virtual infrastructure. Still, you can create your task drivers following a plugin mechanism that you can read more about here.

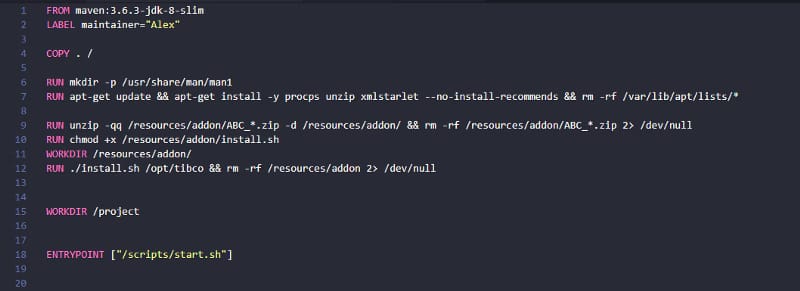

Jobs and Task are defined using a text-based approach but not following the usual YAML or JSON kind of files but a different format, as you can see in the picture below (click here to download the whole file from the GitHub Nomad Samples repo):

Is Nomad a Replace for Kubernetes?

It is a complex question to answer, and even Hashicorp they have documented different strategies. You can undoubtedly use Nomad to run container-based deployments instead of running them on Kubernetes. But at the same time, they also position the solution alongside Kubernetes to run some workloads on one solution and another on the other.

In the end, both try to solve and address the same challenges in terms of scalability, infrastructure sharing and optimization, agility, flexibility, and security from traditional deployments.

Kubernetes focus on different kind of workloads, but everything follows the same deployment mode (container-based) and adopts recent paradigms (service-based, microservice patterns, API-led, and so on) with a robust architecture that allows excellent scalability, performance, flexibility, and with adoption levels in the industry that has become the current de-facto only alternative for modern workload orchestration platforms.

But, at the same time, it also requires effort in management and transforming existing applications to new paradigms.

On the other hand, Nomad tries to address it differently, minimizing the change of the existing application to take advantage of the platform’s benefits and reducing the overhead of management and complexity that a usual Kubernetes platform provides, depending on the situation.

📚 Want to dive deeper into Kubernetes? This article is part of our comprehensive Kubernetes Architecture Patterns guide, where you’ll find all fundamental and advanced concepts explained step by step.