Introduction

When working with complex Helm deployments, mastering loops is just one piece of the puzzle. For a comprehensive understanding of Helm from fundamentals to advanced patterns, check out our complete Helm Charts & Kubernetes Package Management Guide.

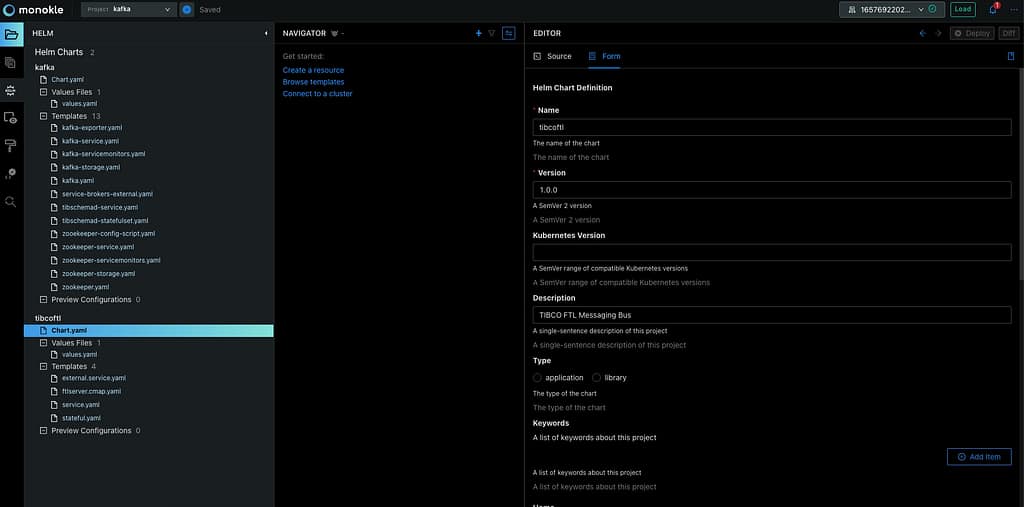

Helm charts are becoming the de facto solution for packaging a Kubernetes deployment so that you can distribute it or quickly install it on your system.

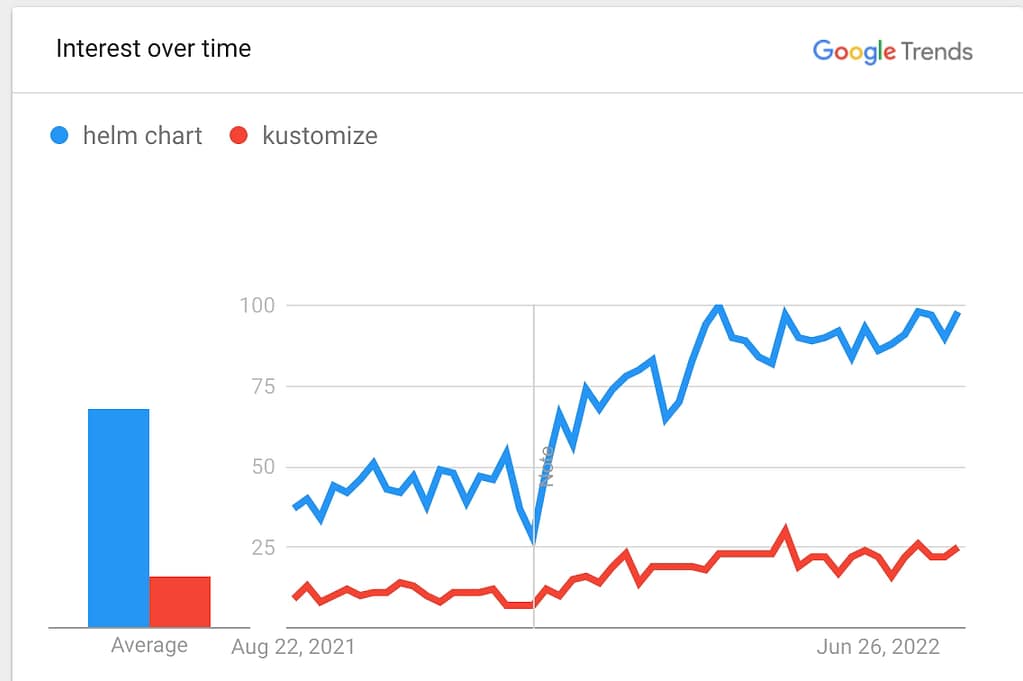

Often described as the apt of Kubernetes because of its similarity to the long-standing package manager of Debian-based GNU/Linux distributions, Helm seems to keep growing in popularity month after month compared with similar solutions, even ones more tightly integrated into Kubernetes such as Kustomize, as the Google Trends chart shows.

But creating Helm charts is not as easy as it looks. If you have already worked on one, you probably got stuck at some point or spent a lot of time trying to get some things done. If this is the first time you are creating one, or you are trying to do something advanced, I hope these tricks help you on your journey. Today we are going to cover one of the most important ones: Helm loops.

Helm Loops Introduction

If you look at any Helm chart, you will surely find a lot of conditional blocks. Pretty much everything is wrapped in an if/else structure based on the values.yaml files you create. Things get a little trickier when we talk about loops, but the great thing is that you can run a loop inside your Helm charts using the range primitive.

How to create a Helm Loop?

Using the range primitive is quite simple, as you only need to specify the element you want to iterate over, as shown in the snippet below:

    {{- range .Values.pizzaToppings }}
    - {{ . | title | quote }}
    {{- end }}

This is a simple example in which the template iterates over the values you have assigned to pizzaToppings in your values.yaml.
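To see the loop in context, here is a minimal sketch of a complete template built around that snippet. The file name, the ConfigMap name, and the three topping values are assumptions made for illustration; they do not come from a real chart.

```yaml
# templates/configmap.yaml (hypothetical file and resource names)
# Assumed values.yaml for this sketch:
#   pizzaToppings:
#     - mushrooms
#     - cheese
#     - peppers
# Expected content of data.toppings after rendering:
#   - "Mushrooms"
#   - "Cheese"
#   - "Peppers"
apiVersion: v1
kind: ConfigMap
metadata:
  name: pizza-toppings
data:
  toppings: |-
    {{- range .Values.pizzaToppings }}
    - {{ . | title | quote }}
    {{- end }}
```

Running helm template against the chart directory renders the manifest locally, which is a quick way to check what the loop produces before installing anything.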

There are some concepts to keep in mind in this situation:

- You can easily access everything inside the structure you are looping over. So, if each pizza topping has additional fields, you can access them with something similar to this:

    {{- range .Values.pizzaToppings }}
    - {{ .ingredient.name | title | quote }}
    {{- end }}

And this will access a structure similar to this one in your values.yaml:

    pizzaToppings:
      - ingredient:
          name: Pineapple
          weight: 3

The good thing is that you can access the underlying attributes without repeating the whole parent hierarchy down to the looped structure, because inside the range section the scope has changed: . now refers to the root of each element we are iterating over.
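If you prefer not to rely on the implicit scope change, Go templates (which Helm uses) also let range assign the position and the current element to named variables. The sketch below assumes a hypothetical ConfigMap and made-up topping values; the variable names $index and $topping are arbitrary choices.

```yaml
# templates/configmap.yaml (hypothetical file and resource names)
# Assumed values.yaml for this sketch:
#   pizzaToppings:
#     - ingredient:
#         name: pineapple
#         weight: 3
#     - ingredient:
#         name: apple
#         weight: 2
# Expected content of data.toppings after rendering:
#   - 0: "Pineapple" (3)
#   - 1: "Apple" (2)
apiVersion: v1
kind: ConfigMap
metadata:
  name: pizza-toppings
data:
  toppings: |-
    {{- range $index, $topping := .Values.pizzaToppings }}
    - {{ $index }}: {{ $topping.ingredient.name | title | quote }} ({{ $topping.ingredient.weight }})
    {{- end }}
```

Inside the loop, . still points at the current element, so .ingredient.name and $topping.ingredient.name are interchangeable here; the named form mainly helps readability when loops are nested.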

How to access parent elements inside a Helm Loop?

In the previous section, we saw how easily we can access the inner attributes of the looped structure thanks to the change of scope, but that change also raises an issue. If I want to access an element in the parent of the structure, or somewhere else in my values.yaml file, how can I do it?

The good news is that there is a great answer to that, but to get there we need to understand a little about scopes in Helm.

As mentioned, . refers to the root element of the current scope. If you have not defined a range section or another primitive that switches the context, . always refers to the top-level context of the chart, which exposes the content of your values.yaml under .Values. That is why, when you read a Helm chart, you see structures referenced as .Values.x.y.z. But we have already seen that this changes inside a range section, so that notation no longer works there.

To solve that, we have the $ variable, which always refers to the root context, and therefore to your values.yaml through $.Values, no matter what the current scope is. So that means that if I have the following values.yaml:

    base:
      type: slim
    pizzaToppings:
      - ingredient:
          name: Pineapple
          weight: 3
      - ingredient:
          name: Apple
          weight: 3

And I want to refer to the base type inside a range section similar to the previous one, I can do it using the following snippet:

    {{- range .Values.pizzaToppings }}
    - {{ .ingredient.name | title | quote }} {{ $.Values.base.type }}
    {{- end }}

That will generate the following output (the quote function wraps each name in double quotes):

    - "Pineapple" slim
    - "Apple" slim
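An alternative to $ is to store the outer value in a template variable before the loop starts, since variables declared outside a range section remain visible inside it. This is a minimal sketch, assuming base is a map with a type key; the file name, the ConfigMap name, and the variable name $baseType are hypothetical.

```yaml
# templates/configmap.yaml (hypothetical file and resource names)
# Assumed values.yaml for this sketch:
#   base:
#     type: slim
#   pizzaToppings:
#     - ingredient:
#         name: pineapple
#     - ingredient:
#         name: apple
# Expected content of data.toppings after rendering:
#   - "Pineapple" slim
#   - "Apple" slim
apiVersion: v1
kind: ConfigMap
metadata:
  name: pizza-order
data:
  toppings: |-
    {{- $baseType := .Values.base.type }}
    {{- range .Values.pizzaToppings }}
    - {{ .ingredient.name | title | quote }} {{ $baseType }}
    {{- end }}
```

Both approaches render the same result. Using $ keeps the template shorter, while a named variable can be easier to read when the same outer value is used many times inside the loop.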

So I hope this trick helps you create, modify, or improve your Helm charts in the future by using Helm loops without any further concern!