Learn how to manage observability requirements as part of your microservice ecosystem

“May you live in interesting times” is said to be the English translation of a Chinese curse, and it couldn’t be a more fitting description of the times we’re living through in application architecture and application development.

All the changes brought by the cloud-native approach, including the new technologies that come with it such as containers, microservices, APIs, DevOps, and so on, have transformed the situation entirely for any architect, developer, or system administrator.

It’s as if you went to bed in 2003 and woke up in 2020: all the changes, all the new philosophies, but also all the new challenges that come with those changes and capabilities, are things we need to deal with today.

I think we can all agree that the present is polyglot in terms of application development. Today, no big company or enterprise is expected to find a single technology or a single language that can support all of its in-house products. We all follow and agree on the “right tool for the right job” principle, and we build a toolset of technologies to solve the different use cases or patterns we need to face.

But that agreement and movement also come with their own challenges in areas we usually don’t think about, such as tracing and observability in general.

When we use a single technology, everything is more straightforward. Defining a common strategy to trace your end-to-end flows is easy: you only need to embed the logic into the common development framework or library that all your developments use. You would probably define a common set of headers carrying all the data you need to trace every request effectively, and a standard protocol to send those traces to a central system that can store and correlate them and reconstruct the end-to-end flow. But try to move that to a polyglot ecosystem: should I write my framework or library for each language or technology I need to use now, or might use in the future? Does that make sense?
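To make the single-technology case concrete, here is a minimal sketch of the kind of header logic such a shared library might embed. The header names and helper functions are hypothetical, invented only for this illustration, and are not taken from any specific framework.

```python
import secrets

# Hypothetical header names, chosen only for this illustration.
TRACE_ID_HEADER = "X-Trace-Id"
SPAN_ID_HEADER = "X-Span-Id"
PARENT_SPAN_ID_HEADER = "X-Parent-Span-Id"


def new_id(num_bytes: int = 8) -> str:
    """Return a random hexadecimal identifier."""
    return secrets.token_hex(num_bytes)


def start_trace() -> dict:
    """Create the headers for the first request of an end-to-end flow."""
    return {TRACE_ID_HEADER: new_id(16), SPAN_ID_HEADER: new_id()}


def child_headers(incoming: dict) -> dict:
    """Build headers for an outgoing call made while handling `incoming`.

    The trace ID is kept so every hop can be correlated, and the current
    span becomes the parent of the newly created one.
    """
    return {
        TRACE_ID_HEADER: incoming[TRACE_ID_HEADER],
        PARENT_SPAN_ID_HEADER: incoming[SPAN_ID_HEADER],
        SPAN_ID_HEADER: new_id(),
    }
```

Every service built on the shared library would call something like `child_headers` before each outgoing request. The difficulty described above is that this small piece of logic would have to be rewritten and maintained for every language in the ecosystem.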

And not only that: should I slow down the adoption of a new technology that could quickly help the business because a shared team first needs to provide this kind of standard component? And that is the best case, the one in which I have enough people who know how the internals of my framework work and who have the skills in all the languages we’re adopting to do it quickly and efficiently. It seems unlikely, right?

So, new challenges call for new solutions. I have already talked about Service Mesh and the capabilities it provides from a communication perspective, and if you don’t remember, you can take a look at those posts:

But it also provides capabilities from other perspectives, and tracing and observability are among them. When we cannot build those features into every technology we need to use, we can build them into a general layer that all of them support, and that is the case with Service Mesh.

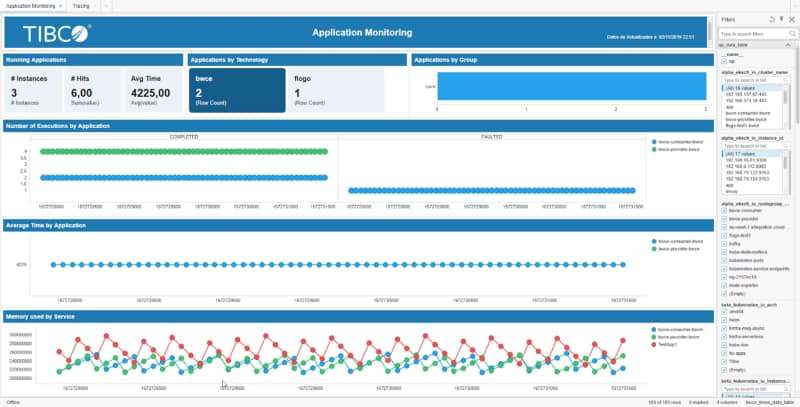

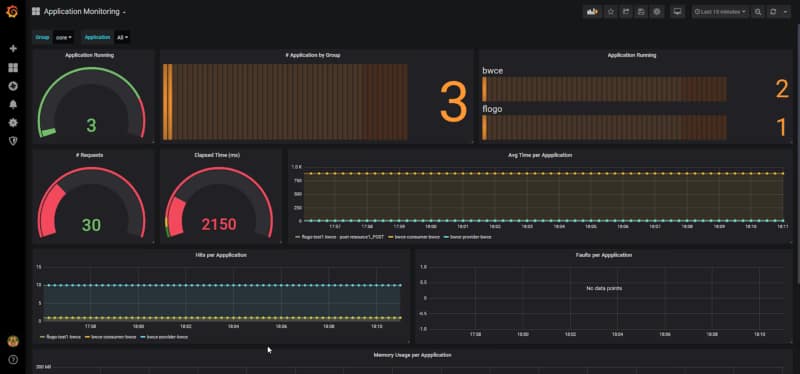

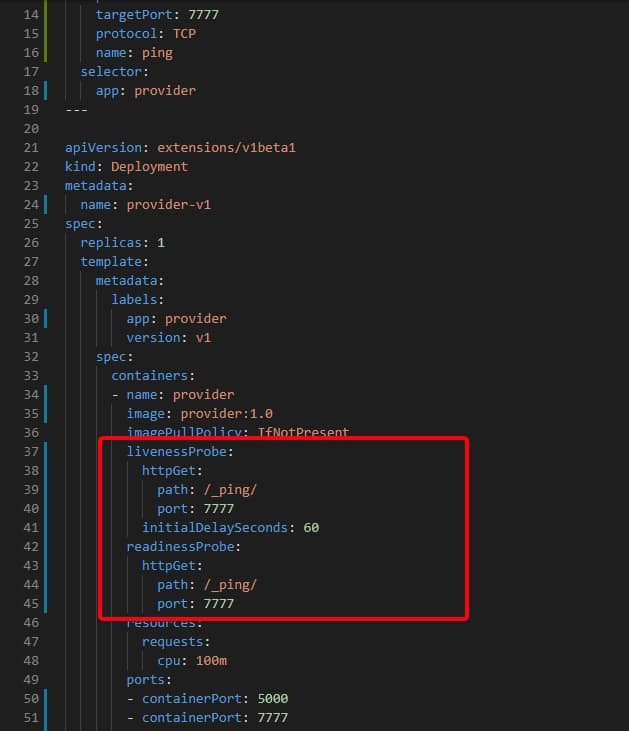

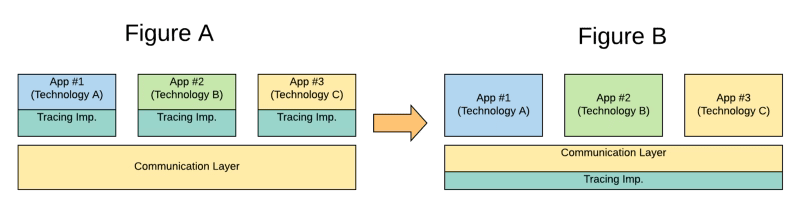

Service Mesh is the standard way for your microservices to communicate synchronously in an east-west fashion, covering service-to-service communication. So, if you can also include the tracing capability in that component, you get end-to-end tracing without needing to implement anything in each of the different technologies you use to implement your logic. In other words, you move from Figure A to Figure B in the picture below:

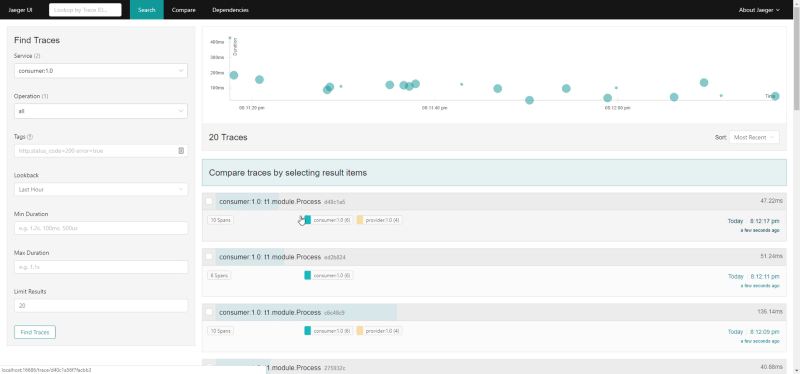

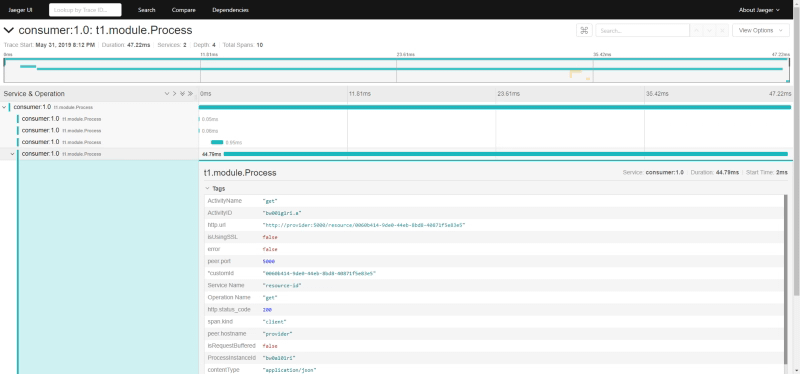

And that is what most Service Mesh technologies are doing. For example, Istio, one of the default choices when it comes to Service Mesh, includes an implementation of the OpenTracing standard. This allows integration with any tool that supports the standard, so you can collect all the tracing information for any technology that communicates across the mesh.

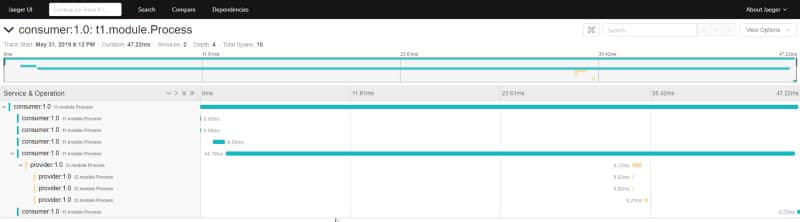

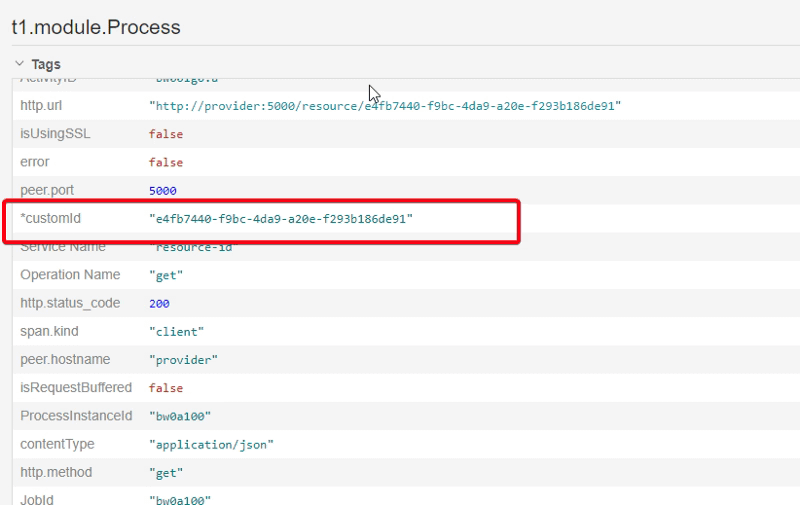

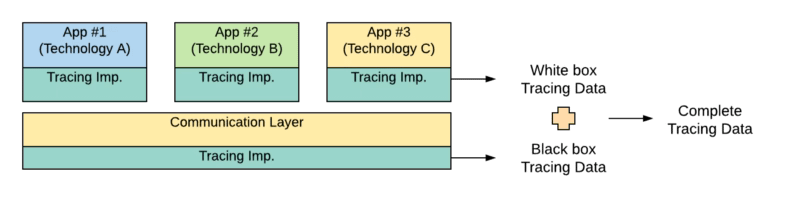
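One practical detail is worth keeping in mind here. Istio’s distributed tracing documentation notes that, for the sidecars to stitch individual hops into a single trace, each application is still expected to copy a small set of tracing headers from its incoming request to its outgoing requests. The sketch below shows that forwarding step in plain Python. The function name is hypothetical, and the header list reflects the B3-style headers described in Istio’s documentation, so check it against the version you run.

```python
# Header names listed in Istio's distributed tracing documentation for
# B3-style propagation; verify them against the Istio version in use.
TRACE_HEADERS = (
    "x-request-id",
    "x-b3-traceid",
    "x-b3-spanid",
    "x-b3-parentspanid",
    "x-b3-sampled",
    "x-b3-flags",
)


def forward_trace_headers(incoming: dict) -> dict:
    """Copy tracing headers from an inbound request to an outbound one.

    Header names are matched case-insensitively; anything that is not a
    tracing header is left out of the result.
    """
    lowered = {name.lower(): value for name, value in incoming.items()}
    return {name: lowered[name] for name in TRACE_HEADERS if name in lowered}
```

This is far less work than writing a tracing framework per language: the service does not create spans or talk to a tracing backend, it only passes along what the mesh already generated.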

So, that change of mindset allows us to easily integrate different technologies without needing any special support for those standards in any specific technology. Does that mean that implementing those standards in those technologies is no longer relevant? Not at all. It is still relevant, because the technologies that also support those standards can provide even more insight. After all, the Service Mesh only knows part of the picture: the flow that happens outside of each technology. It’s something similar to a black-box approach. Adding support for the same standard inside each technology provides an additional white-box view, as you can see graphically in the image below:

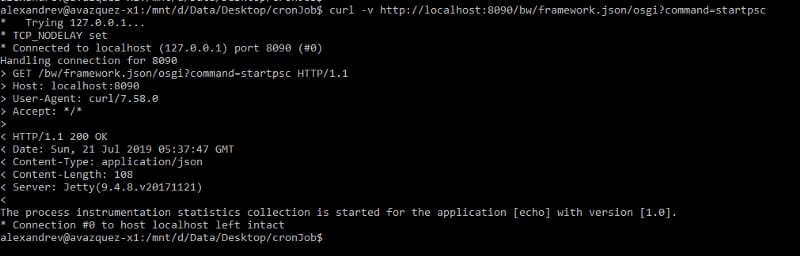

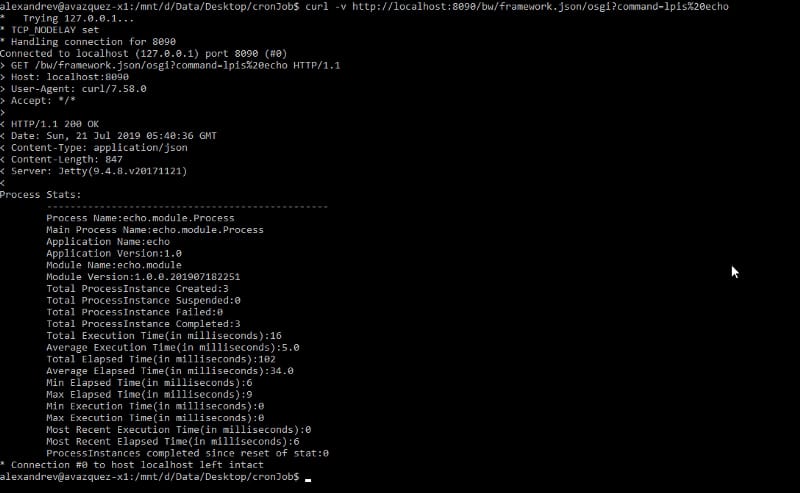

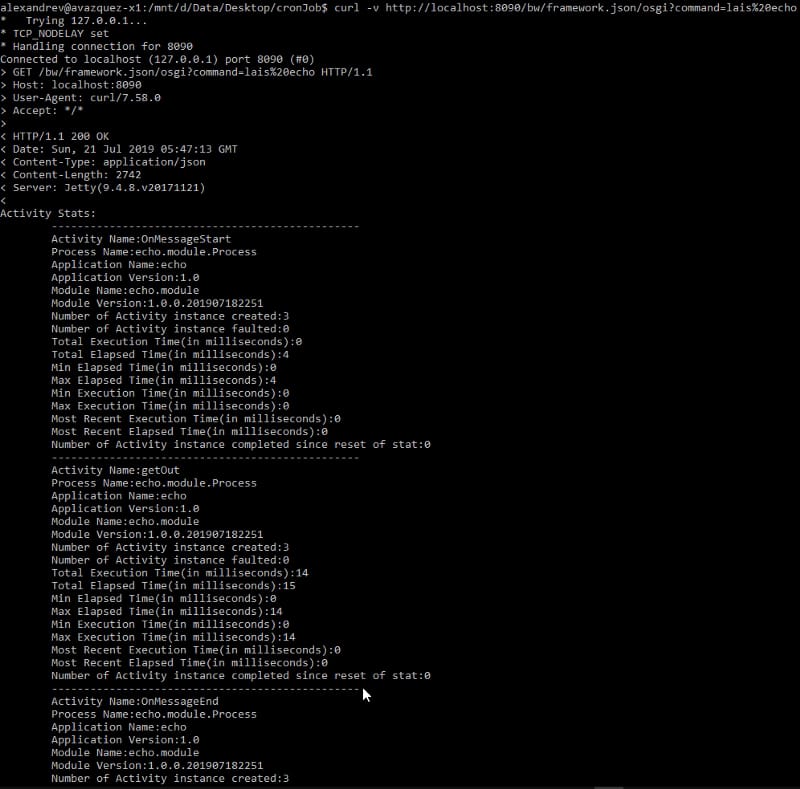

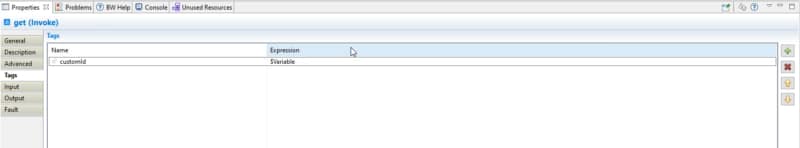

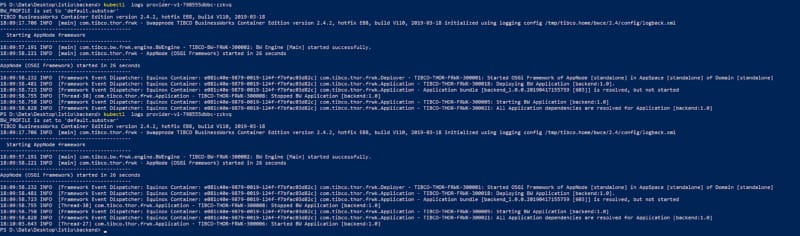
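As a rough illustration of the white-box side, the sketch below records spans for the steps that happen inside a single service, which is exactly the part the mesh cannot see. It uses only the Python standard library; the `Span` and `Recorder` classes are hypothetical stand-ins for what an OpenTracing-compliant library would provide, and parent spans are identified by name purely to keep the example short.

```python
import time
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Iterator, List, Optional


@dataclass
class Span:
    name: str
    trace_id: str
    parent: Optional[str]  # name of the enclosing span, None for the root
    duration_s: float = 0.0


@dataclass
class Recorder:
    """Collects the spans created inside one service (illustrative only)."""
    trace_id: str
    spans: List[Span] = field(default_factory=list)
    _stack: List[str] = field(default_factory=list)

    @contextmanager
    def span(self, name: str) -> Iterator[Span]:
        # The innermost open span, if any, is the parent of the new one.
        parent = self._stack[-1] if self._stack else None
        current = Span(name, self.trace_id, parent)
        self._stack.append(name)
        started = time.perf_counter()
        try:
            yield current
        finally:
            # Spans are stored when they finish, so children come first.
            current.duration_s = time.perf_counter() - started
            self._stack.pop()
            self.spans.append(current)
```

A handler could wrap its work in `with recorder.span("handle-order"):` and nest `with recorder.span("query-db"):` inside it. If the recorder is created with the trace ID received from the mesh, these internal spans line up with the service-to-service hops the mesh already reports.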

We have already talked about technologies that comply with the OpenTracing standard, such as TIBCO BusinessWorks Container Edition, which you can revisit here:

So, support for industry standards in these technologies is still needed, and it is even a competitive advantage: without developing your own tracing framework, you can obtain complete tracing data in addition to what is already provided at the Service Mesh level.