In a previous post, we described how to set up Prometheus to work with your TIBCO BusinessWorks Container Edition apps; you can read more about it here.

In that post, we mentioned that there are several ways to tell Prometheus which services are ready to be monitored. We chose the simplest one at the time, the static_configs configuration, which means:

Don’t worry Prometheus, I’ll let you know the IP you need to monitor and you don’t need to worry about anything else.

This is useful for a quick test in a local environment, when you want to check your Prometheus setup or work on the Grafana side to design the best possible dashboard for your needs.

But this is not very useful in a real production environment, even less so in a Kubernetes cluster, where services go up and down continuously. To solve this, Prometheus lets us choose among several ways to perform this “service discovery”. The official Prometheus documentation covers the different service discovery techniques in detail, but at a high level these are the main ones available:

- azure_sd_configs: Azure Service Discovery

- consul_sd_configs: Consul Service Discovery

- dns_sd_configs: DNS Service Discovery

- ec2_sd_configs: EC2 Service Discovery

- openstack_sd_configs: OpenStack Service Discovery

- file_sd_configs: File Service Discovery

- gce_sd_configs: GCE Service Discovery

- kubernetes_sd_configs: Kubernetes Service Discovery

- marathon_sd_configs: Marathon Service Discovery

- nerve_sd_configs: AirBnB’s Nerve Service Discovery

- serverset_sd_configs: Zookeeper Serverset Service Discovery

- triton_sd_configs: Triton Service Discovery

- static_configs: Static IP/DNS for the configuration. No Service Discovery.

And if all these options are not enough and you need something more specific, there is an API available to extend Prometheus and create your own Service Discovery technique. You can find more info about it here:

But this is not our case. For us, Kubernetes Service Discovery is the right choice. So, we’re going to change the static configuration we had in the previous post:

```yaml
- job_name: 'bwdockermonitoring'
  honor_labels: true
  static_configs:
    - targets: ['phenix-test-project-svc.default.svc.cluster.local:9095']
      labels:
        group: 'prod'
```

for this Kubernetes configuration:

```yaml
- job_name: 'bwce-metrics'
  scrape_interval: 5s
  metrics_path: /metrics/
  scheme: http
  kubernetes_sd_configs:
    - role: endpoints
      namespaces:
        names:
          - default
  relabel_configs:
    - source_labels: [__meta_kubernetes_service_label_app]
      separator: ;
      regex: (.*)
      replacement: $1
      action: keep
    - source_labels: [__meta_kubernetes_endpoint_port_name]
      separator: ;
      regex: prom
      replacement: $1
      action: keep
    - source_labels: [__meta_kubernetes_namespace]
      separator: ;
      regex: (.*)
      target_label: namespace
      replacement: $1
      action: replace
    - source_labels: [__meta_kubernetes_pod_name]
      separator: ;
      regex: (.*)
      target_label: pod
      replacement: $1
      action: replace
    - source_labels: [__meta_kubernetes_service_name]
      separator: ;
      regex: (.*)
      target_label: service
      replacement: $1
      action: replace
    - source_labels: [__meta_kubernetes_service_name]
      separator: ;
      regex: (.*)
      target_label: job
      replacement: $1
      action: replace
    - separator: ;
      regex: (.*)
      target_label: endpoint
      replacement: $1
      action: replace
```

As you can see, this is quite a bit more complex than the previous configuration, but it is not as complex as it may seem at first glance. Let’s review it part by part.

```yaml
- role: endpoints
  namespaces:
    names:
      - default
```

This says that we’re going to use the endpoints role to discover the endpoints created under the default namespace. The rest of the configuration specifies the changes we need to make to find the metrics endpoints for Prometheus.
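For this discovery to work, the Prometheus server needs permission to read those objects from the Kubernetes API. The post does not show that part, so the following is only a minimal sketch, assuming Prometheus runs inside the cluster under a service account. The names prometheus-discovery and prometheus and the monitoring namespace are hypothetical; adapt them to your own deployment.

```yaml
# Read-only access to the objects the endpoints role looks at,
# limited to the default namespace used in the scrape job.
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: prometheus-discovery      # hypothetical name
  namespace: default
rules:
  - apiGroups: [""]
    resources: ["services", "endpoints", "pods"]
    verbs: ["get", "list", "watch"]
---
# Grants that Role to the service account Prometheus runs as.
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: prometheus-discovery      # hypothetical name
  namespace: default
subjects:
  - kind: ServiceAccount
    name: prometheus              # assumed service account name
    namespace: monitoring         # assumed namespace where Prometheus runs
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: prometheus-discovery
```

A namespaced Role is enough here because the job only watches the default namespace; watching several namespaces would need one binding per namespace or a ClusterRole.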

```yaml
scrape_interval: 5s
metrics_path: /metrics/
scheme: http
```

This says that we’re going to run the scrape every 5 seconds, over HTTP, on the path /metrics/.

And then, we have a relabel_configs section:
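To show where these three settings live, here is a small sketch of a complete prometheus.yml. The global block and its 15s value are assumptions added for illustration; only the job itself comes from the configuration in this post.

```yaml
global:
  scrape_interval: 15s        # assumed default applied to every other job

scrape_configs:
  - job_name: 'bwce-metrics'
    scrape_interval: 5s       # job-level value overrides the global one
    metrics_path: /metrics/   # path requested on every discovered target
    scheme: http              # plain HTTP, no TLS
    kubernetes_sd_configs:
      - role: endpoints
        namespaces:
          names:
            - default
```

With these values, each discovered target is scraped at http://&lt;address&gt;/metrics/ every 5 seconds, regardless of the global interval.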

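```yaml
- source_labels: [__meta_kubernetes_service_label_app]
  separator: ;
  regex: (.*)
  replacement: $1
  action: keep
- source_labels: [__meta_kubernetes_endpoint_port_name]
  separator: ;
  regex: prom
  replacement: $1
  action: keep
```

That means that we keep only the targets whose source label value matches the regex; every other target is dropped. The first rule uses (.*), which matches any value, so in practice it lets every target through. The second rule keeps only the endpoints whose port is named prom. Then come the replace rules: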
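To make the keep rules concrete, this is a sketch of a Kubernetes Service that would pass them. The service name and port 9095 are taken from the static target used in the previous configuration; the app label value and the selector are assumptions for illustration.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: phenix-test-project-svc
  namespace: default              # the only namespace the job watches
  labels:
    app: phenix-test-project      # assumed value; exposed as __meta_kubernetes_service_label_app
spec:
  selector:
    app: phenix-test-project      # assumed pod label
  ports:
    - name: prom                  # must be "prom" to match the second keep rule
      port: 9095
      targetPort: 9095
```

The port name is the important part: an endpoint whose port has any other name is dropped by the second rule, even if the application exposes metrics on it.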

```yaml
- source_labels: [__meta_kubernetes_namespace]
  separator: ;
  regex: (.*)
  target_label: namespace
  replacement: $1
  action: replace
- source_labels: [__meta_kubernetes_pod_name]
  separator: ;
  regex: (.*)
  target_label: pod
  replacement: $1
  action: replace
- source_labels: [__meta_kubernetes_service_name]
  separator: ;
  regex: (.*)
  target_label: service
  replacement: $1
  action: replace
- source_labels: [__meta_kubernetes_service_name]
  separator: ;
  regex: (.*)
  target_label: job
  replacement: $1
  action: replace
- separator: ;
  regex: (.*)
  target_label: endpoint
  replacement: $1
  action: replace
```

That means that we want to replace a label value, and we can do several things:

- Rename the label, using target_label to set the name of the final label that is created from the source_labels.

- Replace the value, using the regex parameter to define the regular expression applied to the original value and the replacement parameter to express the changes that we want to make to it.
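The rules in this post copy each value unchanged, so here is a sketch of two replace rules that actually transform it. The label names app_name and service_path are hypothetical, and the first rule assumes pods created by a Deployment, whose names end in two generated suffixes.

```yaml
relabel_configs:
  # Rewrite the value: strip the two generated suffixes from the pod name.
  # The regex must match the whole value; $1 is the first capture group.
  # For a pod named phenix-test-project-7d9f8b6c5-x2x9z this sets
  # app_name="phenix-test-project". If the regex does not match,
  # no label is written.
  - source_labels: [__meta_kubernetes_pod_name]
    regex: (.+)-[a-z0-9]+-[a-z0-9]+
    target_label: app_name          # hypothetical label name
    replacement: $1
    action: replace

  # Combine several source labels: their values are joined with the
  # separator before the regex is applied, giving for example
  # service_path="default/phenix-test-project-svc".
  - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name]
    separator: /
    regex: (.*)
    target_label: service_path      # hypothetical label name
    replacement: $1
    action: replace
```

The second rule also shows what the separator parameter is for: it only matters when source_labels lists more than one label.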

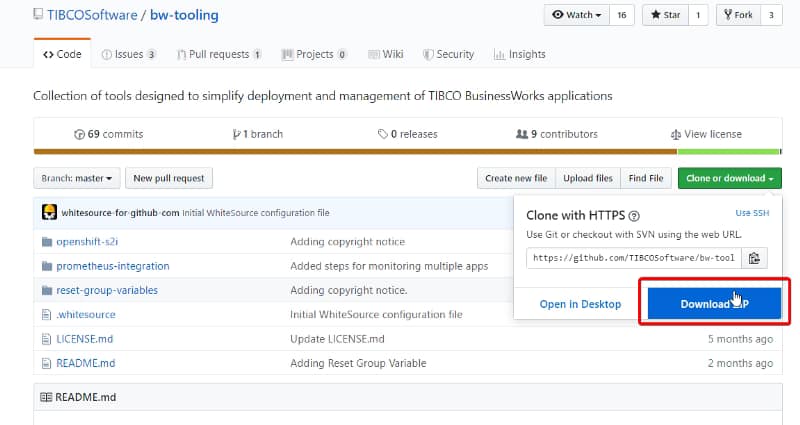

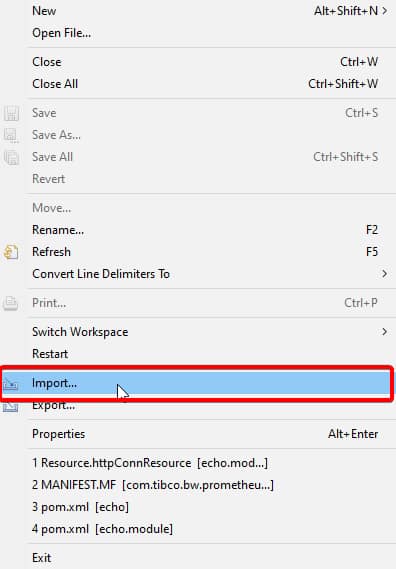

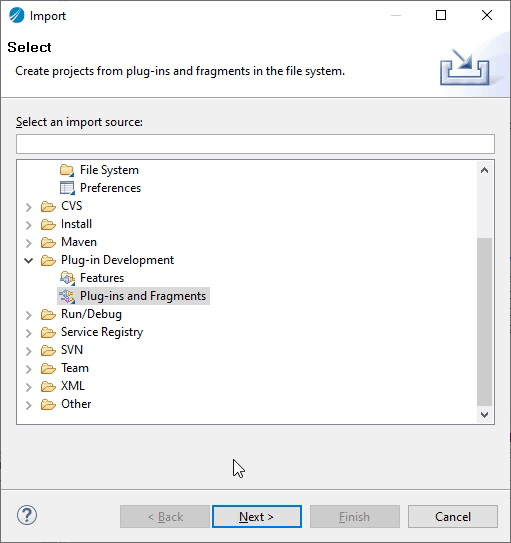

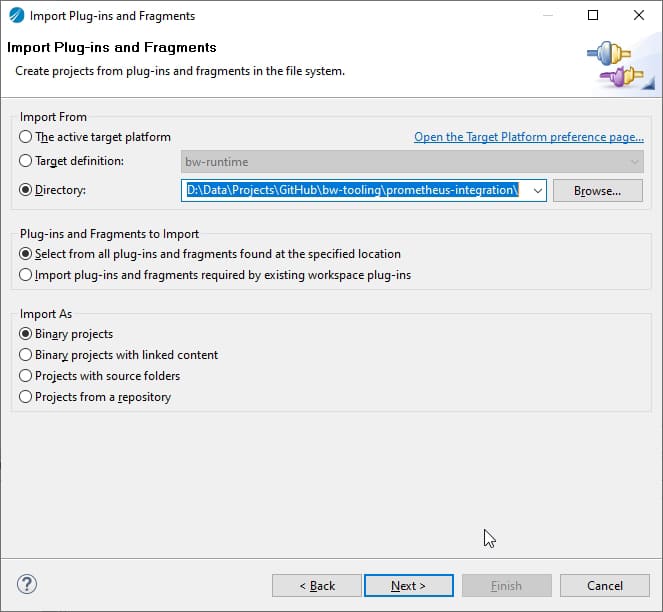

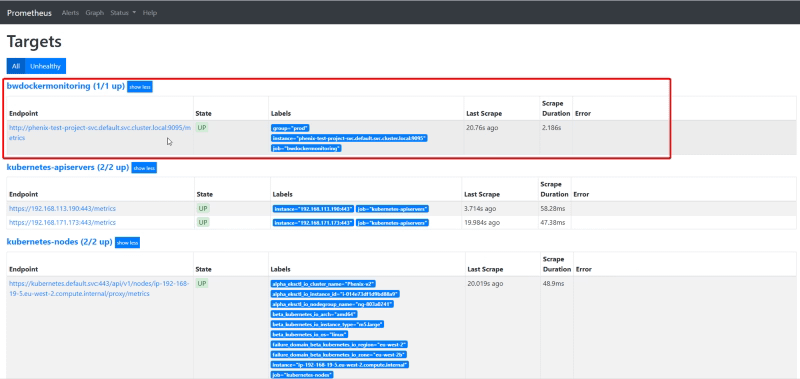

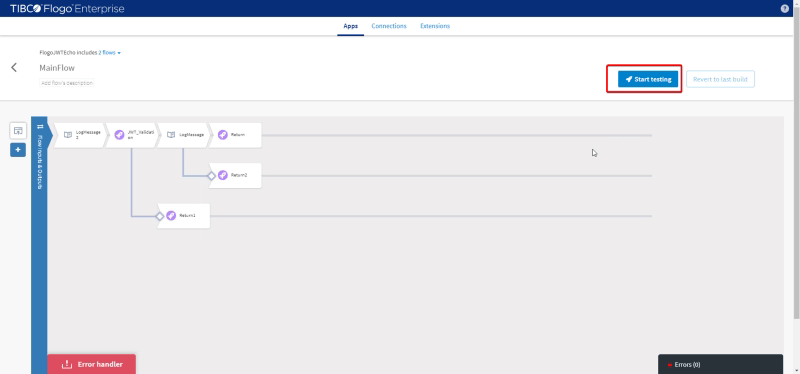

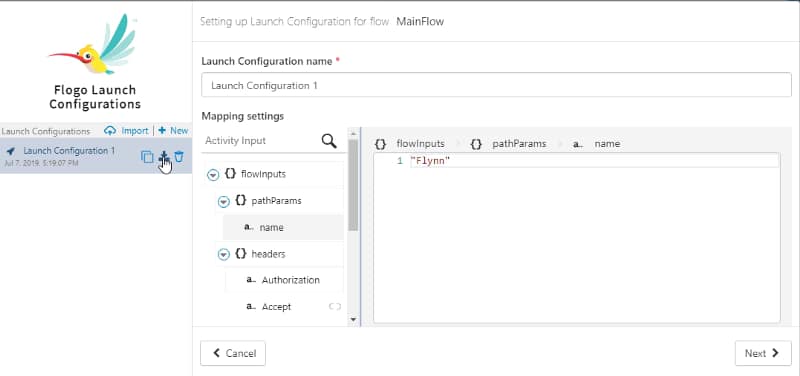

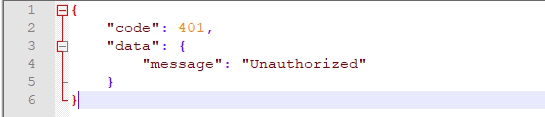

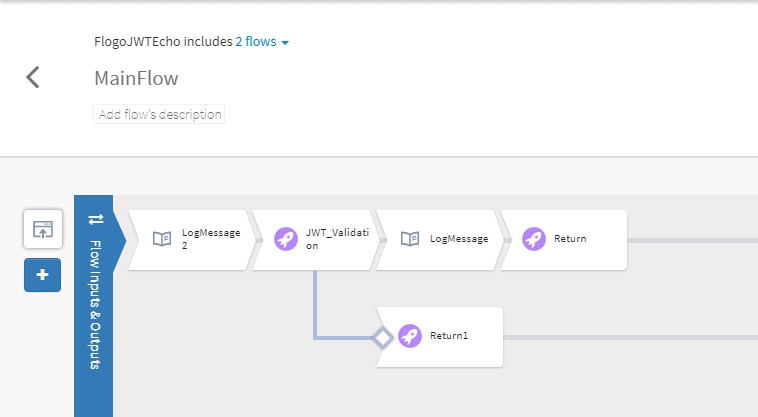

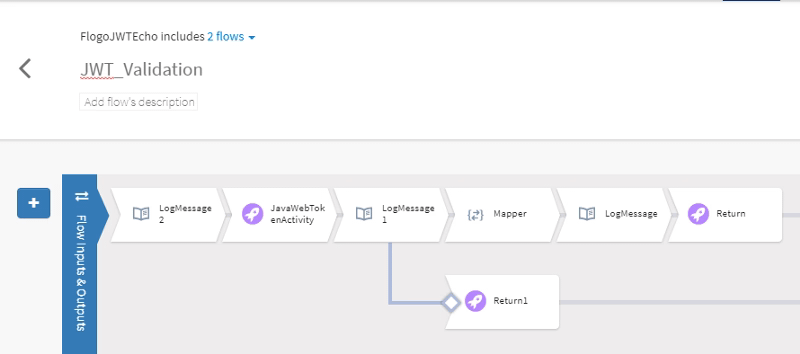

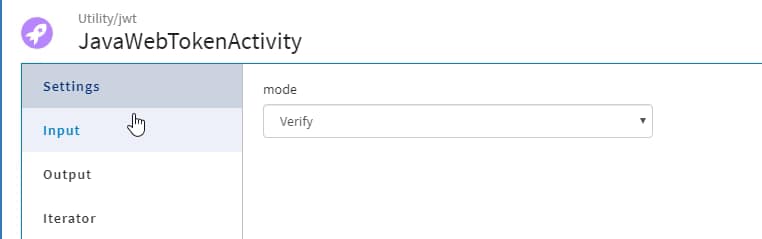

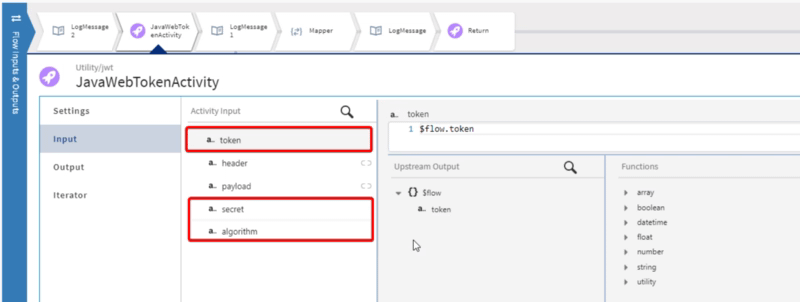

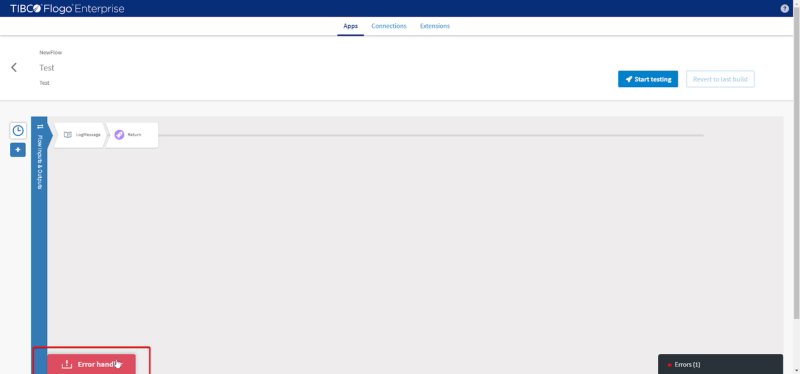

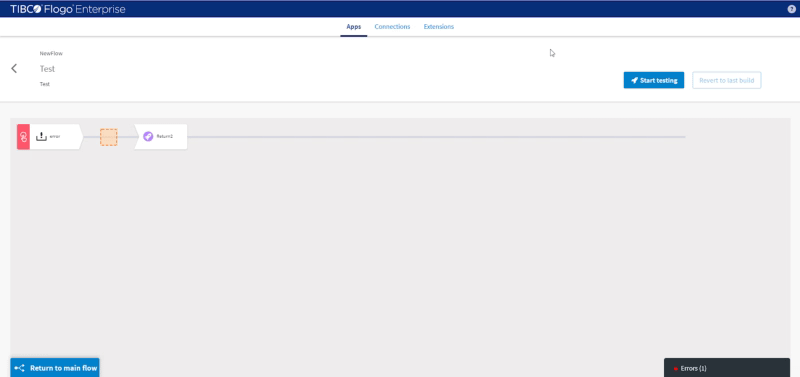

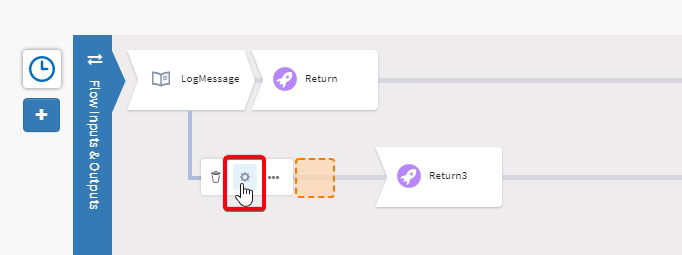

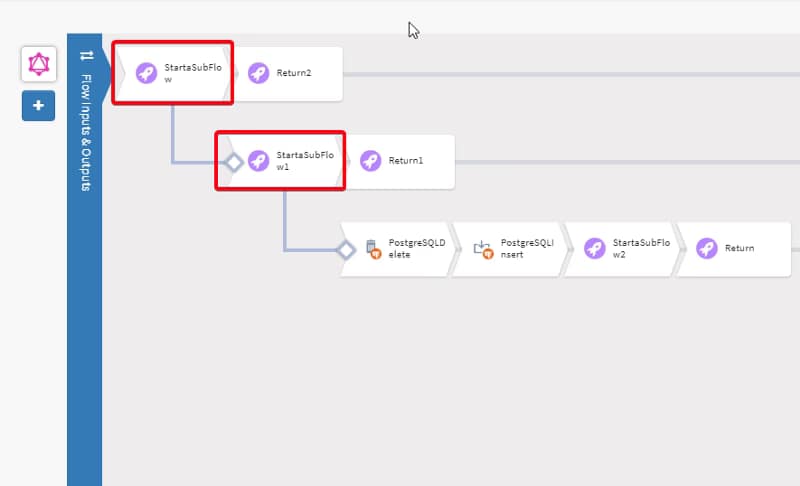

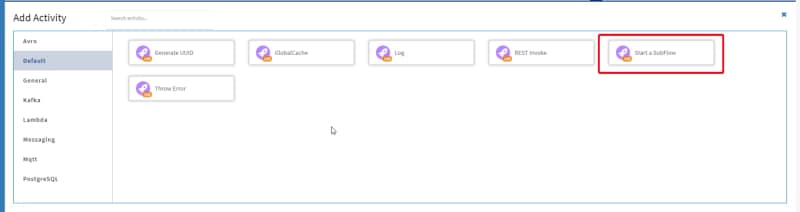

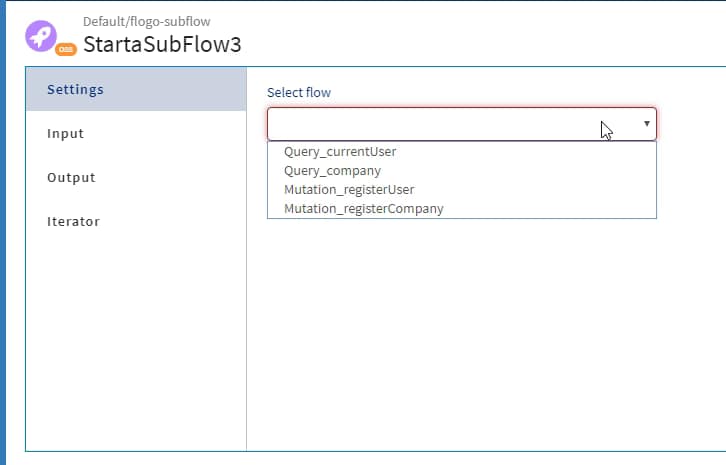

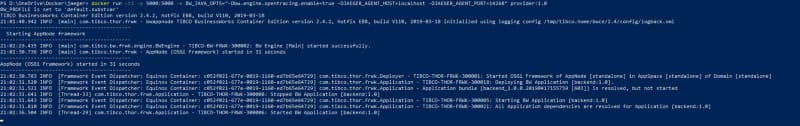

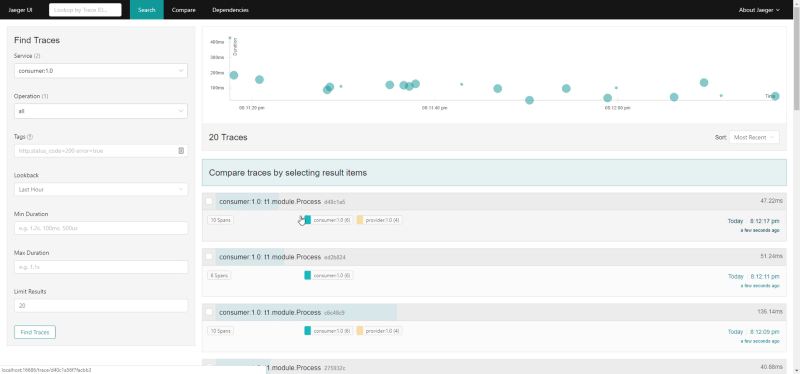

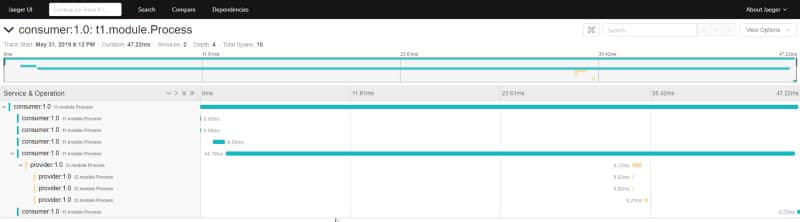

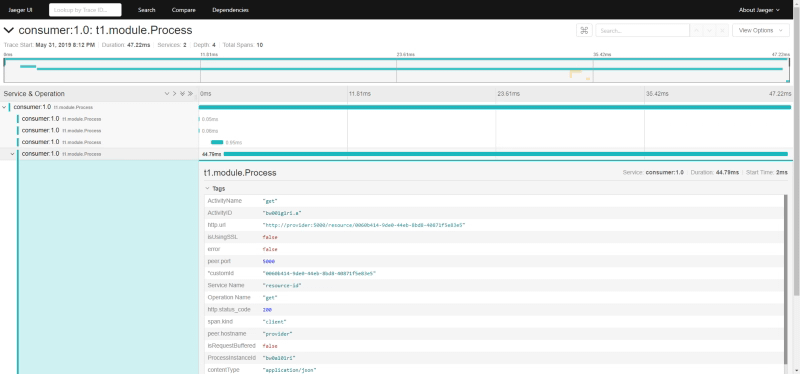

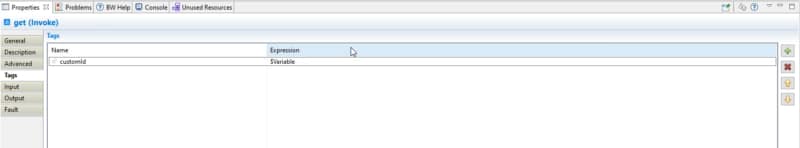

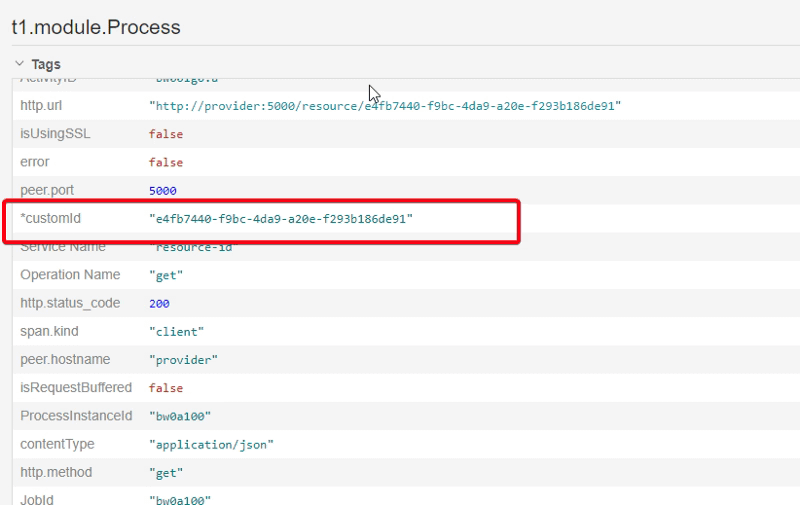

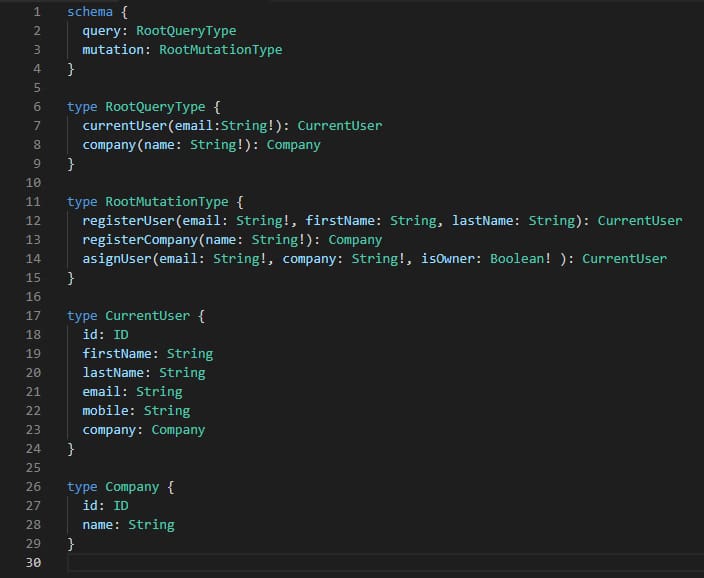

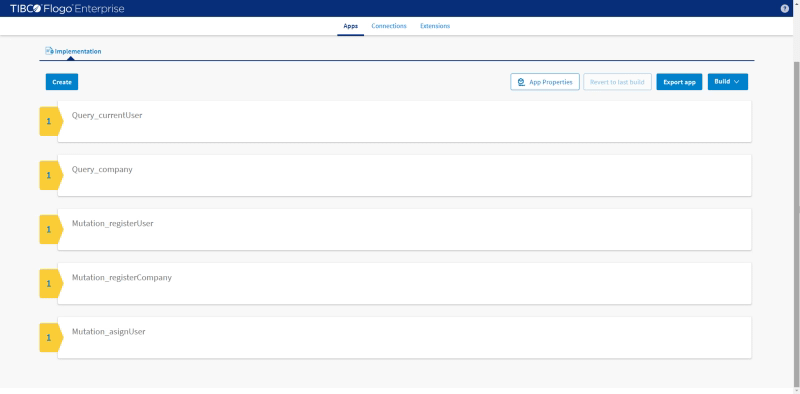

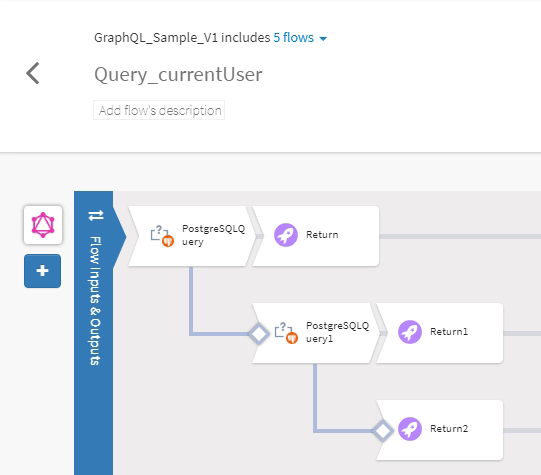

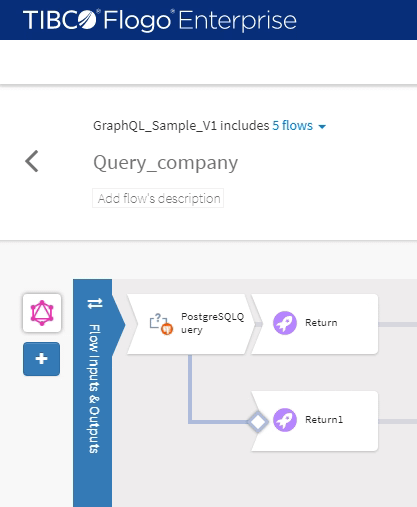

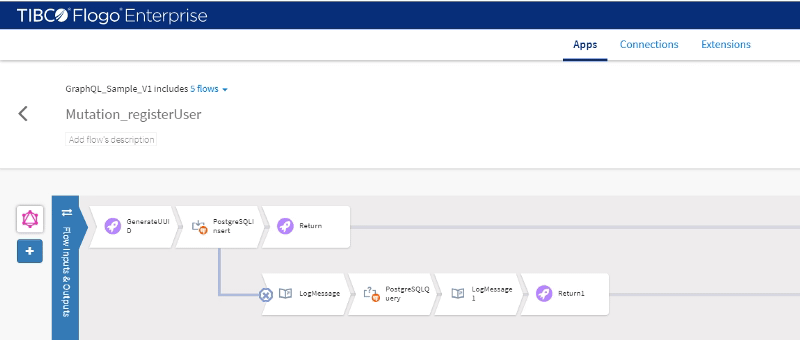

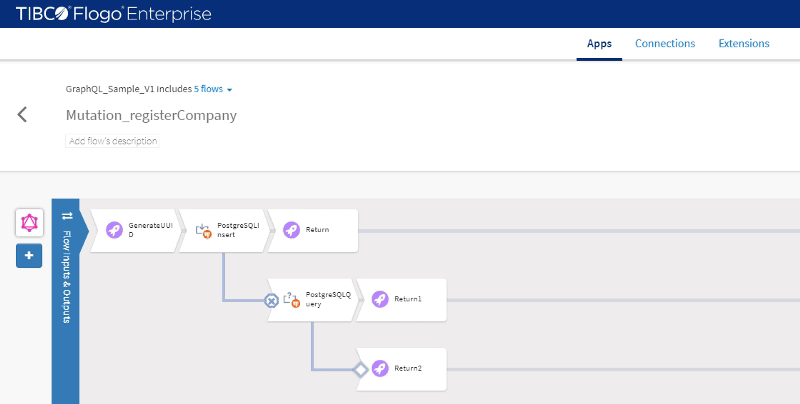

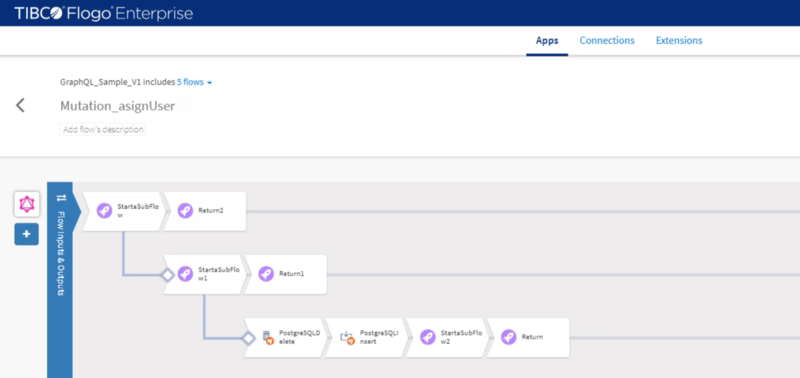

So now, after applying this configuration, when we deploy a new application in our Kubernetes cluster, like the project that we can see here:

we automatically see an additional target under our “bwce-metrics” job.
